There's no inherent problem with it, but the two may well tell you seemingly inconsistent things (for example, the Spearman correlation can be significant and suggest a relationship in one direction while the fitted line, and the corresponding Pearson correlation, is significant in the other direction). That's not a problem if you don't treat it as one. (For example, if you're doing the two things for different purposes, it may not matter that they suggest different directions of relationship, since the relationships they describe are of different kinds.)

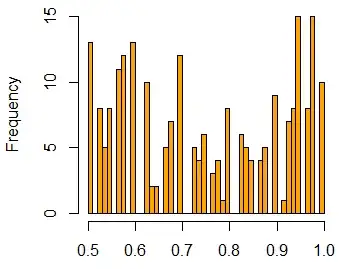

Here's a data set for which that's the case:

x:

-0.154 -1.614 0.200 -1.099 -1.337 -0.668 -1.289 -2.257 -0.601 0.411

-1.444 1.454 -0.443 -0.114 -0.122 -0.527 -0.305 0.122 0.199 -0.940

-1.776 0.422 2.245 1.550 -0.557 -0.261 0.275 -0.310 -0.367 0.459

30.000

y:

-0.947 1.367 0.703 0.409 0.474 -0.147 0.302 2.069 -0.210 0.535

-0.124 -1.031 -0.192 -0.058 0.363 -0.218 1.079 -1.083 -1.676 1.250

0.759 -1.058 -2.183 -0.741 -0.226 -0.912 0.401 0.997 -0.171 -1.901

30.000
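To check the claim on this data, here is a minimal sketch using only the Python standard library. It assumes the trailing 30.000 in each list is a 31st observation, i.e. a single point at (30, 30); the helper names `pearson`, `ranks` and `spearman` are my own. It gives only the correlations, not p-values; `scipy.stats.pearsonr` and `scipy.stats.spearmanr` would give both.

```python
from math import sqrt

# Data from above; the final 30.0 is taken to be a 31st point at (30, 30) (assumption).
x = [-0.154, -1.614, 0.200, -1.099, -1.337, -0.668, -1.289, -2.257, -0.601, 0.411,
     -1.444, 1.454, -0.443, -0.114, -0.122, -0.527, -0.305, 0.122, 0.199, -0.940,
     -1.776, 0.422, 2.245, 1.550, -0.557, -0.261, 0.275, -0.310, -0.367, 0.459,
     30.0]
y = [-0.947, 1.367, 0.703, 0.409, 0.474, -0.147, 0.302, 2.069, -0.210, 0.535,
     -0.124, -1.031, -0.192, -0.058, 0.363, -0.218, 1.079, -1.083, -1.676, 1.250,
     0.759, -1.058, -2.183, -0.741, -0.226, -0.912, 0.401, 0.997, -0.171, -1.901,
     30.0]

def pearson(a, b):
    """Ordinary product-moment correlation."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    saa = sum((u - ma) ** 2 for u in a)
    sbb = sum((v - mb) ** 2 for v in b)
    return sab / sqrt(saa * sbb)

def ranks(v):
    """Ranks 1..n; this data has no ties, so no tie handling is needed here."""
    order = sorted(range(len(v)), key=v.__getitem__)
    r = [0] * len(v)
    for rank, i in enumerate(order, start=1):
        r[i] = rank
    return r

def spearman(a, b):
    """Spearman correlation = Pearson correlation of the ranks."""
    return pearson(ranks(a), ranks(b))

r_pearson = pearson(x, y)
r_spearman = spearman(x, y)
print(f"Pearson:  {r_pearson:+.3f}")
print(f"Spearman: {r_spearman:+.3f}")
```

The Pearson correlation is driven almost entirely by the one far-out point, while the ranks barely notice it, which is why the two signs differ.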

> Stated differently, do I have to stick to using only parametric or only non-parametric methods for this variable (choosing one or the other)?

It's possible to do things that are both parametric and nonparametric at the same time (in different ways).

For example, you can fit straight lines using nonparametric correlations. One way to do this is to choose the slope that makes the nonparametric correlation between residuals and the predictor (IV, x-variable) equal to 0.

This is parametric in the relationship between x and y (it fits a straight line, which has two parameters) but the distributional model for the errors is nonparametric.
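As a rough illustration of the slope-choosing idea, here is a sketch in plain Python. The Spearman correlation between x and the residuals y - b*x is a non-increasing step function of the slope b, so a simple bisection can locate where it crosses zero. The function names, the bracketing interval built from the pairwise slopes, and the use of the median residual as the intercept are my own choices for the example, not the only way to do it.

```python
from itertools import combinations
from math import sqrt
from statistics import median

def average_ranks(v):
    """Ranks 1..n, with tied values sharing their average rank."""
    order = sorted(range(len(v)), key=v.__getitem__)
    r = [0.0] * len(v)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and v[order[j + 1]] == v[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1          # average of positions i..j, 1-based
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r

def spearman(a, b):
    """Pearson correlation of the ranks; returns 0.0 if either side is constant."""
    ra, rb = average_ranks(a), average_ranks(b)
    n = len(a)
    ma, mb = sum(ra) / n, sum(rb) / n
    sab = sum((u - ma) * (v - mb) for u, v in zip(ra, rb))
    saa = sum((u - ma) ** 2 for u in ra)
    sbb = sum((v - mb) ** 2 for v in rb)
    if saa == 0 or sbb == 0:
        return 0.0
    return sab / sqrt(saa * sbb)

def spearman_line(x, y, iters=100):
    """Slope at which Spearman(x, residuals) crosses zero, found by bisection."""
    slopes = [(y[j] - y[i]) / (x[j] - x[i])
              for i, j in combinations(range(len(x)), 2) if x[i] != x[j]]
    # Below every pairwise slope the correlation is +1, above every one it is -1.
    lo, hi = min(slopes) - 1.0, max(slopes) + 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        resid = [yi - mid * xi for xi, yi in zip(x, y)]
        if spearman(x, resid) > 0:
            lo = mid                   # slope still too small
        else:
            hi = mid
    slope = (lo + hi) / 2
    # Intercept convention (an assumption here): median of the residuals.
    intercept = median(yi - slope * xi for xi, yi in zip(x, y))
    return slope, intercept

# Toy check on made-up points lying exactly on y = 1 + 2x.
slope, intercept = spearman_line([0, 1, 2, 3, 4, 5], [1, 3, 5, 7, 9, 11])
print(slope, intercept)
```

Because the correlation changes in jumps, it may never be exactly zero; the bisection settles on the slope at which it changes sign.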

[There are also regressions that are parametric in the distributional model but nonparametric in the relationship between x and y; kernel or spline regression models, for example.]

If you use Kendall correlation instead, you essentially have a Theil-Sen regression line (about which there are many posts on this site).
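For the Kendall case there's a shortcut: the Theil-Sen slope is the median of the pairwise slopes, which is (essentially) the slope at which the Kendall correlation between x and the residuals is zero. A minimal sketch follows; the function name, the toy data, and the median-of-residuals intercept are my own choices (other intercept conventions exist). `scipy.stats.theilslopes` provides a ready-made version.

```python
from itertools import combinations
from statistics import median

def theil_sen(x, y):
    """Theil-Sen line: slope is the median of all pairwise slopes."""
    slopes = [(y[j] - y[i]) / (x[j] - x[i])
              for i, j in combinations(range(len(x)), 2) if x[i] != x[j]]
    slope = median(slopes)
    # Intercept convention (an assumption here): median of the residuals.
    intercept = median(yi - slope * xi for xi, yi in zip(x, y))
    return slope, intercept

# Made-up toy data: six points on y = 1 + 2x plus one gross outlier.
x_toy = [0, 1, 2, 3, 4, 5, 6]
y_toy = [1, 3, 5, 7, 9, 11, 100]
slope, intercept = theil_sen(x_toy, y_toy)
print(slope, intercept)
```

In this toy case the outlier affects only 6 of the 21 pairwise slopes, so the median slope stays on the line through the other six points.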

For a more detailed explanation of such an approach (including an example), see here:

If linear regression is related to Pearson's correlation, are there any regression techniques related to Kendall's and Spearman's correlations?

There are any number of robust or nonparametric or non-Gaussian-but-parametric ways to fit straight lines.

It should be possible to consider analyses that are more alike; if I don't have a good reason to assume normality in one instance, I don't have it in another*; and there's no reason you have to assume it in either.

It would be possible to consider a different parametric model, and to use both linear (Pearson) correlation and linear regression, or perhaps not to assume a parametric model and still assume linearity. It should also be possible not to assume linearity but something more general (like monotonicity) for both.

* though take care; what is assumed to be normal in each instance is not the same thing.