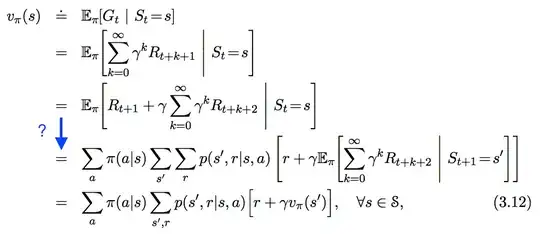

My question is similar to this one. I am trying to fill in the missing steps of the Bellman equation for a Markov Decision Process:

I will focus on the first term of the sum $R_{t+1} + \gamma \sum_{k=0}^{\infty}\gamma^kR_{t+k+2}.$

Here's my attempt:

I will focus on the first term of the sum $R_{t+1} + \gamma \sum_{k=0}^{\infty}\gamma^kR_{t+k+2}.$

Here's my attempt:

$$ \begin{align} & E_{\pi}[R_{t+1}|S_t=s] \\ &= \sum_{s'} \sum_a E_{\pi}[R_{t+1}|S_t=s, S_{t+1}=s',A_t=a]P(S_{t+1}=s',A_t=a)\\ & =\sum_{s', a, r} rP(R_{t+1}=r|S_t=s, S_{t+1}=s', A_t=a)P(S_{t+1}=s', A_t=a)\\ &= \sum_{s', a, r} r \frac{P(R_{t+1}=r,S_t=s, S_{t+1}=s', A_t=a)}{P(S_t=s, S_{t+1}=s',A_t=a)}P(S_{t+1}=s', A_t=a)\\ &= \sum_{s', a, r} r \frac{P(S_{t+1}=s', R_{t+1}=r|S_t=s, A_t=a)P(S_t=s,A_t=a)}{P(S_t=s, S_{t+1}=s',A_t=a)}P(S_{t+1}=s', A_t=a)\\ &= \sum_{s', a, r} r \frac{p(s',r|s,a)P(A_t=a|S_t=s)P(S_t=s))}{P(S_t=s, S_{t+1}=s',A_t=a)}P(S_{t+1}=s', A_t=a)\\ &= \sum_aP(A_t=a|S_t=s)\sum_{s',r}r p(s',r|s,a) \frac{P(S_t=s)P(S_{t+1}=s', A_t=a)}{P(S_t=s, S_{t+1}=s', A_t=a)} \end{align}$$

Some questions:

Is it true that $P(S_t=s, S_{t+1}=s', A_t=a) = P(S_t=s)P(S_{t+1}=s', A_t=a)$? I am under the impression that the next state depends on both the action taken and the previous state? I.e. are these events independent or not?

If we do have independence, then is my derivation correct? Is there a simpler way to show the result?

I'm also a bit confused about the two underlying distributions here: $p$ and $\pi$. Are they in no way related to each other? Isn't it possible that $p(s',r|s,a) = P(S_{t+1}=s', R_{t+1}=r|S_t=s,A_t=a)$ induces a distribution on $P(A_t=a|S_t=s) = \pi(a|s)$?