This question is somewhat involved, but I have tried to make it as straightforward as possible.

Goal: Long story short, there is a derivation of negentropy that does not involve higher order cumulants, and I am trying to understand how it was derived.

Background: (I understand all this)

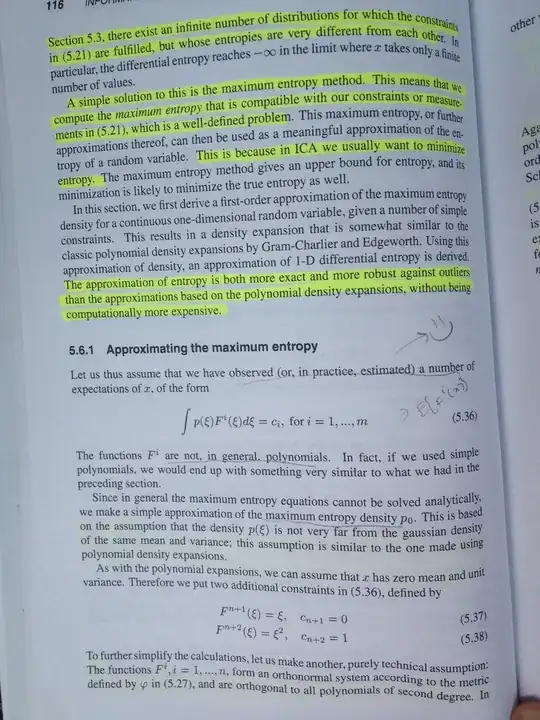

I am self-studying the book 'Independent Component Analysis', found here. (This question is from section 5.6, in case you have the book - 'Approximation of Entropy by Nonpolynomial Functions').

We have $x$, which is a random variable, and whose negentropy we wish to estimate, from some observations we have. The PDF of $x$ is given by $p_x(\zeta)$. Negentropy is simply the difference between the differential entropy of a standardized Gaussian random variable, and the differential entropy of $x$. The differential entropy here is given by $H$, such that:

$$ H(x) = -\int_{-\infty}^{\infty} p_x(\zeta) \, \log p_x(\zeta) \, d\zeta $$

and so, the negentropy is given by

$$J(x) = H(v) - H(x)$$

where $v$ is a standardized Gaussian r.v, with PDF given by $\phi(\zeta)$.
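As a sanity check on the two definitions above, here is a minimal sketch that evaluates $H$ and $J$ numerically. The unit-variance Laplace density is only an illustrative stand-in for $p_x$ (it is not from the book), and the helper names, integration limits, and grid size are arbitrary choices of this sketch.

```python
import math

def differential_entropy(pdf, lo, hi, n=200_000):
    """Approximate H = -integral of p*log(p) with a midpoint Riemann sum on [lo, hi]."""
    dz = (hi - lo) / n
    total = 0.0
    for k in range(n):
        z = lo + (k + 0.5) * dz
        p = pdf(z)
        if p > 0.0:  # p*log(p) -> 0 as p -> 0, so zero-density points contribute nothing
            total -= p * math.log(p) * dz
    return total

def gauss_pdf(z):
    """Standardized Gaussian density phi."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def laplace_pdf(z):
    """Zero-mean, unit-variance Laplace density (assumed example for p_x)."""
    b = 1.0 / math.sqrt(2.0)  # scale b gives variance 2*b**2 = 1
    return math.exp(-abs(z) / b) / (2.0 * b)

H_v = differential_entropy(gauss_pdf, -12.0, 12.0)    # entropy of the Gaussian v
H_x = differential_entropy(laplace_pdf, -30.0, 30.0)  # entropy of the example x
J_x = H_v - H_x                                       # negentropy J(x) = H(v) - H(x)
print(H_v, H_x, J_x)
```

For this example $H(v)$ should agree with the closed form $\frac{1}{2}\log(2\pi e)$, and $J(x)$ comes out positive (about 0.072), as expected for a non-Gaussian variable of unit variance.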

Now, as part of this new method, my book has derived an estimate of the PDF of $x$, given by:

$$ p_x(\zeta) = \phi(\zeta) [1 + \sum_{i} c_i \; F^{i}(\zeta)] $$

(Where $c_i = \mathbb{E}\{F^i(x)\}$. By the way, $i$ is not a power, but an index instead).

For now, I 'accept' this new PDF formula, and will ask about it another day. This is not my main issue. What he does now though, is plug this version of the PDF of $x$ back into the negentropy equation, and ends up with:

$$ J(x) \approx \frac{1}{2}\sum_i\mathbb{E} \{F^i(x)\}^2 $$

Bear in mind that the sigma (here and for the rest of the post) just runs over the index $i$. For example, if we only had two functions, the sum would run over $i=1$ and $i=2$. Of course, I should tell you about the functions he is using. The functions $F^i$ are defined as follows:

The functions $F^i$ are not polynomial functions in this case. (We assume that the r.v. $x$ is zero mean, and of unit variance). Now, let us make some constraints and give properties of those functions:

$$ F^{n+1}(\zeta) = \zeta, \: \: c_{n+1} = 0 $$

$$ F^{n+2}(\zeta) = \zeta^2, \: \: c_{n+2} = 1 $$

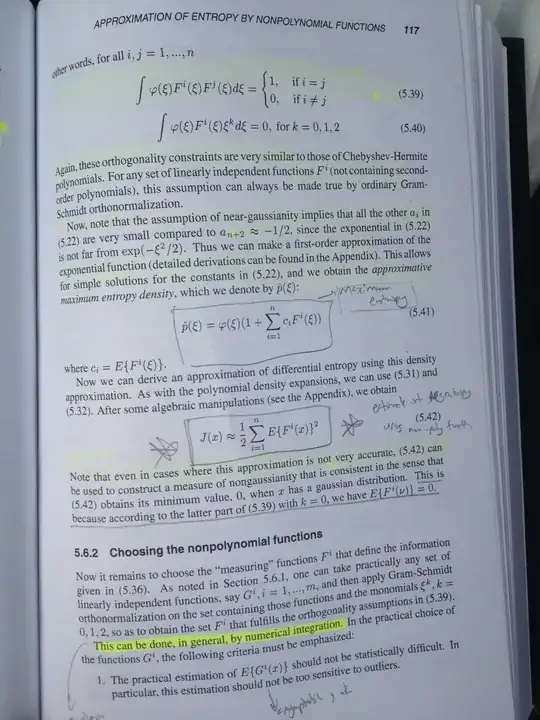

To simplify calculations, let us make another, purely technical assumption: The functions $F^i, \; i = 1, \ldots, n$, form an orthonormal system, as such:

$$ \int \phi(\zeta) F^i(\zeta)F^j(\zeta)d\zeta= \begin{cases} 1, \quad \text{if } i = j \\ 0, \quad \text{if } i \neq j \end{cases} $$

and

$$ \int \phi(\zeta) F^i(\zeta) \zeta^k \, d\zeta = 0, \quad \text{for } k = 0,1,2 $$
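To see what these two constraints demand in practice, here is a minimal sketch that checks them numerically. The two functions used are the normalized Hermite polynomials $He_3/\sqrt{6}$ and $He_4/\sqrt{24}$. They are only a convenient stand-in chosen for this sketch because they are known to satisfy both conditions; the book's nonpolynomial $F^i$ would first have to be orthogonalized to pass the same check. All helper names are hypothetical.

```python
import math

def phi(z):
    """Standardized Gaussian density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def gauss_integral(g, lo=-12.0, hi=12.0, n=20_000):
    """Approximate the integral of phi(z)*g(z) with a midpoint Riemann sum."""
    dz = (hi - lo) / n
    total = 0.0
    for k in range(n):
        z = lo + (k + 0.5) * dz
        total += phi(z) * g(z)
    return total * dz

# Assumed example functions (stand-ins, not the book's nonpolynomial choices).
def F1(z):
    return (z**3 - 3.0 * z) / math.sqrt(6.0)

def F2(z):
    return (z**4 - 6.0 * z**2 + 3.0) / math.sqrt(24.0)

F = [F1, F2]

# First constraint: the matrix of integrals of phi*F^i*F^j should be the identity.
gram = [[gauss_integral(lambda z, a=a, b=b: a(z) * b(z)) for b in F] for a in F]

# Second constraint: integrals of phi*F^i*z^k should vanish for k = 0, 1, 2.
cross = [[gauss_integral(lambda z, f=f, k=k: f(z) * z**k) for k in range(3)] for f in F]

print(gram)
print(cross)
```

Up to numerical error, `gram` is the 2x2 identity and every entry of `cross` is zero.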

Almost there! OK, so all that was the background, and now for the question. The task is to then, simply place this new PDF into the differential entropy formula, $H(x)$. If I understand this, I will understand the rest. Now, the book gives the derivation, (and I agree with it), but I get stuck towards the end, because I do not know/see how it is cancelling out. Also, I do not know how to interpret the small-o notation from the Taylor expansion.

This is the result:

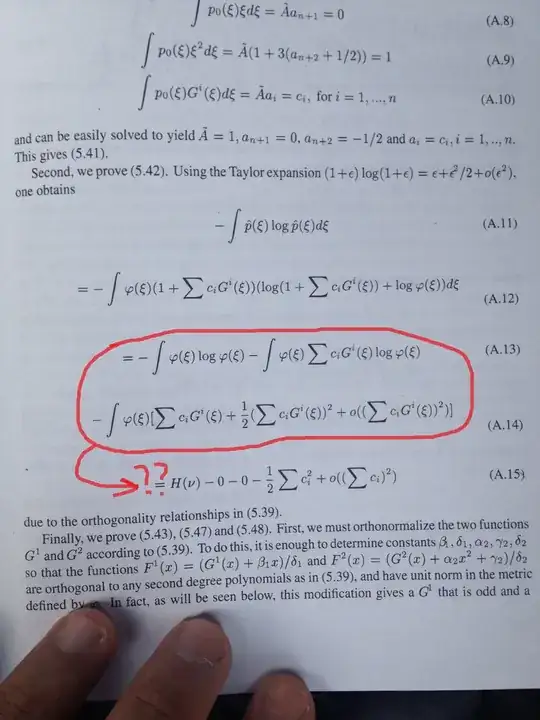

Using the Taylor expansion $(1+\epsilon)\log(1+\epsilon) = \epsilon + \frac{\epsilon^2}{2} + o(\epsilon^2)$, for $H(x)$ we get:

$$ \begin{aligned} H(x) &= -\int \phi(\zeta) \left(1 + \sum_i c_i F^i(\zeta)\right) \left(\log\left(1 + \sum_i c_i F^i(\zeta)\right) + \log\phi(\zeta)\right) d\zeta \\ &= -\int \phi(\zeta) \log\phi(\zeta) \, d\zeta - \int \phi(\zeta) \sum_i c_i F^i(\zeta) \log\phi(\zeta) \, d\zeta - \int \phi(\zeta) \left[\sum_i c_i F^i(\zeta) + \frac{1}{2}\left(\sum_i c_i F^i(\zeta)\right)^2 + o\left(\left(\sum_i c_i F^i(\zeta)\right)^2\right)\right] d\zeta \end{aligned} $$

and so

The Question: (I don't understand this) $$ H(x) = H(v) - 0 - 0 - \frac{1}{2}\sum_i c_i^2 + o\left(\left(\sum_i c_i\right)^2\right) $$

So, my problem: Except for the $H(v)$, I don't understand how he got the remaining 4 terms in the last equation (i.e., the 0, the 0, and the last 2 terms). I understand everything before that. He says he has exploited the orthogonality relationships given in the properties above, but I don't see how. (I also don't understand how the small-o notation is being used here.)
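On the small-o part specifically, the Taylor expansion $(1+\epsilon)\log(1+\epsilon) = \epsilon + \frac{\epsilon^2}{2} + o(\epsilon^2)$ can at least be examined numerically. The usual reading of $o(\epsilon^2)$ is a remainder that vanishes faster than $\epsilon^2$, so remainder$/\epsilon^2$ should tend to 0 as $\epsilon \to 0$. The sketch below only illustrates that reading for the scalar expansion; it says nothing about how the term behaves under the integral. The function name is hypothetical.

```python
import math

def remainder_ratio(eps):
    """Return [(1+eps)*log(1+eps) - eps - eps**2/2] / eps**2."""
    exact = (1.0 + eps) * math.log1p(eps)  # log1p keeps precision for small eps
    return (exact - eps - 0.5 * eps * eps) / (eps * eps)

# If the remainder is o(eps^2), these ratios should shrink toward 0 with eps.
ratios = [(eps, remainder_ratio(eps)) for eps in (1e-1, 1e-2, 1e-3, 1e-4)]
for eps, r in ratios:
    print(f"eps={eps:g}  remainder/eps^2={r:.3e}")
```

The printed ratios shrink roughly in proportion to $\epsilon$, which is consistent with the next term of the series being of order $\epsilon^3$.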

THANKS!!!!

EDIT:

I have gone ahead and added the images from the book I am reading. They pretty much say what I said above, but are included in case someone needs additional context.

And here, marked in red, is the exact part that is confusing me. How does he use the orthogonality properties to get that last part, where things are cancelling out, and the final summations involving $c_i^2$, and the small-o notation summation?