Modeling decisions should be based first on the science that underlies the question, with computer output used as a helpful tool.

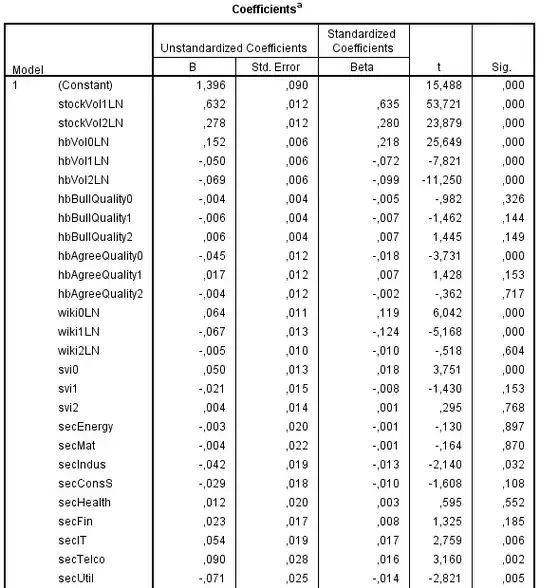

The above information is not enough to come to a final model, and may not be enough for the next step.

It is possible for 2 variables to both be non-significant when each is adjusted for the other terms in the model, yet for one to be very important once the other is deleted. Consider having as predictors height in inches and height in centimeters (with some rounding so that they are not exactly the same). A model that includes both will probably tell you that each is redundant given the other, but remove one and the other may be very important. Removing both because of high p-values would be a major mistake.
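The height example can be sketched with simulated data. Everything below is an illustrative assumption (the sample size, the made-up outcome `y`, the noise levels), and it compares $R^2$ values rather than p-values to stay within the standard library: the drop in $R^2$ from deleting one predictor while keeping the other is tiny, even though each predictor alone explains most of what the pair explains.

```python
import random

def pearson(a, b):
    """Sample Pearson correlation between two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    s_ab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    s_aa = sum((x - mean_a) ** 2 for x in a)
    s_bb = sum((y - mean_b) ** 2 for y in b)
    return s_ab / (s_aa * s_bb) ** 0.5

def r2_two_predictors(y, x1, x2):
    """R^2 of the least-squares fit of y on x1 and x2 (with intercept),
    computed from the three pairwise correlations."""
    r1, r2, r12 = pearson(y, x1), pearson(y, x2), pearson(x1, x2)
    return (r1**2 + r2**2 - 2 * r1 * r2 * r12) / (1 - r12**2)

random.seed(1)
# Hypothetical data: true height in cm, then two rounded measurements of it.
true_height = [random.gauss(170, 10) for _ in range(200)]
height_cm = [round(h) for h in true_height]          # rounded to whole cm
height_in = [round(h / 2.54) for h in true_height]   # rounded to whole inches
# Made-up outcome that depends on height plus noise.
y = [0.9 * h + random.gauss(0, 5) for h in true_height]

r2_cm = pearson(y, height_cm) ** 2      # model with centimeters only
r2_in = pearson(y, height_in) ** 2      # model with inches only
r2_both = r2_two_predictors(y, height_cm, height_in)

print("R^2, cm only:    ", round(r2_cm, 4))
print("R^2, inches only:", round(r2_in, 4))
print("R^2, both:       ", round(r2_both, 4))
print("gain from adding inches to cm:", round(r2_both - r2_cm, 4))
print("gain from adding cm to inches:", round(r2_both - r2_in, 4))
```

The two "gain" lines are what a test of each term adjusted for the other is looking at, which is why both can look dispensable at the same time.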

You can also have variables that work synergistically: each by itself is not very predictive, but together they are. The diameter of the arteries in the neck (which may be related to aneurysms and other blood-brain conditions) is related to the difference between systolic and diastolic blood pressure. Either measure on its own may have only a weak relationship with the diameter, but together the relationship can be much stronger.
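A small simulation shows how this can happen. The numbers are invented for illustration and are not physiological data: the outcome `y` is a hypothetical measurement driven by the difference between the two pressures, and that difference varies much less than either pressure does.

```python
import random

def pearson(a, b):
    """Sample Pearson correlation between two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    s_ab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    s_aa = sum((x - mean_a) ** 2 for x in a)
    s_bb = sum((y - mean_b) ** 2 for y in b)
    return s_ab / (s_aa * s_bb) ** 0.5

def r2_two_predictors(y, x1, x2):
    """R^2 of the least-squares fit of y on x1 and x2 (with intercept),
    computed from the three pairwise correlations."""
    r1, r2, r12 = pearson(y, x1), pearson(y, x2), pearson(x1, x2)
    return (r1**2 + r2**2 - 2 * r1 * r2 * r12) / (1 - r12**2)

random.seed(2)
n = 500
# Assumed setup: diastolic varies a lot between people, while the
# systolic-minus-diastolic difference varies only a little.
diastolic = [random.gauss(80, 12) for _ in range(n)]
difference = [random.gauss(40, 4) for _ in range(n)]
systolic = [d + p for d, p in zip(diastolic, difference)]
# Hypothetical outcome driven by the difference, plus measurement noise.
y = [p + random.gauss(0, 1) for p in difference]

r2_sys = pearson(y, systolic) ** 2
r2_dia = pearson(y, diastolic) ** 2
r2_both = r2_two_predictors(y, systolic, diastolic)

print("R^2, systolic only: ", round(r2_sys, 3))
print("R^2, diastolic only:", round(r2_dia, 3))
print("R^2, both:          ", round(r2_both, 3))
```

Screening predictors one at a time would discard both variables here, even though the pair carries nearly all of the signal.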

Also consider this case: you have 2 predictors, X1 and X2, and the statistical analysis suggests that you only need one of the 2 in your model and that X1 does a slightly better job of predicting your Y variable (maybe $R^2=0.81$ vs. $R^2=0.80$). But X2 is something quick, easy, and non-invasive to measure (temperature or blood pressure), while X1 is the result of a lab test that takes several hours on a biopsy that requires major surgery to obtain (and would not be done most times other than to collect X1). Which is the better predictor to use?

Before deciding on a final model, you need to spend more time deciding why you are doing the modeling (understanding relationships, prediction, etc.) and on the science behind the question and data. You should probably also spend more time in a regression class or with a textbook: just ignoring the "non-significant" predictors is not the same as fitting the model with only the significant predictors.
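The last point can be checked numerically. The sketch below uses simulated data with assumed coefficients (2.0 and 0.3) and a small sample, chosen so that `x2` has a modest effect that would often fail a significance test; no p-values are computed. It compares the coefficient on `x1` read off the two-predictor fit with the coefficient obtained after refitting with `x1` alone.

```python
import random

def centered_cross_sum(u, v):
    """Sum of (u - mean(u)) * (v - mean(v)) over two equal-length lists."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    return sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))

random.seed(3)
n = 40
# Hypothetical correlated predictors; x2 has a small true effect on y.
x1 = [random.gauss(0, 1) for _ in range(n)]
x2 = [0.8 * a + 0.6 * random.gauss(0, 1) for a in x1]
y = [2.0 * a + 0.3 * b + random.gauss(0, 1) for a, b in zip(x1, x2)]

s11 = centered_cross_sum(x1, x1)
s22 = centered_cross_sum(x2, x2)
s12 = centered_cross_sum(x1, x2)
s1y = centered_cross_sum(x1, y)
s2y = centered_cross_sum(x2, y)

# Least-squares slopes for y ~ x1 + x2 (closed form for two predictors).
det = s11 * s22 - s12**2
b1_full = (s1y * s22 - s2y * s12) / det
b2_full = (s2y * s11 - s1y * s12) / det

# Least-squares slope for the refitted model y ~ x1.
b1_reduced = s1y / s11

print("x1 coefficient, full model:  ", round(b1_full, 3))
print("x2 coefficient, full model:  ", round(b2_full, 3))
print("x1 coefficient, refit x1 only:", round(b1_reduced, 3))
```

The refitted slope equals `b1_full + b2_full * s12 / s11`, so whenever the predictors are correlated, dropping `x2` shifts the `x1` coefficient; reporting `b1_full` while pretending `x2` is not in the model describes a fit that was never made.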