Regularization does not guarantee to reduce overfit.

Regularization reduces overfit in many cases because in these cases the real data model (e.g., physics models) have small weights. Reguarlization is a way to inject this knowledge in our model. It weeds out those models that have large weights, which tend to be models that overfit.

However, in simulation, you can definitely construct a model that have large weights and generate data from it. Regularization may not work well with this kind of data. Regularization will create a large bias in this case, I guess.

But this kind of data is rare in real world.

The intuition what I think of is that not much weight is given to a particular feature. But isn't it sometimes necessary to focus on one feature (like in the above example x1)?

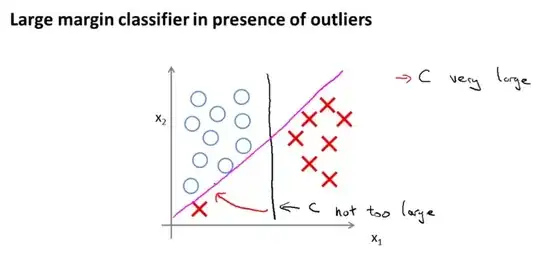

Edit: You can make the classifier give more weight to $x1$ by scaling $x1$ back by a factor of, say, 10. Then the black boundary will get very close to the magenta boundary. This is equivalent to discounting the component on the $x1$ dimension in the calculation of the Euclidean distance, but what makes the red cross on the left an outlier is largely because it is distant from the rest of the datapoints in the same class, in $x1$ dimension. By scaling $x1$ down, we are reducing the contribution of the outlier in the total loss.

Actually, we cannot tell from this example whether the magenta boundary is an overfit. This is the same as saying that we cannot be sure if the red cross on the bottom left is an outlier. We have to see other samples (like a test set) to be more certain.

Just for the sake of discussion, suppose we are sure that the red cross on the left is indeed an outlier. The problem, I believe, if not that we are not giving enough weight on one particular feature, it is that with a hard margin classifier, the decision boundary is sensitive to outliers, because most of the cost comes from one single datapoint. Regularization does not really help in this situation. A soft-margin classifier, which involves more datapoints in determining the decision boundary, will result in a boundary close to the one marked in black.