I'm reading about best subset selection in *The Elements of Statistical Learning*. If I have 3 predictors $x_1, x_2, x_3$, I create $2^3 = 8$ subsets:

- subset with no predictors

- subset with predictor $x_1$

- subset with predictor $x_2$

- subset with predictor $x_3$

- subset with predictors $x_1,x_2$

- subset with predictors $x_1,x_3$

- subset with predictors $x_2,x_3$

- subset with predictors $x_1,x_2,x_3$

Then I fit each of these models and evaluate them on the test data to choose the best one.
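To make the procedure concrete, here is a minimal sketch of how I understand it. The simulated data, the train/test split, and the helper names `all_subsets` and `fit_and_score` are my own assumptions for illustration, not something taken from the book.

```python
from itertools import combinations

import numpy as np


def all_subsets(n_predictors):
    """Return every subset of predictor indices, from the empty set to the full set."""
    idx = range(n_predictors)
    return [s for k in range(n_predictors + 1) for s in combinations(idx, k)]


def fit_and_score(X_train, y_train, X_test, y_test, subset):
    """Fit OLS (with intercept) on the chosen columns and return the test MSE."""
    def design(X):
        # Intercept column plus the selected predictors (possibly none).
        return np.column_stack([np.ones(len(X)), X[:, list(subset)]])

    beta, *_ = np.linalg.lstsq(design(X_train), y_train, rcond=None)
    resid = y_test - design(X_test) @ beta
    return float(np.mean(resid ** 2))


# Simulated data (an assumption): y depends on x_1 and x_3 only.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = 2.0 * X[:, 0] - 1.0 * X[:, 2] + rng.normal(scale=0.5, size=200)
X_train, X_test = X[:100], X[100:]
y_train, y_test = y[:100], y[100:]

subsets = all_subsets(3)  # 2**3 = 8 candidate models
scores = {s: fit_and_score(X_train, y_train, X_test, y_test, s) for s in subsets}
best = min(scores, key=scores.get)
print("best subset (0-based column indices):", best)
```

Note that the loop fits $2^p$ models, so the number of fits doubles with every additional predictor.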

Now my question is: why is best subset selection not favored over, e.g., the lasso?

If I compare the thresholding functions of best subset and lasso, I see that the best subset sets some of the coefficients to zero, like lasso.

But the other (non-zero) coefficients will still have their OLS values, so they will be unbiased. In lasso, by contrast, some of the coefficients will be zero and the others (the non-zero ones) will have some bias.
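As far as I understand, in the special case of orthonormal predictors the two rules described above reduce to hard thresholding (best subset of size $M$) and soft thresholding (lasso with penalty $\lambda$) of the OLS coefficients. A small sketch under that assumption; the function names and the example numbers are made up for illustration:

```python
import numpy as np


def hard_threshold(beta_ols, M):
    """Best subset of size M (orthonormal case): keep the M largest |coefficients| as is."""
    beta_ols = np.asarray(beta_ols, dtype=float)
    if M <= 0:
        return np.zeros_like(beta_ols)
    cutoff = np.sort(np.abs(beta_ols))[-M]  # M-th largest absolute value
    return np.where(np.abs(beta_ols) >= cutoff, beta_ols, 0.0)


def soft_threshold(beta_ols, lam):
    """Lasso (orthonormal case): shrink every coefficient toward zero by lam."""
    beta_ols = np.asarray(beta_ols, dtype=float)
    return np.sign(beta_ols) * np.maximum(np.abs(beta_ols) - lam, 0.0)


beta_ols = np.array([3.0, -0.4, 1.5])  # hypothetical OLS estimates
hard = hard_threshold(beta_ols, M=2)
soft = soft_threshold(beta_ols, lam=0.5)
print("best subset:", hard)
print("lasso:      ", soft)
```

Both rules zero out the small coefficient $-0.4$, but the hard rule leaves $3.0$ and $1.5$ untouched, while the soft rule shrinks them to $2.5$ and $1.0$.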

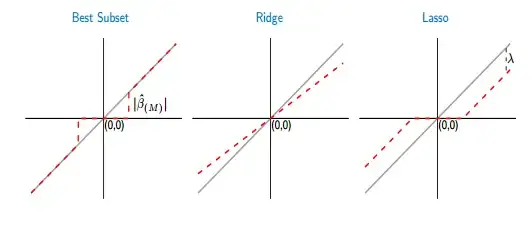

The figure below shows it better:

In the picture, part of the red line in the best subset case lies on the gray line; the other part lies on the x-axis, where some of the coefficients are zero. The gray line defines the unbiased solutions. In lasso, some bias is introduced by $\lambda$. From this figure, it looks to me as if best subset is better than lasso! What are the disadvantages of using best subset?