I coded my PDF function for the multivariate Gaussian (3D) like this:

    import numpy as np

    def Gaussian3DPDF(v, mu, sigma):
        N = len(v)
        # The normalization uses the determinant of the covariance matrix, not its norm
        G = 1 / ((2 * np.pi)**(N / 2) * np.linalg.det(sigma)**0.5)
        G *= np.exp(-0.5 * (v - mu).T.dot(np.linalg.inv(sigma)).dot(v - mu))
        return G

It works pretty well and it's quite fast. But I now want to fit my data to this function using a least-squares optimizer, and to be efficient I need to be able to pass an Mx3 matrix ( [[x0,y0,z0],[x1,...],...] ) and get back one PDF value per row.

However, my dot product breaks down because the shapes are no longer 3 dot 3x3 dot 3 but 3xM dot 3x3 dot Mx3.

I'm not very good with linear algebra.

Is there a trick I am not aware of?

Thanks a lot

PS: Looping over each coordinate works, but it is far too slow for fitting the large amount of data I have.

PPS: I found out about SciPy's `scipy.stats.multivariate_normal` function. It works fine! Though I'm still interested if anyone knows the answer! :D

EDIT:

The code to do this in Python without writing the linear algebra by hand:

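    import numpy as np
    from scipy.optimize import curve_fit
    from scipy.stats import multivariate_normal

    # cube = np.array of dimension NxMxO

    def Gaussian3DPDFFunc(X, mu0, mu1, mu2, s0, s1, s2, A):
        mu = np.array([mu0, mu1, mu2])
        # Diagonal covariance: s0, s1, s2 are the variances along each axis
        Sigma = np.array([[s0, 0, 0], [0, s1, 0], [0, 0, s2]])
        res = multivariate_normal.pdf(X, mean=mu, cov=Sigma)
        res *= A    # amplitude
        res += 100  # constant offset, hard-coded
        return res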

    def FitGaussian(cube):
        # prepare the data for curve_fit: one [i, j, k] row per voxel
        X = []
        Y = []
        for i in range(cube.shape[0]):
            for j in range(cube.shape[1]):
                for k in range(cube.shape[2]):
                    X.append([i, j, k])
                    Y.append(cube[i][j][k])
        # lower and upper bounds for (mu0, mu1, mu2, s0, s1, s2, A)
        bounds = [[3, 3, 3, 3, 3, 3, 50], [cube.shape[0] - 3, cube.shape[1] - 3, cube.shape[2] - 3, 30, 30, 30, 100000]]
        p0 = [cube.shape[0] / 2, cube.shape[1] / 2, cube.shape[2] / 2, 10, 10, 10, 100]
        popt, pcov = curve_fit(Gaussian3DPDFFunc, X, Y, p0, bounds=bounds)
        mu = [popt[0], popt[1], popt[2]]
        sigma = [[popt[3], 0, 0], [0, popt[4], 0], [0, 0, popt[5]]]
        A = popt[6]
        res = multivariate_normal.pdf(X, mean=mu, cov=sigma)
        return mu, sigma, A, res
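
The triple loop that builds `X` and `Y` can probably be replaced by a vectorized construction. The sketch below is one way to do it, assuming `cube` is a 3D NumPy array; the helper name `cube_to_xy` is made up for illustration and is not part of the code above.

```python
import numpy as np

def cube_to_xy(cube):
    """Flatten a 3D array into (M, 3) voxel indices and (M,) values."""
    # np.indices gives an array of shape (3, N, M, O) with the i, j, k index of each voxel
    idx = np.indices(cube.shape)
    # One [i, j, k] row per voxel, in the same order as the nested loops (k varies fastest)
    X = idx.reshape(3, -1).T
    # Values flattened in the matching C order
    Y = cube.ravel()
    return X, Y
```

The returned `X` and `Y` should be usable in place of the lists passed to `curve_fit`, since they hold the same rows in the same order.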

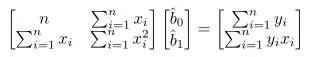

For the linear algebra version, look below! Really cool.
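
For reference, here is a minimal sketch of one way to evaluate the PDF on an Mx3 matrix in a single call. It computes the quadratic form (v - mu)^T Sigma^-1 (v - mu) separately for each row with `np.einsum`, instead of forming the full MxM product. The function name `gaussian_pdf_batch` is hypothetical, and this is only one possible approach, not necessarily the one given in the answers.

```python
import numpy as np

def gaussian_pdf_batch(V, mu, sigma):
    """Evaluate a multivariate Gaussian PDF at every row of V, shape (M, N)."""
    V = np.atleast_2d(V)
    N = V.shape[1]
    diff = V - mu                     # (M, N); mu broadcasts over the rows
    sigma_inv = np.linalg.inv(sigma)  # (N, N)
    # Row-wise quadratic form: sum_jk diff[i, j] * sigma_inv[j, k] * diff[i, k]
    quad = np.einsum("ij,jk,ik->i", diff, sigma_inv, diff)
    # Normalization uses the determinant of the covariance matrix
    norm = (2 * np.pi) ** (N / 2) * np.sqrt(np.linalg.det(sigma))
    return np.exp(-0.5 * quad) / norm
```

Calling `gaussian_pdf_batch(points, mu, sigma)` with `points` of shape (M, 3) returns an array of M values, one per row, which is the shape a least-squares fit expects from the model function.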