I am using KDE with a modified metric for the distance. The PDF is as expected (see below: color is the probability and the dot is the point used to fit the KDE). But due to the new metric, I cannot use the usual sampling methods as they suppose a gaussian kernel with $(||\textbf{x}-\textbf{x}_i||)$. Here I have like something like $1/(||\textbf{x}-\textbf{x}_i||)$. So, the new kernel would looks like:

$$K(\textbf{x}-\textbf{x}_i) = \exp\left(\frac{-1}{||\textbf{x}-\textbf{x}_i||*h}\right)$$

This kernel does not integrate to 1 so I bound it in the unit hypercube and I want to sample in this hypercube.

- How to generate new sample in this context?

From what I read (Find CDF from an estimated PDF (estimated by KDE), for instance), I have to come up with the multivariate inverse CDF.

- Is there a simple way to do this?

For now I just use a hack which consists in:

- Sample the multivariate space and get PDF values

- Then I use these two information with a uniform random generator to give me a sample.

It works but will get the curse of dimensionality. Something like this: https://stackoverflow.com/q/25642599

Even the CDF in a multivariate space would be enough I guess as I would be able to use some fixed point for example to do the inverse.

EDIT

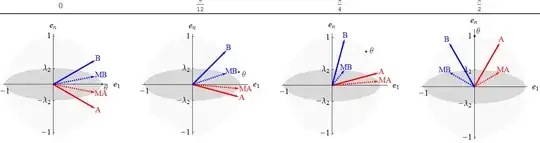

Here is the result of this experiment. Color is the probability, black point is the point used for fitting the KDE and red points are samples generated using Metropolis-Hasting MCMC.