Let's take a simple example to illustrate how both approaches work.

Imagine that you have 3 classifiers (1, 2, 3) and two classes (A, B), and after training you are predicting the class of a single point.

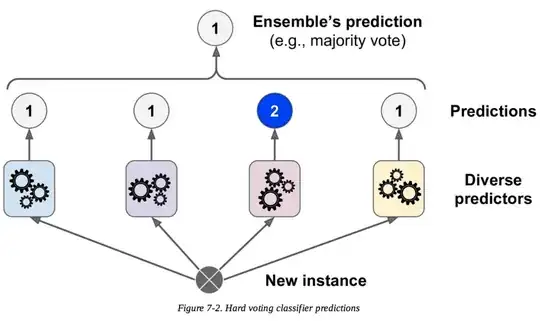

Hard voting

Predictions:

Classifier 1 predicts class A

Classifier 2 predicts class B

Classifier 3 predicts class B

2/3 classifiers predict class B, so class B is the ensemble decision.

Soft voting

Predictions

(This is identical to the earlier example, but now expressed in terms of probabilities. Values shown only for class A here because the problem is binary):

Classifier 1 predicts class A with probability 99%

Classifier 2 predicts class A with probability 49%

Classifier 3 predicts class A with probability 49%

The average probability of belonging to class A across the classifiers is (99 + 49 + 49) / 3 = 65.67%. Therefore, class A is the ensemble decision.

So you can see that in the same case, soft and hard voting can lead to different decisions. Soft voting can improve on hard voting because it takes into account more information; it uses each classifier's uncertainty in the final decision. The high uncertainty in classifiers 2 and 3 here essentially meant that the final ensemble decision relied strongly on classifier 1.

This is an extreme example, but it's not uncommon for this uncertainty to alter the final decision.