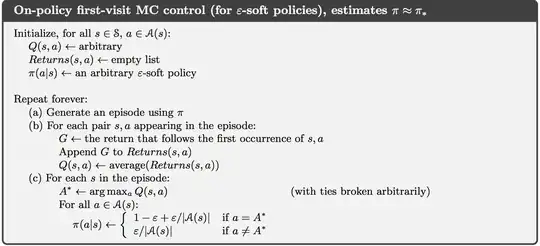

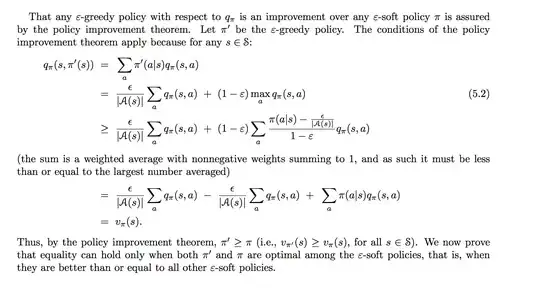

I'm confused about one step of the e-greedy Monte Carlo control proof on page 83 of Sutton and Barto Reinforcement Learning.

The book annotates saying "(the sum is a weighted average with nonnegative weights summing to 1, and as such it must be less than or equal to the largest number averaged)"

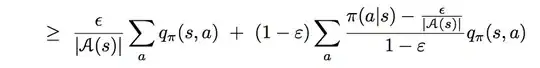

I understand that this substitution into the summation is essentially a max in the context of this problem with some properties allowing it to simplify nicely.

My question is why does this substitution make the right side of the equation less than or equal to the state-action value q(a,s)?

My thinking was because this weight acts like a max and because the value function is following the same policy, wouldn't this continue to be equal to q(a,s)?

I've included the full proof from Sutton and Barto and the algorithm below. Thanks!