Batch size and the number of iterations are considered as a tradeoff.

It has been observed in practice that when using a larger batch there is a significant degradation in the quality of the model, as measured by its ability to generalize.

(...)

In contrast, small-batch methods consistently converge to flat minimizers, and our experiments support a commonly held view that this is due to the inherent noise in the gradient estimation.

source, found on this thread answer by Frank Dernoncourt.

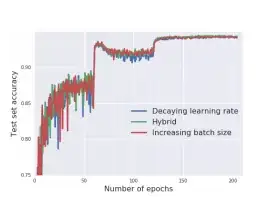

What if, like when we reduce the learning rate when approaching local minima, we increase the batch size over the number of epochs ? Or increasing it when the loss isn't moving significantly (Netsterov like) ?