Let $X_1, \dots, X_n$ be i.i.d. $\text{Uniform}[-\theta,\theta]$ with $\theta > 0$. I need to find a complete sufficient statistic for $\theta$ or prove that none exists.

I know that $T = (X_{(1)}, X_{(n)})$ is a sufficient statistic for $\theta$, but it is not complete.
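For reference, the sufficiency of $T$ follows from the factorization theorem, since the joint density can be written as

$$
f(x_1,\dots,x_n \mid \theta) = \prod_{i=1}^{n} \frac{1}{2\theta}\,\mathbf{1}\{-\theta \le x_i \le \theta\} = \frac{1}{(2\theta)^n}\,\mathbf{1}\{-\theta \le x_{(1)}\}\,\mathbf{1}\{x_{(n)} \le \theta\},
$$

which depends on the data only through $(x_{(1)}, x_{(n)})$.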

I want to prove this. First I tried to use Basu's theorem, but in this case the range $R = X_{(n)} - X_{(1)}$ is not an ancillary statistic.
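A quick Monte Carlo check shows why $R$ fails to be ancillary: its distribution scales with $\theta$, and in particular $E_\theta[R] = 2\theta\,\frac{n-1}{n+1}$. This is a sketch, not a proof; the helper name `mean_range`, the seed, the sample size $n = 5$ and the replicate count are arbitrary choices made for illustration.

```python
import random


def mean_range(theta, n, reps=50_000, seed=0):
    """Monte Carlo estimate of E[X_(n) - X_(1)] for n iid Uniform[-theta, theta] draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        x = [rng.uniform(-theta, theta) for _ in range(n)]
        total += max(x) - min(x)  # sample range R of this replicate
    return total / reps


n = 5
for theta in (1.0, 2.0, 4.0):
    exact = 2 * theta * (n - 1) / (n + 1)  # E[R] = 2*theta*(n-1)/(n+1)
    print(f"theta={theta}: simulated E[R]={mean_range(theta, n):.4f}, exact={exact:.4f}")
```

The simulated mean of $R$ grows linearly in $\theta$, so the distribution of $R$ cannot be free of $\theta$.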

So I tried to prove it using the definition of a complete statistic.
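For reference, the definition in question: a statistic $T$ is complete for the family $\{P_\theta : \theta > 0\}$ if, for every measurable $g$,

$$
E_\theta\big[g(T)\big] = 0 \ \text{ for all } \theta > 0 \quad\Longrightarrow\quad P_\theta\big(g(T) = 0\big) = 1 \ \text{ for all } \theta > 0.
$$

So to show that $T$ is *not* complete, it suffices to exhibit a single function $g$ with $E_\theta[g(T)] = 0$ for every $\theta > 0$ but $P_\theta\big(g(T) \neq 0\big) > 0$ for some $\theta$.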

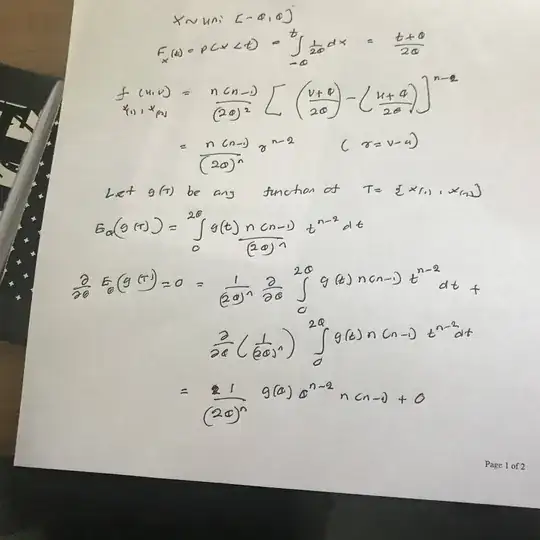

Here I have attached my work so far:

But proceeding this way, it seems that I am going to end up proving that $T$ *is* a complete sufficient statistic.
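As a numerical sanity check of the claim that $T$ is not complete, one candidate is $g(T) = X_{(1)} + X_{(n)}$. Because $X_i$ and $-X_i$ have the same distribution, $X_{(1)}$ has the same distribution as $-X_{(n)}$, so $E_\theta[X_{(1)} + X_{(n)}] = 0$ for every $\theta$, yet $X_{(1)} + X_{(n)}$ is not almost surely $0$. The simulation below only illustrates this; the helper name `midrange_sum_stats`, the seed, the threshold $0.01\,\theta$ and the replicate count are arbitrary choices.

```python
import random


def midrange_sum_stats(theta, n, reps=50_000, seed=1):
    """Return (mean of S, fraction of replicates with |S| > 0.01*theta), where S = X_(1) + X_(n)."""
    rng = random.Random(seed)
    total = 0.0
    nonzero = 0
    for _ in range(reps):
        x = [rng.uniform(-theta, theta) for _ in range(n)]
        s = min(x) + max(x)  # g(T) = X_(1) + X_(n) for this replicate
        total += s
        if abs(s) > 0.01 * theta:
            nonzero += 1
    return total / reps, nonzero / reps


n = 5
for theta in (1.0, 2.0, 4.0):
    mean_s, frac = midrange_sum_stats(theta, n)
    print(f"theta={theta}: mean of X_(1)+X_(n) = {mean_s:+.4f}, P(|S| > 0.01*theta) = {frac:.3f}")
```

For each $\theta$ the simulated mean is close to $0$, while almost every replicate gives a clearly nonzero value of $X_{(1)} + X_{(n)}$.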

Can someone help me figure out what I did incorrectly?