I use LSTM network in Keras. During the training, the loss fluctuates a lot, and I do not understand why that would happen.

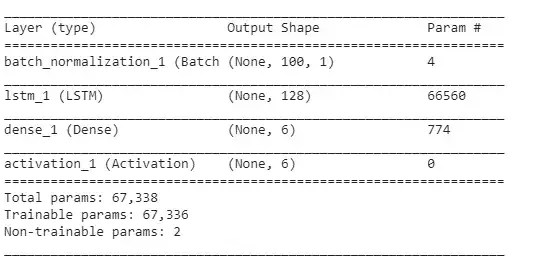

Here is the NN I was using initially:

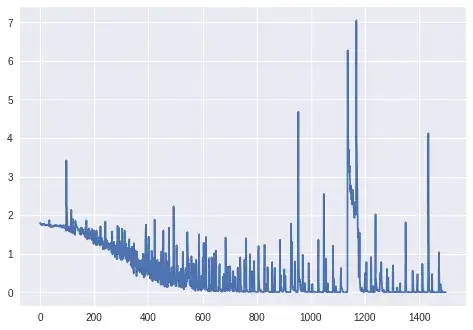

And here are the loss&accuracy during the training:

(Note that the accuracy actually does reach 100% eventually, but it takes around 800 epochs.)

I thought that these fluctuations occur because of Dropout layers / changes in the learning rate (I used rmsprop/adam), so I made a simpler model:

I also used SGD without momentum and decay. I have tried different values for lr but still got the same result.

sgd = optimizers.SGD(lr=0.001, momentum=0.0, decay=0.0, nesterov=False)

But I still got the same problem: loss was fluctuating instead of just decreasing. I have always thought that the loss is just suppose to gradually go down but here it does not seem to behave like that.

So:

Is it normal for the loss to fluctuate like that during the training? And why it would happen?

If not, why would this happen for the simple LSTM model with the

lrparameter set to some really small value?

Thanks. (Please, note that I have checked similar questions here but it did not help me to resolve my issue.)

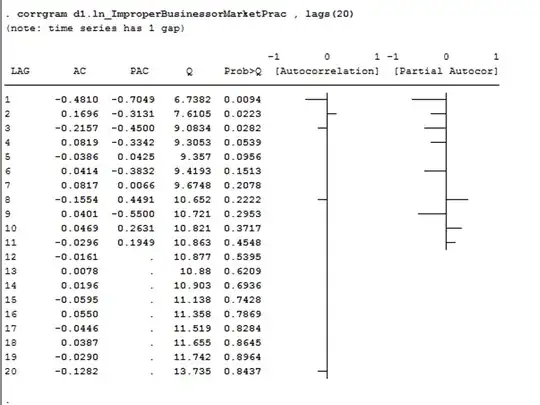

Upd.: loss for 1000+ epochs (no BatchNormalization layer, Keras' unmodifier RmsProp):

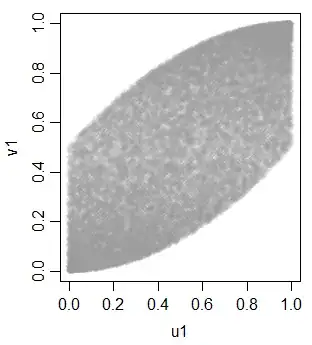

Upd. 2: For the final graph:

model.compile(loss='categorical_crossentropy', optimizer='rmsprop', metrics=['accuracy'])

history = model.fit(train_x, train_y, epochs = 1500)

Data: sequences of values of the current (from the sensors of a robot).

Target variables: the surface on which robot is operating (as a one-hot vector, 6 different categories).

Preprocessing:

- changed the sampling frequency so the sequences are not too long (LSTM does not seem to learn otherwise);

- cut the sequences in the smaller sequences (the same length for all of the smaller sequences: 100 timesteps each);

- check that each of 6 classes has approximately the same number of examples in the training set.

No padding.

Shape of the training set (#sequences, #timesteps in a sequence, #features):

(98, 100, 1)

Shape of the corresponding labels (as a one-hot vector for 6 categories):

(98, 6)

Layers:

The rest of the parameters (learning rate, batch size) are the same as the defaults in Keras:

keras.optimizers.RMSprop(lr=0.001, rho=0.9, epsilon=None, decay=0.0)

batch_size: Integer or None. Number of samples per gradient update. If unspecified, it will default to 32.

Upd. 3:

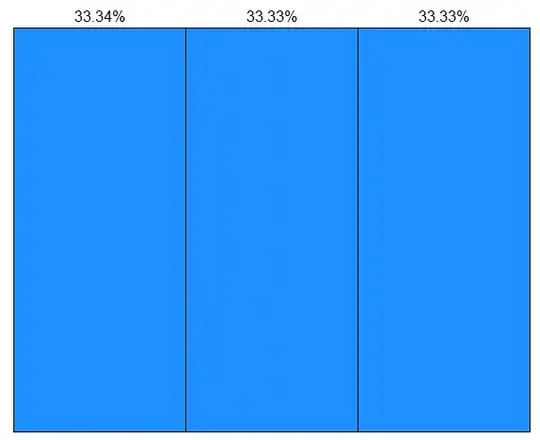

The loss for batch_size=4:

For batch_size=2 the LSTM did not seem to learn properly (loss fluctuates around the same value and does not decrease).

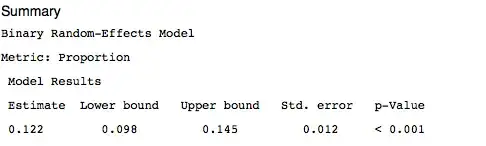

Upd. 4: To see if the problem is not just a bug in the code: I have made an artificial example (2 classes that are not difficult to classify: cos vs arccos). Loss and accuracy during the training for these examples: