In general it is said that the more data you take, the better. Does that hold for time series as well?

Yes, it does.

Patterns change a lot over time, so it may not be wise to consider data points that are too old, say older than 2 years.

Yes, patterns do change over time; however, the more sophisticated forecasting methods are able to take that into account, so you should still feed them as much data as possible. The only situation where you would be feeding "too much data" to your model is if you reach the point where you overwhelm the computational resources available to you. That is not going to happen with 5 years of monthly data (i.e. 60 data points).

As for the long-term changing patterns, a time series is typically decomposed into three components: a trend (the long-term variation), a seasonal component, and residuals. Some methods, like triple (seasonal) exponential smoothing models, try to model these components directly. There are also double exponential smoothing models, which consider a trend but not seasonality.
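To make the three-component idea concrete, here is a minimal sketch of classical additive decomposition (series = trend + seasonal + residual) in plain Python, assuming monthly data with a seasonal period of 12. The helper `classical_decompose` is hypothetical and written only for illustration; it estimates the trend with a centered moving average, which is a different technique from exponential smoothing. For real work, statsmodels offers `seasonal_decompose`.

```python
def classical_decompose(series, period=12):
    """Additive decomposition: series[t] = trend[t] + seasonal[t] + residual[t].

    trend:    centered moving average (a 2 x period MA when period is even)
    seasonal: mean detrended value for each position in the cycle, centered on zero
    residual: what is left over; None where the trend is undefined (the edges)
    """
    n = len(series)
    half = period // 2
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half:t + half + 1]
        if period % 2 == 0:
            # Even period: the two end points of the window share one weight.
            total = sum(window[1:-1]) + 0.5 * (window[0] + window[-1])
        else:
            total = sum(window)
        trend[t] = total / period

    # Average the detrended values that fall on the same position in the cycle.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    if any(not bucket for bucket in buckets):
        raise ValueError("series too short for this period")
    means = [sum(bucket) / len(bucket) for bucket in buckets]
    offset = sum(means) / period  # force the seasonal effects to sum to zero
    seasonal = [means[t % period] - offset for t in range(n)]

    residual = [None if trend[t] is None else series[t] - trend[t] - seasonal[t]
                for t in range(n)]
    return trend, seasonal, residual


# Usage: 5 years of synthetic monthly data (linear trend + fixed yearly pattern).
pattern = [3, 1, -2, -4, 0, 2, 5, 1, -1, -3, -2, 0]
sales = [100 + 2 * t + pattern[t % 12] for t in range(60)]
trend, seasonal, residual = classical_decompose(sales, period=12)
print(trend[30], seasonal[:12])
```

Note that the moving average leaves the trend undefined for the first and last `period // 2` points of the series.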

Another way of looking at it is in terms of stationarity. A (weakly) stationary time series is one whose statistical properties (mean, variance, and autocovariance at a given lag) remain the same over time. To your point, real-life time series data changes over time and is usually non-stationary. So some methods (notably ARIMA models) first transform the data into a stationary time series using differencing, predict the future values of the stationary series, and then reverse the transformation on the forecasts (integrating the differences) to bring the long-term variation back into the predictions.
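A minimal sketch of the difference, forecast, invert cycle described above, in plain Python. The forecaster in the middle is deliberately trivial (it predicts the average first difference, i.e. a drift rule) and is not an ARIMA model; the helper names `difference`, `undifference`, and `drift_forecast` are hypothetical.

```python
def difference(series, lag=1):
    """x[t] - x[t - lag]; lag=1 removes a linear trend, lag=12 a stable yearly pattern in monthly data."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def undifference(history, diff_forecasts, lag=1):
    """Reverse the differencing: each new level = value `lag` steps earlier + forecast difference."""
    values = list(history)
    for d in diff_forecasts:
        values.append(values[-lag] + d)
    return values[len(history):]


def drift_forecast(series, horizon):
    """Toy forecaster: difference once, predict the mean difference, then invert."""
    diffs = difference(series, lag=1)
    mean_diff = sum(diffs) / len(diffs)  # "forecast" of the stationary (differenced) series
    return undifference(series, [mean_diff] * horizon, lag=1)


# Usage: a steadily growing series is non-stationary, but its first differences are constant.
sales = [10, 12, 14, 16, 18]
print(difference(sales))         # the stationary series
print(drift_forecast(sales, 3))  # forecasts back on the original scale
```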

As already mentioned, I am looking for a statistical method (if one exists) to find an optimal time window for training.

You need to be careful here, because your question seems to conflate two separate concepts: the optimal time window for training is not the same thing as the amount of data you should use.

- The overall amount of data that you should use to train your model is all of it, as I explained above.

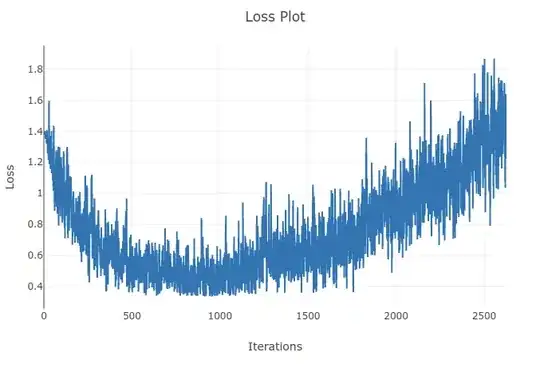

- The optimal time window for training is not a fixed concept; it depends on the method you use for forecasting and the business case you are dealing with. For 5 years of monthly sales data, your data will most likely have a seasonality of 12 (a yearly cycle). On top of that, some forecasting methods require you to specify the number of lagged time periods used to build the model; this is the case with ARIMA models and neural-network-based forecasting models, for example.

Again, the number of lagged time periods is not the same thing as the total amount of historical data used. Using $k$ lagged time periods assumes that at any given point in time, the value of the series $X_t$ is determined at most by the values $X_{t-1}, X_{t-2}, \ldots, X_{t-k}$.
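The difference between the lag window and the amount of history can be sketched by turning a series into (lagged features, target) rows, the kind of table that autoregressive and neural-network forecasters are typically trained on. The helper `make_lagged_dataset` is hypothetical:

```python
def make_lagged_dataset(series, k):
    """Turn one series into (features, target) rows for a model that uses k lags.

    Each row only looks back k steps (the "window"), but a row is built for
    every time point that has k predecessors, so the whole history is used.
    """
    if not 1 <= k < len(series):
        raise ValueError("need 1 <= k < len(series)")
    features, targets = [], []
    for t in range(k, len(series)):
        features.append([series[t - j] for j in range(1, k + 1)])  # X_{t-1}, ..., X_{t-k}
        targets.append(series[t])                                  # X_t
    return features, targets


# Usage: 60 monthly observations with a window of k = 3 lags.
monthly = list(range(60))
X, y = make_lagged_dataset(monthly, k=3)
print(len(X), X[0], y[0])
```

With 60 monthly observations and $k = 3$, this yields 57 training rows: each row looks back only three months, yet together the rows cover the whole history.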

Consider a simple autoregressive model of order 3, $AR(3)$, of a time series (note that this model is oversimplified):

$X_t = \alpha_1 X_{t-1} + \alpha_2 X_{t-2} + \alpha_3 X_{t-3}$

So $X_t$ depends only on the last three periods (that is my optimal window, so to speak), but I still need to use my entire history, not just the data from the last 3 months, to find the best estimates of $\alpha_1$, $\alpha_2$ and $\alpha_3$.
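As a sketch of that point, the code below simulates an AR(3) series (adding a Gaussian noise term, which is an assumption not present in the equation above) and estimates $\alpha_1, \alpha_2, \alpha_3$ by ordinary least squares over every available window. OLS is one common estimator among several (maximum likelihood is another); the helper names `solve_linear`, `fit_ar_least_squares`, and `simulate_ar` are hypothetical.

```python
import random


def solve_linear(a, b):
    """Solve a small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_ar_least_squares(series, k):
    """OLS estimate of alpha_1..alpha_k in X_t = sum_j alpha_j * X_{t-j} + noise (no intercept)."""
    rows = [[series[t - j] for j in range(1, k + 1)] for t in range(k, len(series))]
    y = series[k:]
    # Normal equations (A^T A) alpha = A^T y, with one row of A per time point in the history.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    aty = [sum(r[i] * yt for r, yt in zip(rows, y)) for i in range(k)]
    return solve_linear(ata, aty)


def simulate_ar(alphas, n, seed=0):
    """Simulate an AR(p) series with standard normal noise (an assumption for this demo)."""
    rng = random.Random(seed)
    p = len(alphas)
    x = [0.0] * p  # zero starting values
    for _ in range(n):
        x.append(sum(a * x[-j] for j, a in enumerate(alphas, start=1)) + rng.gauss(0.0, 1.0))
    return x[p:]


# Usage: the model only looks back 3 periods, but the estimate uses the full history.
true_alphas = [0.5, 0.2, 0.1]
history = simulate_ar(true_alphas, 2000)
print(fit_ar_least_squares(history, 3))
```

With only the last 3 months there would not be a single complete (lags, target) row, and a handful of rows leaves the three coefficients poorly determined; the estimation error shrinks as more history is used.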

To answer your final question: the standard statistical tools for finding the number of lags are the autocorrelation function (ACF) and the partial autocorrelation function (PACF). They are usually applied only after the time series has been made stationary. Seasonality is usually something that you can figure out from your business case (monthly sales data will have a seasonality of 12, hourly restaurant customer data will have a seasonality of 24, daily customer data at a movie theater will have a seasonality of 7, etc.).
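A minimal pure-Python sketch of the sample ACF and PACF (the PACF computed from the ACF with the Durbin-Levinson recursion), plus the usual approximate $\pm 1.96/\sqrt{n}$ significance band. The function names are hypothetical; in practice statsmodels provides `acf`, `pacf`, `plot_acf`, and `plot_pacf`. Applied to a stationary (e.g. already differenced) series, PACF lags outside the band suggest candidate AR orders, and for monthly data a spike at lag 12 hints at yearly seasonality.

```python
import math
import random


def acf(series, max_lag):
    """Sample autocorrelations r_0..r_max_lag (r_0 = 1) around the overall mean."""
    n = len(series)
    mean = sum(series) / n
    dev = [x - mean for x in series]
    denom = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t - h] for t in range(h, n)) / denom
            for h in range(max_lag + 1)]


def pacf(series, max_lag):
    """Sample partial autocorrelations via the Durbin-Levinson recursion (index 0 is 1)."""
    r = acf(series, max_lag)
    result = [1.0]
    phi = []  # phi[j] holds phi_{k-1, j+1}, the AR(k-1) coefficients fitted so far
    for k in range(1, max_lag + 1):
        num = r[k] - sum(phi[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        phi = [phi[j] - phi_kk * phi[k - 2 - j] for j in range(k - 1)] + [phi_kk]
        result.append(phi_kk)
    return result


def significant_lags(values, n, z=1.96):
    """Lags >= 1 whose value lies outside the approximate +/- z / sqrt(n) band."""
    bound = z / math.sqrt(n)
    return [h for h, v in enumerate(values) if h >= 1 and abs(v) > bound]


# Usage: a simulated AR(1) series should show one dominant PACF spike at lag 1.
rng = random.Random(1)
x = [0.0]
for _ in range(2000):
    x.append(0.7 * x[-1] + rng.gauss(0.0, 1.0))
series = x[1:]
print(significant_lags(pacf(series, 24), len(series)))
```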