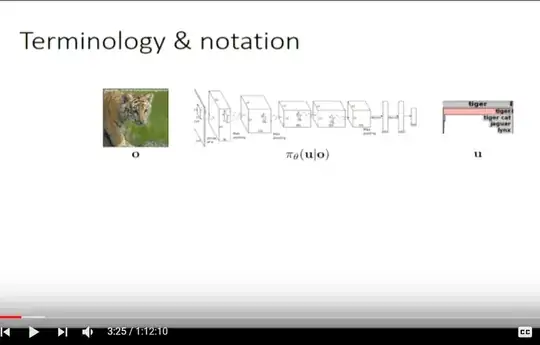

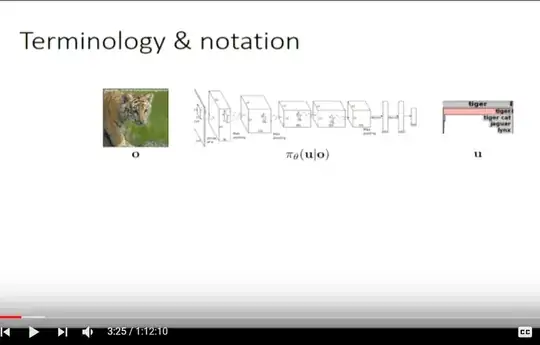

In order to answer the question, you need to understand what distribution the lecturer is talking about in the video you linked to in the question. For example, he talks about the "distribution of the labels conditional on the image":

$$\pi_\theta(\texttt u|\texttt o)$$

where $\theta$ - (hyper)parameters of NN, $\texttt u$ - labels and $\texttt o$ - images. The output $\texttt u$ could be an action of a self-driving car etc.

Here we're talking about a univariate distribution of scalar labels $\texttt u$. The fact that our inputs $\texttt o$ are called "tensors" in ML lingo, doesn't make this a multivariate or joint distribution. We're not talking about the distribution of the inputs, but we're conditioning our output distribution on inputs (data).

So, in this case when we talk about IID data, we mean that this distribution should not depend on the image in a sequence, it should be the same $\pi_\theta$ for any input/output pair $o_i,u_i$.

Is this a reasonable assumption? I would argue that it depends on the dataset.

Suppose that we're talking about color labels on the objects. If your images were tagged by color blind and not color blind people, then clearly at the very least $\pi_\theta$ may not be the same for all images and the "ID" (identically distributed) will drop out from IID. Here, I'm assuming you don't know whether your tagger was color blind or not. If the sample is random, then the first "I" (independent) still probably stays.

On the other hand, if our data set is with "cat images", then IID assumption will sound more reasonable: almost anybody who's asked to tag the images will probably be able to recognize a cat on the image as well as anyone else, for instance.

Summarizing, it's quite easy to break IID assumption if you're not careful, e.g. the sample is not random. However, in many cases you can build a data set which will satisfy IID assumption. I would argue that you can construct a sample from NIST database of handwritten digits and assume that the labels are IID.

Where does $\pi_\theta$ come from?

I see a lot of confusion in comments as to the nature of the probability distribution $\pi_\theta$ and even its role in image recognition problem. Let's take a closer look at how statistical learning problem is set up. Suppose that our goal is to recognize the hand written digits.

IF our goal was to recognize the digits from images in MNIST database THEN this would be a pure IT problem. Say, there is 70,000 images of single digits in MNIST, then we'd create a map with 70,000 entries. The key in the map (dictionary) is the image, and the value is one of 10 digits. The recognition reduces to simply finding an input image by bitwise comparison to keys, and pulling the value. This is the case when we treat MNIST database as the population, i.e. every possible outcome.

Obviously, this is not a useful approach, because we want to recognize the digits on any picture, not just the ones in MNIST database. Hence, MNIST database is just a sample from the infinite size population. In order to appreciate the difference with previous approach consider the following image from MNIST. Which digit is it, three or five?

The points are:

Consider how this data set was constructed and you'll see where the disturbances (errors) come from:

- NIST collected writing samples from hundreds of US Census employees and high school students. Each writer writes digits differently than anybody else.

- every time we write a digit it's slightly different that previous time.

- Camera placement or slight difference in how form is placed into a scanner

- The exact cropping of the image from the scan or photo

- The lighting will be different when photo copy or camera shot is done.

- The censor in the copier or camera has thermal noise in pixels.

- Errors while tagging images

- etc.

All these factors lead to variations and distortions of the ideal digit image every time we get picture of it. When you have an infinite population that comprises of countless number of factors impacting the final outcome probabilistic approaches start making a sense.

This is why the statistical learning in image recognition sounds like a reasonable idea to try. It's not the only possible way to solve the problem, but it had a huge rate of success in recent years.