When two equestrian judges score an event, each gives a mark for every horse/rider combination. Let's assume that there are 20 horses being judged. In comparing their scores, the judges might get a very poor correlation (e.g. R < 0) for the 8 top-scoring, place-getting horses (ranked by the average of both judges' scores), but a very high positive correlation for the remaining 12 low-scoring horses. As a result, the aggregate correlation coefficient (e.g. R > 0) might still show that the two judges were "quite well aligned" in their overall scoring of the event, whereas we know that they did not agree consistently when we analyse subsets of the data. So what conclusion can we draw about the degree of "alignment" between these two judges, which is the key question we are trying to answer? Can we still say that, over the entire data set, "they are well aligned in their judging", or do we say that the data provide no conclusion about their degree of alignment, given that R differs substantially between subsets of the data? Perhaps the question is also this: if this is the sort of data we are looking at, and we are trying to decide just how "aligned" the judges are in their scoring of the horses, is correlation the best statistic to use?

-

I'd suggest explaining how equestrian judging takes place and what constraints there are, as many people (certainly including me) will not know any details of that. Consider a judging system in which one judge is always more generous than another by a fixed amount, say 3 points. Then the correlation is perfect but the scores are not identical. If you want correlation to serve as a measure of agreement, it is flawed for that purpose. Put another way, I would welcome a clearer statement of the aims of your analysis. – Nick Cox Apr 08 '18 at 11:25

-

I'd expect a careful analysis to show interesting fine structure such as agreement on better horses and disagreement on the others. In general, no single numerical measure of relationship can capture details like that adequately. – Nick Cox Apr 08 '18 at 11:26

-

In terms of the Pearson correlation statistic, the degree of generosity is already taken into account by the intercept term in the linear equation. Yes, one judge may be more "generous" than the other. Certainly, if that were so, that would be a good reason for the two judges to discuss why they differed in the MAGNITUDE of their marks. HOWEVER, what is more important for me is to know how the judges have RELATIVELY assessed riders in terms of their scores. Incidentally, I also conduct my analysis in terms of judge rankings, but the same subset question really arises. – Denis W Apr 08 '18 at 11:53

-

Yes Nick, I think what you have suggested is probably the best way to handle this, that is, to explain the relationships on a subset-by-subset basis (i.e. "better horses" as against "the rest"), and I must admit that I have adopted this approach myself in the past. However, I have always worried that there might be some more elegant statistical way of addressing this question that I was simply unaware of. – Denis W Apr 08 '18 at 12:09

-

As before, I have to confess complete ignorance of how horses and riders are judged. I am familiar only with grading schemes in which (a) a judge can give whatever grade or mark seems merited (out of a maximum) and (b) marks are independent, so that there is no quota or curve to be followed. I'd expect that principal component analysis or correspondence analysis might be helpful to you. Are there example datasets you can post? – Nick Cox Apr 08 '18 at 12:31

-

I can appreciate the need for a clearer statement of my problem. Each horse is judged by 2 judges, who each give a percentage mark. The overall score (hence the placing) for each horse is the average of those 2 scores. If the judges were of equal ability, their scores for each horse should be similar. Certainly they should have ranked all the horses in the same order. However, judges do differ in ability. An equestrian official has asked me to provide a statistic which clearly shows just how well 2 judges are aligned in terms of their scores. – Denis W Apr 09 '18 at 00:47

-

Judges' scores for 19 horses: (70.1,73.0) (67.4,75.1) (68.0,73.9) (68.3,73.0) (66.4,74.3) (69.1,71.4) (71.6,69.8) (70.1,67.6) (67.1,69.0) (64.6,69.9) (57.7,69.1) (61.3,64.1) (64.7,58.6) (60.7,58.6) (60.7,58.6) (55.4,63.1) (55.3,55.7) (54.6,55.6) (47.4,50.6) – Denis W Apr 09 '18 at 00:47

-

My problem: if I calculate a correlation coefficient for the judges' scores over all 19 horses, R = 0.811, which would signify quite a good degree of alignment between these two judges. However, they are actually negatively aligned on the top 8 horses (R = -0.766). They are also not well aligned on the remaining 11 horses (R = 0.680). How do I answer the equestrian official? Are the judges well aligned or not? – Denis W Apr 09 '18 at 00:48

-

I've tweaked title and tags to flag the wider question. Nothing here of statistical interest is unique to equestrianism. – Nick Cox Apr 09 '18 at 10:33

-

NB Your subsetting procedure should be expected to lead to smaller correlation coefficients' being calculated for each subset - see e.g. https://stats.stackexchange.com/a/13317/17230. – Scortchi - Reinstate Monica Apr 09 '18 at 11:17

1 Answer

Thanks for the explanation of judging and the data example, which make the question helpfully concrete. I will label your judges A and B

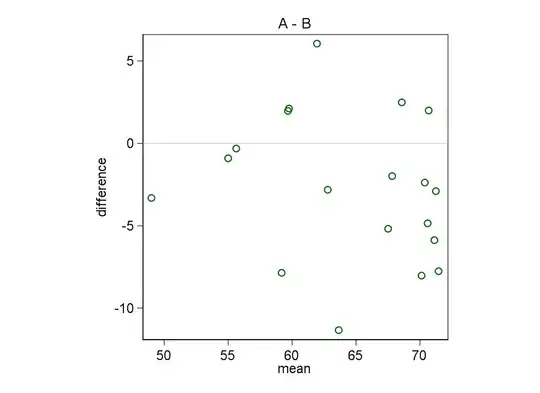

- A helpful plot is difference A $-$ B versus mean (A + B)/2. In biostatistics especially this plot is often called the Bland-Altman plot after statisticians Martin Bland (1947- ) and Douglas Altman (1948- ). It has a longer history and was, for example, often mentioned by John W. Tukey (1915--2000) as a simple device. A natural reference line is A $-$ B = 0 corresponding to agreement A = B. Here is one such for your sample data.

I don't think it helps much to look for clusters in this plot or the more obvious scatter plot A versus B unless they really jump out at you. The risk of over-interpreting small clusters is probably greater than that of missing genuine fine structure.

A measure of agreement (rather than correlation) is concordance correlation, often attributed to Lin, who has made most of it, but explicit or implicit in earlier work by others. Here are some results from a Stata implementation for your data:

Concordance correlation coefficient (Lin, 1989, 2000):

| rho_c | SE(rho_c) | Obs | 95% CI | P | CI type |
|---|---|---|---|---|---|
| 0.749 | 0.098 | 19 | 0.558 to 0.940 | 0.000 | asymptotic |
| | | | 0.489 to 0.887 | 0.000 | z-transform |

Pearson's r = 0.811, Pr(r = 0) = 0.000; C_b = rho_c/r = 0.923

Reduced major axis: Slope = 0.880, Intercept = 5.215

| Average difference (A - B) | Std Dev. | 95% Limits Of Agreement (Bland & Altman, 1986) |
|---|---|---|
| -2.658 | 4.433 | -11.347 to 6.031 |

Correlation between difference and mean = -0.213

Bradley-Blackwood F = 3.783 (P = 0.04374)
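For readers without Stata, the main numbers above can be recomputed from their definitions. This is a minimal Python sketch, not the Stata implementation. It follows Lin (1989) in using $n$ rather than $n - 1$ as the divisor for variances and covariance (with $n - 1$, the concordance correlation here would come out slightly higher), and it fits the reduced major axis predicting A from B.

```python
import math
import statistics

judge_a = [70.1, 67.4, 68.0, 68.3, 66.4, 69.1, 71.6, 70.1, 67.1, 64.6,
           57.7, 61.3, 64.7, 60.7, 60.7, 55.4, 55.3, 54.6, 47.4]
judge_b = [73.0, 75.1, 73.9, 73.0, 74.3, 71.4, 69.8, 67.6, 69.0, 69.9,
           69.1, 64.1, 58.6, 58.6, 58.6, 63.1, 55.7, 55.6, 50.6]

n = len(judge_a)
ma, mb = statistics.fmean(judge_a), statistics.fmean(judge_b)
# Variances and covariance with divisor n, following Lin (1989)
saa = sum((a - ma) ** 2 for a in judge_a) / n
sbb = sum((b - mb) ** 2 for b in judge_b) / n
sab = sum((a - ma) * (b - mb) for a, b in zip(judge_a, judge_b)) / n

rho_c = 2 * sab / (saa + sbb + (ma - mb) ** 2)  # concordance correlation
r = sab / math.sqrt(saa * sbb)                  # Pearson's r (the divisor cancels)
c_b = rho_c / r                                 # bias-correction factor C_b

# Reduced major axis predicting A from B: slope = sign(r) * sd(A) / sd(B)
slope = math.copysign(math.sqrt(saa / sbb), r)
intercept = ma - slope * mb

print(f"rho_c = {rho_c:.3f}, r = {r:.3f}, C_b = {c_b:.3f}")
print(f"RMA slope = {slope:.3f}, intercept = {intercept:.3f}")
```

The results agree with the output to three decimals: rho_c of about 0.749, r of about 0.811, C_b of about 0.923, slope of about 0.880 and intercept of about 5.215.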

The concordance correlation is pulled below the Pearson correlation by any systematic differences between judges. Although it is just a single-number summary, the concordance correlation is focused on measuring agreement, not correlation!
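One way to see why is the decomposition given by Lin (1989), which factors the concordance correlation into Pearson's $r$ and the bias-correction factor $C_b$ reported in the output. With means $\bar A, \bar B$ and standard deviations $s_A, s_B$ computed with $n$ divisors:

$$
\rho_c = \frac{2 s_{AB}}{s_A^2 + s_B^2 + (\bar A - \bar B)^2} = r \, C_b,
\qquad
C_b = \frac{2}{v + 1/v + u^2},
\qquad
v = \frac{s_A}{s_B},
\qquad
u = \frac{\bar A - \bar B}{\sqrt{s_A s_B}}
$$

Since $v + 1/v \ge 2$, with equality only at $v = 1$, it follows that $C_b \le 1$, with $C_b = 1$ only when the judges have equal means ($u = 0$) and equal spreads ($v = 1$). For these data $v \approx 0.88$ and $u \approx -0.39$, giving $C_b \approx 0.92$.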

A paper of mine surveying this territory may be accessible to you. Its examples come from a different field but should not be too distractingly alien: the principles here are generic.

Detail: The plot here uses jittering to shake identical points apart.


-

This is extremely useful, Nick, and I thank you for your assistance. I have already started to research the use and calculation of Lin's coefficient and of Kendall's W coefficient for non-parametric data. This is clearly the approach I need to take to address the problem at hand. – Denis W Apr 09 '18 at 10:44
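Since Kendall's W comes up here: for $m$ judges ranking $n$ horses, $W = 12S / (m^2(n^3 - n))$, where $S$ is the sum of squared deviations of each horse's rank total from its mean, $m(n+1)/2$. Below is a minimal Python sketch without a tie correction; `kendall_w` is a hypothetical helper written for illustration. With only two judges and no ties, $W = (1 + r_s)/2$, where $r_s$ is Spearman's rank correlation, so for a single pair of judges it carries the same information as $r_s$. Its extra value lies in summarising more than two judges.

```python
def kendall_w(ratings):
    """Kendall's coefficient of concordance W for m judges scoring the same n items.

    `ratings` is a list of m lists, each holding one judge's scores for the n items.
    Higher score = higher rank. Assumes no tied scores: ties are broken by input
    order, and no tie correction is applied.
    """
    m, n = len(ratings), len(ratings[0])
    rank_lists = []
    for scores in ratings:
        order = sorted(range(n), key=lambda i: scores[i])
        ranks = [0] * n
        for position, item in enumerate(order, start=1):
            ranks[item] = position
        rank_lists.append(ranks)
    totals = [sum(ranks[i] for ranks in rank_lists) for i in range(n)]
    mean_total = m * (n + 1) / 2
    s = sum((t - mean_total) ** 2 for t in totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))

# Two judges, five items, no ties: Spearman's r_s is 0.8 here, so W = (1 + 0.8) / 2
print(kendall_w([[3, 1, 4, 2, 5], [2, 1, 5, 3, 4]]))  # → 0.9
```

The posted scores do contain ties (58.6 appears three times for the second judge), so a tie-corrected W would be needed for them.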