I have a particular problem where I am using Bayesian techniques to estimate parameters of a distribution of a random variable.

I would like to use an external source of data to determine an appropriate prior distribution for the analysis (which will then be updated with internal data). Let's denote this external data as

$$\boldsymbol{X}=\{X_{1},X_{2},\ldots,X_{n}\}$$

Now, ideally I would like to use this data to determine the prior distribution of an underlying parameter vector

$$\boldsymbol{\Theta}=\{\theta_{1},\theta_{2}\}$$

My current approach is:

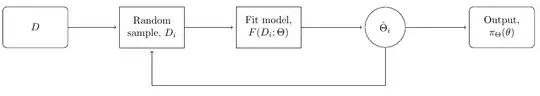

For $m=\{1,\ldots,M\}$ iterations:

- Take a random subset of $\boldsymbol{X}$, denote this as $\boldsymbol{X}_{m}$.

- Estimate the parameters using $\boldsymbol{X}_{m}$, giving $\hat{\boldsymbol{\Theta}}_{m}$.

The above process provides $M$ estimates of $\boldsymbol{\Theta}$ and provides a prior distribution for our analysis.

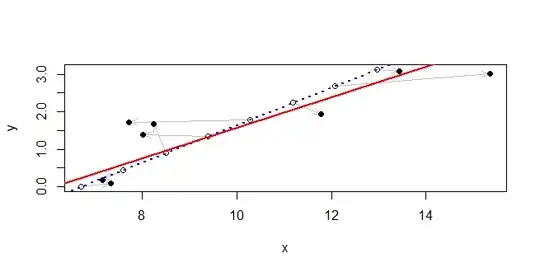

I feel this a reasonable approach as it should be quite robust as we have randomly sampled from our original data each time we estimate. Obviously, the analysis will depend on the size of the subsample. I view this as some sort of cross-validation implicit in the estimation.

Is the above a reasonable approach to determining a prior distribution from an external source of data?

Additional Essentially, I want the external data to construct prior distributions for each of the parameters. The external data contains information that the internal data is lacking and therefore would like the priors to be a starting point for the Bayesian analysis which the internal estimates will be based on.

Just for clarification, the model had been set up as follows: