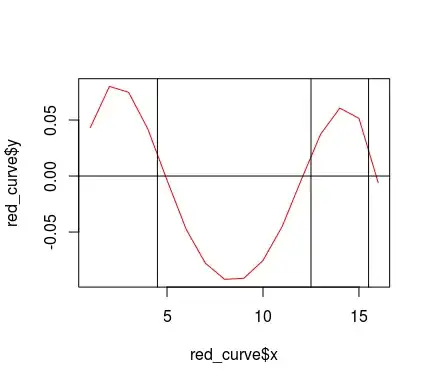

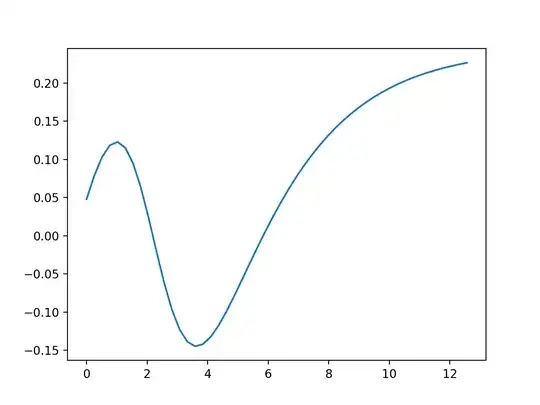

I wrote a simple RNN program to fit the function y = sin(x). I trained the network on data with 0 < x < 2*pi and y = sin(x), and then checked the wave the trained network generates. For 0 < x < 2*pi the output looks like a sine wave, but for x > 2*pi it no longer looks like a sine wave; it looks more like a tanh curve. I expected a simple RNN like this to be able to generate a sine wave of unbounded length, but I couldn't get it to work. Is there any way to make a simple RNN fit a sine wave of infinite length? Any help would be appreciated.

Overview of the program:

Simple RNN program:

    import tensorflow as tf
    import numpy as np
    import random
    from matplotlib import pyplot as plt

    # Create graph
    # inputs
    x = tf.placeholder(tf.float32, [])
    t = tf.placeholder(tf.float32, [])
    y_prev = tf.Variable(0.0)

    # weights
    ws = [tf.Variable(random.random()*2 - 1) for _ in range(6)]
    # Here are the trained weights
    # ws = [tf.Variable(w) for w in (-0.20203453, -0.9639475, -0.66465586, 0.5334513, -1.0572276, 0.55196327)]

    # biases
    bs = [tf.Variable(random.random()*2 - 1) for _ in range(3)]
    # Here are the trained biases
    # bs = [tf.Variable(b) for b in (0.17080691, 1.4228324, -0.26205453)]

    # Calc outputs of hidden neurons 1 and 2
    h1 = tf.tanh(ws[0]*x + ws[1]*y_prev + bs[0])
    h2 = tf.tanh(ws[2]*x + ws[3]*y_prev + bs[1])

    # Calc y
    y = ws[4]*h1 + ws[5]*h2 + bs[2]

    # Calc loss
    loss = tf.square(t - y)

    # Define the update-y_prev operation
    update_val = tf.placeholder(tf.float32, [])
    update_y_prev_op = tf.assign(y_prev, y)

    # Define the initialize-y_prev operation
    init_y_prev_op = tf.assign(y_prev, 0.0)

    train_step = tf.train.GradientDescentOptimizer(0.03).minimize(loss, var_list=ws+bs)

    initializer = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(initializer)

    train_x_lst = np.linspace(0, 2*np.pi, 50)
    train_y_lst = np.sin(train_x_lst)

    sess.run(init_y_prev_op)
    for epoch_n in range(100):
        for x_value, y_value in zip(train_x_lst, train_y_lst):
            sess.run(train_step, feed_dict={x:x_value, t:y_value})
            sess.run(update_y_prev_op, feed_dict={x:x_value})
        sess.run(init_y_prev_op)

    # validation
    predict_x = np.linspace(0,2*np.pi,50)
    predict_y = []
    sess.run(init_y_prev_op)
    for x_value in predict_x:
        predict_y.append(sess.run(y, feed_dict={x:x_value}))

    #plt.plot(train_x_lst, train_y_lst)
    plt.plot(predict_x, predict_y)
    plt.show()

    print("ws:", [sess.run(var) for var in ws])
    print("bs:", [sess.run(var) for var in bs])

    sess.close()
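To look at the behaviour for x > 2*pi without TensorFlow, here is a minimal plain-Python sketch of the same forward pass, using the trained weights and biases quoted in the comments above. The helper names `forward`, `rollout` and `saturation_value` are made up for this illustration. With `feed_back=True` each output becomes the next `y_prev`, as `update_y_prev_op` does in the training loop; with `feed_back=False`, `y_prev` stays at 0, as in the validation loop. The range of x used in the demo is an arbitrary choice.

```python
import math

# Trained parameters copied from the comments in the program above.
WS = (-0.20203453, -0.9639475, -0.66465586, 0.5334513, -1.0572276, 0.55196327)
BS = (0.17080691, 1.4228324, -0.26205453)


def forward(x, y_prev):
    """One step of the network: returns y for input x and previous output y_prev."""
    h1 = math.tanh(WS[0] * x + WS[1] * y_prev + BS[0])
    h2 = math.tanh(WS[2] * x + WS[3] * y_prev + BS[1])
    return WS[4] * h1 + WS[5] * h2 + BS[2]


def rollout(xs, feed_back=True):
    """Run the network over xs.

    feed_back=True: each output is fed back as the next y_prev (training loop).
    feed_back=False: y_prev stays at 0 (validation loop).
    """
    y_prev, ys = 0.0, []
    for x in xs:
        y = forward(x, y_prev)
        ys.append(y)
        if feed_back:
            y_prev = y
    return ys


# WS[0] and WS[2] are both negative, so for large x both tanh units
# approach -1 and the output approaches this constant.
saturation_value = -WS[4] - WS[5] + BS[2]

if __name__ == "__main__":
    step = 2 * math.pi / 49              # same spacing as np.linspace(0, 2*pi, 50)
    xs = [i * step for i in range(800)]  # extends far beyond 2*pi (arbitrary range)
    ys = rollout(xs)
    for i in range(0, 800, 100):
        print(f"x = {xs[i]:8.3f}   y = {ys[i]: .4f}")
    print("saturation value:", round(saturation_value, 4))
```

With these particular weights, x enters each hidden unit only through a term `w*x` inside a tanh, so once x is large enough both units saturate and the output flattens to a constant, whether or not `y_prev` is fed back. That seems consistent with the tanh-like curve I see for x > 2*pi.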

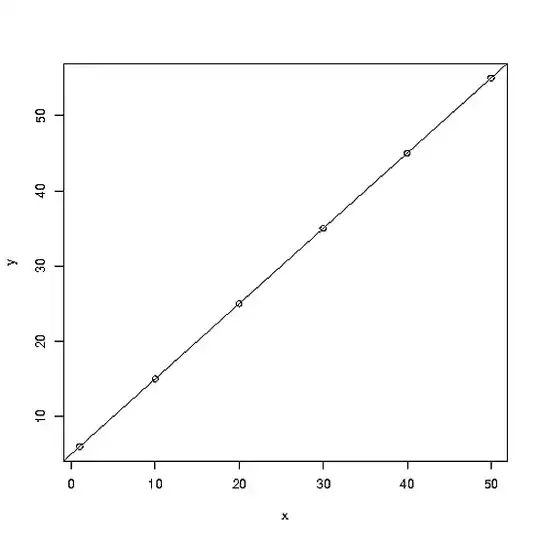

Trained RNN prediction (0 < x < 2*pi):