I've been working on a regression problem where the input is an image and the label is a continuous value between 80 and 350. The images show some chemicals after a reaction has taken place. The resulting color indicates the concentration of another chemical that's left over, and that concentration is what the model should output. The images can be rotated or mirrored, and the expected output should stay the same. This sort of analysis is done in real labs: very specialized machines determine the concentration of chemicals using color analysis, just as I'm training this model to do.
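
The rotation and mirroring invariance described above could be exploited as data augmentation. Here is a minimal sketch, assuming each image is a NumPy array of shape (height, width, channels); the helper name `dihedral_views` is hypothetical and not part of my current pipeline:

```python
import numpy as np


def dihedral_views(image: np.ndarray) -> list:
    """Return the 8 rotated/mirrored copies of an (H, W, C) image.

    Every copy would keep the same concentration label.
    """
    views = []
    for k in range(4):
        # Rotate by k * 90 degrees in the spatial (H, W) plane.
        rotated = np.rot90(image, k=k, axes=(0, 1))
        views.append(rotated)
        # Mirror the rotated image left-to-right.
        views.append(np.flip(rotated, axis=1))
    return views
```

None of these transforms changes the per-channel mean color, which fits the idea that the label depends on color rather than orientation.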

So far I've only experimented with models loosely based on VGG (multiple sequences of conv-conv-conv-pool blocks). Before experimenting with more recent architectures (Inception, ResNets, etc.), I thought I'd check whether there are other architectures more commonly used for regression on images.

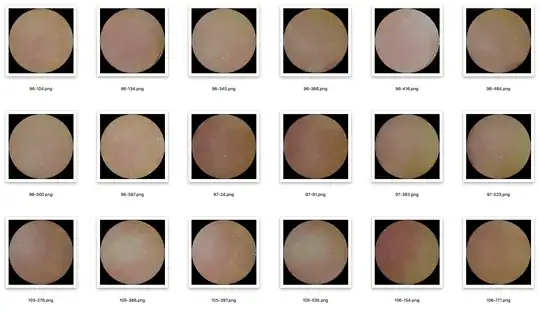

The dataset looks like this:

The dataset contains about 5,000 samples at 250x250 pixels, which I've resized to 64x64 so training is easier. Once I find a promising architecture, I'll experiment with higher-resolution images.

So far, my best models have a mean squared error on both training and validation sets of about 0.3, which is far from acceptable in my use case.
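
Since MSE is in squared units, it can be easier to compare against the 80 to 350 label range after taking the square root. A small sketch of that arithmetic follows; `label_scale` is a hypothetical parameter that only matters if the labels were divided by some factor before training, which is an assumption and not something stated above:

```python
import math


def rmse_from_mse(mse: float, label_scale: float = 1.0) -> float:
    """Convert an MSE into an RMSE in the original label units.

    label_scale is hypothetical: the factor the labels were divided by
    before training (1.0 means the loss was computed on raw labels).
    """
    return math.sqrt(mse) * label_scale


# With raw labels, an MSE of 0.3 corresponds to an RMSE of about 0.55.
print(rmse_from_mse(0.3))
```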

My best model so far looks like this:

    // pseudo code
    x = conv2d(x, filters=32, kernel=[3,3])->batch_norm()->relu()
    x = conv2d(x, filters=32, kernel=[3,3])->batch_norm()->relu()
    x = conv2d(x, filters=32, kernel=[3,3])->batch_norm()->relu()
    x = maxpool(x, size=[2,2], stride=[2,2])

    x = conv2d(x, filters=64, kernel=[3,3])->batch_norm()->relu()
    x = conv2d(x, filters=64, kernel=[3,3])->batch_norm()->relu()
    x = conv2d(x, filters=64, kernel=[3,3])->batch_norm()->relu()
    x = maxpool(x, size=[2,2], stride=[2,2])

    x = conv2d(x, filters=128, kernel=[3,3])->batch_norm()->relu()
    x = conv2d(x, filters=128, kernel=[3,3])->batch_norm()->relu()
    x = conv2d(x, filters=128, kernel=[3,3])->batch_norm()->relu()
    x = maxpool(x, size=[2,2], stride=[2,2])

    x = dropout(x)->conv2d(filters=128, kernel=[1,1])->batch_norm()->relu()
    x = dropout(x)->conv2d(filters=32, kernel=[1,1])->batch_norm()->relu()

    y = dense(x, units=1)

    loss = mean_squared_error(y, labels)
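
For anyone who wants to reproduce this, the pseudocode could be written roughly as follows in PyTorch. This is only a sketch under several assumptions the pseudocode leaves open: RGB input (3 channels), "same" padding on the convolutions, a dropout rate of 0.5, and a flatten step before the dense layer. The names `conv_bn_relu`, `vgg_block`, and `ColorRegressor` are made up for illustration.

```python
import torch
from torch import nn


def conv_bn_relu(in_ch: int, out_ch: int, kernel: int) -> nn.Sequential:
    """conv -> batch norm -> ReLU; 'same' padding is an assumption."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=kernel, padding=kernel // 2),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
    )


def vgg_block(in_ch: int, out_ch: int) -> nn.Sequential:
    """Three 3x3 conv layers followed by 2x2 max pooling (halves H and W)."""
    return nn.Sequential(
        conv_bn_relu(in_ch, out_ch, 3),
        conv_bn_relu(out_ch, out_ch, 3),
        conv_bn_relu(out_ch, out_ch, 3),
        nn.MaxPool2d(kernel_size=2, stride=2),
    )


class ColorRegressor(nn.Module):
    """Hypothetical PyTorch version of the pseudocode above."""

    def __init__(self, in_channels: int = 3, image_size: int = 64,
                 dropout: float = 0.5):
        super().__init__()
        self.features = nn.Sequential(
            vgg_block(in_channels, 32),
            vgg_block(32, 64),
            vgg_block(64, 128),
        )
        # Three poolings shrink each spatial side by a factor of 8.
        side = image_size // 8
        self.head = nn.Sequential(
            nn.Dropout(dropout),
            conv_bn_relu(128, 128, 1),
            nn.Dropout(dropout),
            conv_bn_relu(128, 32, 1),
            nn.Flatten(),
            nn.Linear(32 * side * side, 1),  # single linear output, no activation
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Drop the trailing dimension so predictions have shape (batch,).
        return self.head(self.features(x)).squeeze(1)


model = ColorRegressor()
images = torch.randn(4, 3, 64, 64)   # stand-in batch, not real data
labels = torch.full((4,), 200.0)     # stand-in concentrations
loss = nn.functional.mse_loss(model(images), labels)
```

The output layer is a single linear unit with no activation, trained with mean squared error, which matches the `dense(x, units=1)` and loss lines in the pseudocode.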

Question

What is an appropriate architecture for regression output from an image input?

Edit

I've rephrased my explanation and removed mentions of accuracy.

Edit 2

I've restructured my question so that it's hopefully clearer what I'm after.