I want to get a bit familiar with diagnostic plots for count data. I generated these two plots, but I don't understand what they should tell me. Maybe someone can sum up a few important things?


-

Related: [Diagnostic plots for count regression](https://stats.stackexchange.com/q/70558/). – gung - Reinstate Monica Mar 12 '18 at 13:49

-

I think it would be more common to put the fitted values on the x-axis for your 2nd plot. – gung - Reinstate Monica Mar 12 '18 at 13:50

-

What are "breaks in data"? – AdamO Mar 12 '18 at 13:51

-

Sorry, breaks is the count variable in the data set. It is the response variable, the number of breaks. – Dima Ku Mar 12 '18 at 13:58

-

I already read the thread, but it didn't really help me, because he only says: "Here we see some very large residuals and a substantial deviance of the deviance residuals from the normal" (for the plot of deviance residuals vs. fitted values). – Dima Ku Mar 12 '18 at 14:01

1 Answer


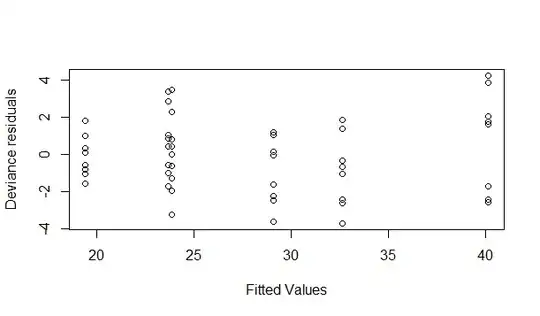

Deviance residual (DR) plot:

- Are the DRs roughly centered on zero?

- Is the variance about the same across the range of fitted values?

- Is the spread of the DRs roughly that of a standard normal distribution? (If they are spread otherwise, there may be over- or underdispersion.)

- Is there heaping at the lowest DR values, which would suggest zero inflation? (You may need to use "jitter" to assess that one in a scatter plot.)
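For readers who want to compute the quantities behind these checks rather than only eyeball them, here is a minimal sketch in plain Python. It assumes you already have the observed counts and the fitted means from a Poisson model. The function names and the example numbers are hypothetical and not taken from the question's data. The deviance residual used is the standard Poisson form, sign(y − μ)·sqrt(2[y·log(y/μ) − (y − μ)]), and the zero check compares the observed share of zeros with the share a Poisson model would expect, the average of exp(−μ).

```python
import math

def poisson_deviance_residuals(observed, fitted):
    """Deviance residuals for a Poisson model, given counts and fitted means."""
    residuals = []
    for y, mu in zip(observed, fitted):
        # The y*log(y/mu) term is taken as 0 when y == 0 (its limiting value).
        log_term = y * math.log(y / mu) if y > 0 else 0.0
        unit_deviance = 2.0 * (log_term - (y - mu))
        # Guard against tiny negative values caused by floating-point rounding.
        magnitude = math.sqrt(max(unit_deviance, 0.0))
        residuals.append(math.copysign(magnitude, y - mu))
    return residuals

def summarize(observed, fitted):
    """Numbers that correspond to the visual checks on the DR plot."""
    dr = poisson_deviance_residuals(observed, fitted)
    n = len(dr)
    mean = sum(dr) / n
    variance = sum((r - mean) ** 2 for r in dr) / (n - 1)
    return {
        "mean": mean,                # should be near 0
        "variance": variance,        # should be roughly 1
        "zero_share_observed": sum(1 for y in observed if y == 0) / n,
        # Share of zeros a Poisson model expects: average of P(Y = 0) = exp(-mu).
        "zero_share_expected": sum(math.exp(-mu) for mu in fitted) / n,
    }

# Hypothetical counts and fitted means, for illustration only.
observed = [0, 2, 3, 5, 4, 7, 9, 6]
fitted = [1.5, 2.0, 3.5, 4.0, 4.5, 6.0, 7.5, 7.0]
print(summarize(observed, fitted))
```

An observed share of zeros well above the expected share is the numerical counterpart of the heaping you would look for in the plot. With small samples these summaries are noisy, so treat them as a supplement to the plot, not a replacement.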

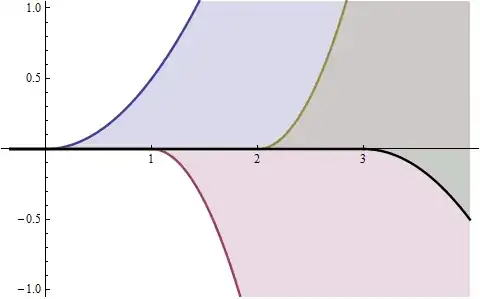

Predicted vs observed plot:

- Does a logarithmic curve seem to interpolate the mean of the values at each level?

- Is the variance of the spread about the mean equal to that mean? (As @gung noted, you have transposed the plot relative to the way it is usually drawn.)
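The mean-variance question can also be put as a single number. One common summary, offered here as a sketch and not as the method used in this answer, is the Pearson dispersion statistic: the sum of (y − μ)²/μ divided by the residual degrees of freedom. Values near 1 are consistent with a Poisson model, while values well above 1 point to overdispersion. The function name and the `n_params` argument (the number of estimated coefficients) are assumptions for illustration.

```python
def pearson_dispersion(observed, fitted, n_params):
    """Pearson chi-square divided by the residual degrees of freedom."""
    if len(observed) != len(fitted):
        raise ValueError("observed and fitted must have the same length")
    dof = len(observed) - n_params
    if dof <= 0:
        raise ValueError("need more observations than estimated parameters")
    # Under a Poisson model Var(Y) = mu, so each term has expectation near 1.
    chi_square = sum((y - mu) ** 2 / mu for y, mu in zip(observed, fitted))
    return chi_square / dof

# Hypothetical example: three counts around a common fitted mean of 4,
# from an intercept-only model (one estimated parameter).
print(pearson_dispersion([0, 4, 8], [4.0, 4.0, 4.0], n_params=1))  # 4.0
```

In this toy example the statistic is 4, meaning the counts vary about four times as much as a Poisson model with that mean would predict.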

Most of these inspections assess the same essential qualities of the model. The DR plot is the most precise way to organize such results, but the predicted vs. observed plot can tell you more about how particular features (say, outliers or heteroscedasticity) affect the model. By my eye, most of these "checks" seem to hold for the plots you generated. When they hold, a Poisson distribution shares many characteristics with the data you are modeling, so inference, predictions, and simulations based on that probability model should be reasonable.

AdamO


-

@DimaKu as I mentioned, it is in the training of the eye. You can use graphical methods in R or actually print the plot out and draw lines. It is subjective. Diagnostics *benefit* from imprecision, because mild or unnoticeable departures from model assumptions result in mild or inconsequential problems with inference, and computational tests of assumptions tend to be too precise. – AdamO Mar 12 '18 at 15:49