I'm really sorry if this question is too basic, but I've been looking for a while and haven't been able to find a convincing response. My statistics background is rather poor.

The geometric distribution is defined as the probability distribution of the number $X$ of Bernoulli trials needed to get one success (or the number of failures before the first success), given a constant success probability $p$ for every trial.

Now suppose that the $p_i$ are iid random variables, with a fresh realization drawn for every single Bernoulli trial (as opposed to one per geometric draw, or a constant value), following a continuous distribution on the interval $[0,1]$ with known mean $E[p_i]=\bar{p}$.

My main question is: Is $X$ still geometrically distributed? If so, is it right to say $X\sim Geom(\bar{p})$?

My intuition is that the problem can be reduced to the simpler (i.e. constant $p$) problem since if $Y\sim Bernoulli(p_i)$, then $E(Y)=E(E(Y|p_i))=E(p_i)=\bar{p}$ by the law of total expectation. From here I could show by induction that $P(X=k)=P(Z=k)$ for every $k\in\mathbb{N}$ and $Z\sim Geom(\bar{p})$. But my probability skills won't let me confirm or reject this. Is this reasoning right?
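The argument I have in mind might be sketched as follows. This is only a sketch under assumptions I am adding for illustration: $X$ counts trials up to and including the first success, $B_i\in\{0,1\}$ denotes the outcome of trial $i$, and the pairs $(p_i, B_i)$ are independent across trials.

$$
\begin{aligned}
P(X=k) &= P(B_1=0,\dots,B_{k-1}=0,\;B_k=1) \\
&= E\left[p_k\prod_{i=1}^{k-1}(1-p_i)\right] \\
&= E[p_k]\prod_{i=1}^{k-1}E[1-p_i] \\
&= \bar{p}\,(1-\bar{p})^{k-1}.
\end{aligned}
$$

The second line conditions on $p_1,\dots,p_k$ and the third uses the independence of the $p_i$. If both steps are legitimate, the last line is exactly the $Geom(\bar{p})$ pmf.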

A complementary question is whether this is a well-known problem (or whether the solution uses a well-known theorem) with a name. I suspect the answer may sound obvious to someone with a better statistics background than me.

At the end of this post, I add sample code I used to simulate the problem. If X is not Geometric, a final question would be: what is going on with my simulations that can't rule it out? Have I faced a special case?

There is a related question here, but in it the $p_i$ are deterministic, which I think makes it a different problem.

Thanks in advance

Update

Thanks to whuber's comment and Glen_b's question, I realize I might have explained myself a bit ambiguously.

What I'm saying is that the $p_i$ are redrawn for each independent Bernoulli trial, not just for each realization of the geometric variable (which could imply several trials with the same success probability).

The Wikipedia article on the Beta-binomial distribution suggests (although the language is ambiguous to me) that the success probability is the same for all the $n$ trials. A mixture of geometric distributions would also imply a single success probability held fixed from the first trial to the first success.

I also ran simulations. I know this does not prove anything, but for what it's worth, I report them here:
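To make the distinction concrete, here is how I understand the mixture case. It is a sketch under my own assumptions: $X$ counts trials up to and including the first success, and a single $p$ is drawn once and reused for every trial until that success.

$$
P(X=k) = E\left[p\,(1-p)^{k-1}\right], \qquad \text{for example} \qquad P(X=2) = E[p]-E[p^2] = \bar{p}(1-\bar{p}) - \mathrm{Var}(p).
$$

So whenever $\mathrm{Var}(p)>0$, the mixture already differs from the $Geom(\bar{p})$ value $\bar{p}(1-\bar{p})$ at $k=2$, which is why I don't think it describes my setup.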

In pseudo-code:

    Let p_i ~ G                  // some distribution
    Let B_i ~ Bernoulli(p_i)     // a new p_i for every B_i

    my_clumsy_generator:
        n ← 0
        do:
            t ← drawn from B_i
            n ← n + 1
        while t ≠ 1

    // Is n distributed Geometric?

Sample Wolfram Mathematica code:

    (* a fresh success probability for every Bernoulli trial *)
    f[] := RandomVariate[BetaDistribution[3, 2]]

    (* number of failures before the first success; n is local to each call *)
    g[] := Module[{n = 0},
      While[RandomVariate[BernoulliDistribution[f[]]] == 0, n = n + 1];
      n]

    ress = Table[g[], {i, 1000000}];
    pp = p /. FindDistributionParameters[ress, GeometricDistribution[p]]
    DistributionFitTest[ress, GeometricDistribution[pp]]

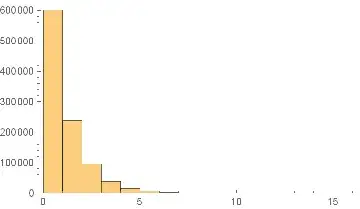

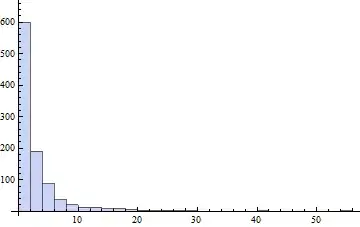

An example run of this code gave pp=0.600311 and a p-value of 0.906769. Below is a resulting histogram for 1,000,000 values:

- I have tried a number of distributions (categorical, uniform, with different parameters) besides the beta shown.

- $pp$ turns out to be consistently close to Mean[pp], and the p-value of the distribution fit test is always high, even at n=1,000,000 (I haven't rejected a single null hypothesis at 95% confidence).