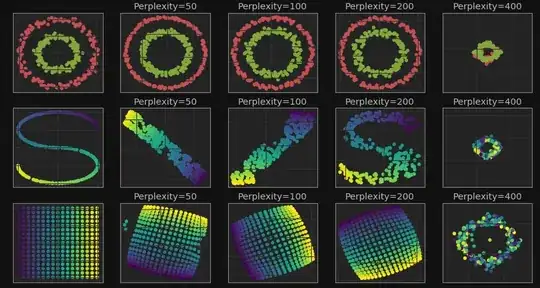

The perplexity cannot be larger than the sample size. [I don't have time right now, but I will try to add a brief mathematical explanation later.]
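A rough sketch of why the bound holds, assuming the standard t-SNE definition of perplexity as 2 raised to the Shannon entropy of the conditional distribution $P_i$ around point $i$ (the notation $p_{j|i}$, $H$, and $N$ for the number of points is introduced here for illustration and is not part of the answer above):

```latex
\mathrm{Perp}(P_i) = 2^{H(P_i)},
\qquad
H(P_i) = -\sum_{j \neq i} p_{j|i} \log_2 p_{j|i}

% P_i is a distribution over the N - 1 points other than i, and the
% entropy of a distribution over N - 1 outcomes is maximal when it is uniform:
H(P_i) \le \log_2 (N - 1)
\quad\Longrightarrow\quad
\mathrm{Perp}(P_i) \le N - 1
```

So under this definition a target perplexity above $N - 1$ corresponds to no bandwidth at all, and the search for one has nothing to converge to.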

A popular t-SNE tutorial, https://distill.pub/2016/misread-tsne/, says:

> The image for perplexity 100, with merged clusters, illustrates a pitfall: for the algorithm to operate properly, the perplexity really should be smaller than the number of points. Implementations can give unexpected behavior otherwise.

As Laurens van der Maaten explains in https://github.com/distillpub/post--misread-tsne/issues/2:

> I just had one small remark: you show some results with perplexity 100 that are a complete mess. This mess is likely not due to a property of the algorithm, but due to the implementation you used not catching invalid parameter inputs. Note that the perplexity of a distribution over N items can never be higher than N (in this case, the distribution is uniform). For t-SNE this means you need at least 101 points to be able to use perplexity 100. If you use a perplexity setting that is too high for the number of points (and have no assertion checking for that), the binary search for the right bandwidth will fail and the algorithm produces garbage (depending on the exact implementation).
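The bound in the quote can be checked numerically. The following is only a minimal sketch using the standard library: `perplexity` computes 2 raised to the Shannon entropy of a discrete distribution, and `check_perplexity` is a hypothetical guard of the kind the quote says was missing. Neither function is taken from any t-SNE implementation.

```python
import math


def perplexity(probs):
    """Return 2**H(p), where H is the Shannon entropy in bits."""
    total = sum(probs)
    entropy_bits = 0.0
    for p in probs:
        q = p / total  # normalise defensively
        if q > 0:  # treat 0 * log(0) as 0
            entropy_bits -= q * math.log2(q)
    return 2.0 ** entropy_bits


def check_perplexity(target, n_points):
    """Hypothetical guard: reject a perplexity that t-SNE cannot reach.

    Each point's distribution is over the other n_points - 1 points,
    so its perplexity can be at most n_points - 1.
    """
    max_perplexity = n_points - 1
    if target > max_perplexity:
        raise ValueError(
            f"perplexity {target} is not reachable with {n_points} points "
            f"(maximum is {max_perplexity})"
        )


# A uniform distribution over 100 items attains the maximum (about 100).
uniform = [1.0 / 100] * 100
print(perplexity(uniform))

# Any non-uniform distribution over the same 100 items has lower perplexity.
skewed = [0.9] + [0.1 / 99] * 99
print(perplexity(skewed))

check_perplexity(100, 101)  # passes: 101 points allow perplexity 100
```

With 100 points, `check_perplexity(100, 100)` raises a `ValueError` instead of letting the bandwidth search run on an unreachable target.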

By the way, I don't trust the scikit-learn implementation of t-SNE. I have had various problems with it, and I prefer to use Laurens van der Maaten's C++ library (Python wrappers for it are available).