I'm taking a Computer Architecture course, and one of the lectures says that when comparing the runtime performance of two systems across several applications, it's fine to take the arithmetic mean of the runtimes, but not the arithmetic mean of the speedups.

Speedup is a ratio, defined as `time_A / time_B` (how many times faster system B is than system A).

Instead, they recommend aggregating the speedups with the geometric mean.
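Here is a small illustration of one commonly cited motivation. It uses hypothetical runtimes, not the numbers from the lecture. The arithmetic mean of per-application speedups depends on which system you treat as the baseline, whereas the geometric mean $\left(\prod_{i=1}^{n} s_i\right)^{1/n}$ does not. The helper functions `arithmetic_mean` and `geometric_mean` are my own sketch and do not come from the course material.

```python
import math

# Hypothetical runtimes (seconds) of two applications on systems A and B.
time_a = [10.0, 20.0]
time_b = [20.0, 10.0]

def arithmetic_mean(xs):
    return sum(xs) / len(xs)

def geometric_mean(xs):
    # n-th root of the product of the n values.
    return math.prod(xs) ** (1.0 / len(xs))

# Speedup of B over A is time_A / time_B; the reverse direction is its reciprocal.
speedup_b_over_a = [ta / tb for ta, tb in zip(time_a, time_b)]  # [0.5, 2.0]
speedup_a_over_b = [tb / ta for ta, tb in zip(time_a, time_b)]  # [2.0, 0.5]

am_ba = arithmetic_mean(speedup_b_over_a)
am_ab = arithmetic_mean(speedup_a_over_b)
gm_ba = geometric_mean(speedup_b_over_a)
gm_ab = geometric_mean(speedup_a_over_b)

# Ratio of total runtimes, for comparison with the aggregated speedups.
total_ratio = sum(time_a) / sum(time_b)

print(am_ba, am_ab)  # 1.25 1.25
print(gm_ba, gm_ab)  # 1.0 1.0
print(total_ratio)   # 1.0
```

With these made-up numbers, the arithmetic mean claims that B is 1.25× faster than A *and* that A is 1.25× faster than B, which is contradictory. The geometric mean gives 1.0 in both directions, so swapping the baseline just inverts the result. Here it also happens to match the ratio of the total runtimes (30 s vs 30 s).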

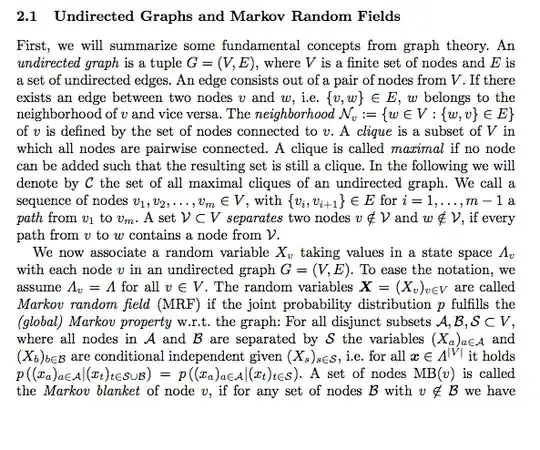

Here's the example from the video:

Is there a general rule against taking the arithmetic mean of ratios? What is the motivation behind it?