Assume $m$ independent binary classifiers with probability $p$ to be correct $p>0.5$. Show that the probability of a voting, e.g. decision is made by the majority of classifiers is correct with probability $q>p$.

I think this problem as equivalent to showing that the Binomial cumulative distribution $\text{CDF}(k,n,p)$ follows $\text{CDF}(k=\frac{m}{2},m,p)<1−p$.

Tried to use Markov's inequality but failed. After some numerical experimenting, I first found out this work only for odd $m$. For example, if $m = 4$ and $p = .6$ voting will have a worse performance ($q < p$) ($q = 0.4752 < .6$). For odd $m$ I verified numerically the claim is correct but find it hard to prove.

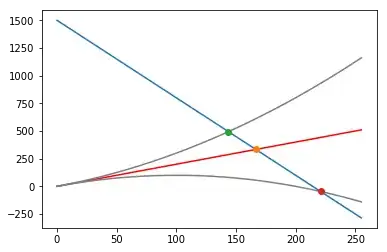

The challenge is to show a bound on the Binomial distribution $\text{CDF}$ which is tight enough to be compared with $p$. When I tried the Markov inequality, $P(x > a) < \dfrac{E(x)}{a}$, I get a bound which is not tight enough. This is demonstrated in the figure below:

Code which created this figure in Python:

Code which created this figure in Python:

p = np.linspace(0.5,1, 100);

n = 5

p_error = binom.cdf(k=n/2, p=p, n=n)

hoeffding = np.exp(-2*(n*p-n/2)**2/n)

chernoff = np.exp(-2*(n*p-n/2)**2/n/p)

def bernoli_dkl(a,p):

return a*np.log(a/p) + (1-a)*np.log((1-a)/(1-p))

dkl = np.exp(-n*bernoli_dkl(1/2,p))

plt.figure(figsize=(3,3), dpi=150)

plt.plot(p, 1-p_error, label='correct')

plt.plot(p, 1-hoeffding, label='hoeffding')

plt.plot(p, 1-chernoff, label='chernoff')

plt.plot(p, 1-dkl, label='dkl')

plt.plot(p, p, label='p')

plt.ylabel('$p_{correct}$')

plt.xlabel('$p$')

plt.title('$n={}$'.format(n))

plt.legend()

Where the bounds where taken from Wikipedia: https://en.wikipedia.org/wiki/Binomial_distribution#Tail_bounds