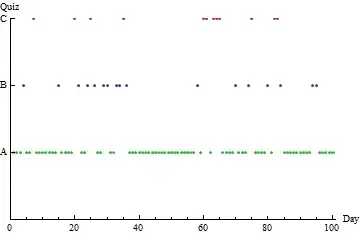

I am looking at a panel data with binary outcomes in each year. The ultimate use of the model I build is for prediction. The cross-sections are quite tall (~100,000 non-cases and ~5,000-20,000 cases) but the number of years is few. I suspect that there may be both a time-varying intercept and one time-varying coefficient. Thus, I am using lme4::glmer. My questions are as follows

- Can I estimate the three co-variance parameters despite a low number years (levels of the random effect factor). Particularly, does it matter that I have tall cross sections (large number of repeated measures)?

- Should I suspect that the results are unreliable? Are there general guidelines for the number of needed levels to estimate co-variance parameters (references would be neat)? Again, does it matter that I have tall cross sections (large number of repeated measures)?

Here is an example to make it more clear

#####

# simulate data

set.seed(71005391)

n_lvls <- 16 # few number of year levels

n_per_lvl <- 1e4 # tall cross section

n <- n_lvls * n_per_lvl

# simulate covariates and random effects

X <- matrix(rnorm(n * 2), n, 2)

year <- as.integer(gl(n_lvls, n_per_lvl))

Q <- matrix(c(.3, .133, .133, .2), 2)

ranefs <- matrix(rnorm(n_lvls * 2), ncol = 2) %*% chol(Q)

# compute linear predictor and simulate outcome

lps <-

# fixed effects

-1 + X[, 1] + X[, 2] +

# random effects

ranefs[year, 1] + ranefs[year, 2] * X[, 2]

df <- data.frame(Y = 1 / (1 + exp(-lps)) > runif(n), X, year = year)

#####

# fit model

library(lme4)

fit <- glmer(Y ~ X1 + X2 + (X2 | year), data = df, family = binomial())

# show estimates

list(est = VarCorr(fit), actual_var = Q, actual_cor = cov2cor(Q))

#R> $est

#R> Groups Name Std.Dev. Corr

#R> year (Intercept) 0.43682

#R> X2 0.43690 0.709

#R>

#R> $actual_var

#R> [,1] [,2]

#R> [1,] 0.300 0.133

#R> [2,] 0.133 0.200

#R>

#R> $actual_cor

#R> [,1] [,2]

#R> [1,] 1.0000000 0.5429702

#R> [2,] 0.5429702 1.0000000

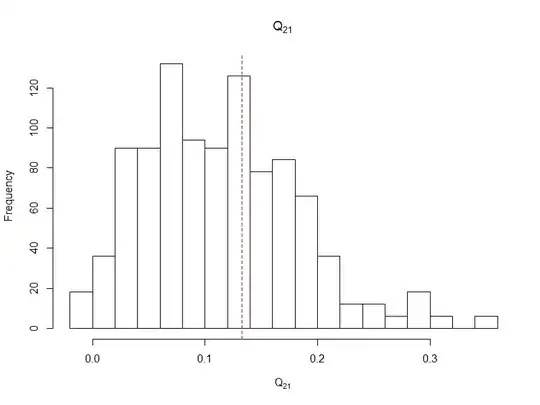

Here are confidence intervals using likelihood profiles for the variance parameters

conf. <- confint.merMod(

fit, method = "profile", quiet = FALSE, oldNames = FALSE,

parm = "theta_", parallel = "snow", ncpus = 7, verbose = TRUE)

#R> Computing profile confidence intervals ...

#R> Warning messages:

#R> 1: In if (parm == "theta_") { :

#R> the condition has length > 1 and only the first element will be used

#R> 2: In if (parm == "beta_") { :

#R> the condition has length > 1 and only the first element will be used

conf.

#R> 2.5 % 97.5 %

#R> sd_(Intercept)|year 0.3195899 0.6469012

#R> cor_X2.(Intercept)|year 0.3642638 0.8832762

#R> sd_X2|year 0.3194962 0.6472190

My intuition is that we would need a lot of levels to estimate the co-variance parameters. E.g., as we need a lot of observation in a pure fixed effect model to estimate the coefficients. 3 random effects relative to the 16 levels as in the above does seem quite high. However, I gather that the large amount of information from the cross section may have an effect?