I am not sure I understand the official documentation, which says:

> **Returns:** A pair `(outputs, state)` where:
>
> **outputs**: The RNN output `Tensor`.
>
> If `time_major == False` (default), this will be a `Tensor` shaped: `[batch_size, max_time, cell.output_size]`.
>
> If `time_major == True`, this will be a `Tensor` shaped: `[max_time, batch_size, cell.output_size]`.
>
> Note, if `cell.output_size` is a (possibly nested) tuple of integers or `TensorShape` objects, then `outputs` will be a tuple having the same structure as `cell.output_size`, containing Tensors having shapes corresponding to the shape data in `cell.output_size`.
>
> **state**: The final state. If `cell.state_size` is an int, this will be shaped `[batch_size, cell.state_size]`. If it is a `TensorShape`, this will be shaped `[batch_size] + cell.state_size`. If it is a (possibly nested) tuple of ints or `TensorShape`, this will be a tuple having the corresponding shapes. If cells are `LSTMCells`, `state` will be a tuple containing a `LSTMStateTuple` for each cell.
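To make the shape rules in that quote concrete, here is a small pure-Python sketch that only computes the shapes the text describes; it does not call TensorFlow. `expected_shapes` and its helpers are hypothetical names, and a plain list is used as an assumed stand-in for a `TensorShape`. The usage lines assume a vanilla RNN or GRU cell with `output_size == state_size == 8`, and an LSTM cell without projection whose state is a `(c, h)` pair of size 8 each.

```python
def _map_structure(fn, size):
    """Apply fn to every leaf of a (possibly nested) tuple of sizes."""
    if isinstance(size, tuple):
        return tuple(_map_structure(fn, s) for s in size)
    return fn(size)


def _as_dims(size):
    """int -> [size]; a list (assumed stand-in for a TensorShape) -> a copy."""
    return [size] if isinstance(size, int) else list(size)


def expected_shapes(batch_size, max_time, output_size, state_size,
                    time_major=False):
    """Hypothetical helper: shapes of (outputs, state) per the quoted docs."""
    lead = [max_time, batch_size] if time_major else [batch_size, max_time]
    outputs = _map_structure(lambda s: lead + _as_dims(s), output_size)
    # The final state has no time axis: one entry per batch element.
    state = _map_structure(lambda s: [batch_size] + _as_dims(s), state_size)
    return outputs, state


# Vanilla RNN / GRU: output_size == state_size == num_units.
print(expected_shapes(32, 10, 8, 8))                   # ([32, 10, 8], [32, 8])
print(expected_shapes(32, 10, 8, 8, time_major=True))  # ([10, 32, 8], [32, 8])
# LSTM: state is a (c, h) pair, each of size num_units.
print(expected_shapes(32, 10, 8, (8, 8)))  # ([32, 10, 8], ([32, 8], [32, 8]))
```

Note that `state` has no `max_time` axis, so for the RNN and GRU cases it has exactly the shape of a single time slice of `outputs`; for the LSTM the state is a pair, and at most one half of it can line up with the output.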

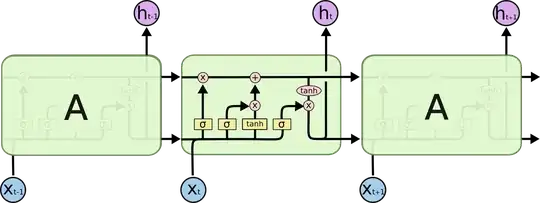

Is the output at the last time step (`outputs[:, -1, :]` with the default `time_major == False`, or `outputs[-1]` when `time_major == True`) always equal to `state`, the second element of the returned tuple, for all three cell types, i.e. RNN, GRU and LSTM? I suspect the literature everywhere is too liberal in its use of the term "hidden state". Is the hidden state, in all three cells, simply the value coming out of the cell? (Why it is called "hidden" is beyond me; it would seem that the cell state in an LSTM should be called the hidden state, since it is not exposed.)
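One way to test the question without TensorFlow is a minimal pure-Python sketch of textbook RNN, GRU and LSTM cells unrolled over a single unbatched sequence. This is a hypothetical reimplementation for illustration, not TensorFlow's code: biases, `forget_bias`, projections and batching are omitted, and the weights are random. Each cell returns `(output, new_state)`, the same pair an RNN cell's call returns.

```python
import math
import random


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def mul(a, b):
    return [x * y for x, y in zip(a, b)]


def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-t)) for t in v]


def tanh(v):
    return [math.tanh(t) for t in v]


def rand_mat(rng, rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def make_rnn(rng, D, H):
    W, U = rand_mat(rng, H, D), rand_mat(rng, H, H)

    def cell(x, h):
        h = tanh(add(matvec(W, x), matvec(U, h)))
        return h, h  # output and new state are the same vector
    return cell


def make_gru(rng, D, H):
    Wz, Wr, Wc = (rand_mat(rng, H, D) for _ in range(3))
    Uz, Ur, Uc = (rand_mat(rng, H, H) for _ in range(3))

    def cell(x, h):
        z = sigmoid(add(matvec(Wz, x), matvec(Uz, h)))  # update gate
        r = sigmoid(add(matvec(Wr, x), matvec(Ur, h)))  # reset gate
        cand = tanh(add(matvec(Wc, x), matvec(Uc, mul(r, h))))
        h = [zi * hi + (1 - zi) * ci for zi, hi, ci in zip(z, h, cand)]
        return h, h  # again, output == new state
    return cell


def make_lstm(rng, D, H):
    W = {k: rand_mat(rng, H, D) for k in "ifoc"}
    U = {k: rand_mat(rng, H, H) for k in "ifoc"}

    def pre(k, x, h):
        return add(matvec(W[k], x), matvec(U[k], h))

    def cell(x, state):
        c, h = state
        i, f, o = (sigmoid(pre(k, x, h)) for k in "ifo")  # gates
        cand = tanh(pre("c", x, h))
        c = add(mul(f, c), mul(i, cand))  # cell state: kept internal
        h = mul(o, tanh(c))               # hidden state: also the output
        return h, (c, h)
    return cell


def run(cell, xs, state):
    """Unroll a cell over the time steps xs; return (outputs, final state)."""
    outputs = []
    for x in xs:
        out, state = cell(x, state)
        outputs.append(out)
    return outputs, state


rng = random.Random(0)
D, H, T = 3, 4, 5
xs = [[rng.uniform(-1, 1) for _ in range(D)] for _ in range(T)]
zeros = [0.0] * H

rnn_out, rnn_state = run(make_rnn(rng, D, H), xs, zeros)
gru_out, gru_state = run(make_gru(rng, D, H), xs, zeros)
lstm_out, (lstm_c, lstm_h) = run(make_lstm(rng, D, H), xs, (zeros, zeros))

print(rnn_out[-1] == rnn_state)  # True
print(gru_out[-1] == gru_state)  # True
print(lstm_out[-1] == lstm_h)    # True: the output is h
print(lstm_out[-1] == lstm_c)    # False: c is never emitted
```

In this sketch the last output equals the final state for the RNN and GRU, and equals only the `h` half of `(c, h)` for the LSTM; `c` never appears in the outputs. One caveat in TensorFlow itself: when `sequence_length` is passed to `tf.nn.dynamic_rnn`, outputs past each sequence's length are zeroed while the state is copied through, so for shorter sequences the last time step of `outputs` will not match `state`.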