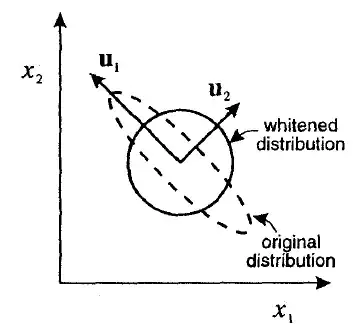

Given some whitening transform, we change some vectors $\textbf{x}$, where features are correlated, into some vector $\textbf{y}$, where components are uncorrelated. Then we run some learning algorithm on the transformed vectors $y$.

Why does this work? In the original space we had correlation between vector components, and it carried some information (correlation IS information, right?). Now, we whiten data and get a round blob as output. All information about correlation lost - to me seems like a large part of information lost. Wouldn't it be much easier to learn decision boundaries/distributions defined over correlated data (since ALL information present)? So, how does SVM (since in my practice this is the method that requires this most), for example, gives a better result with whitening?