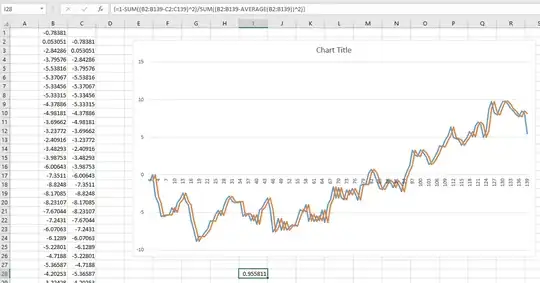

I recently came across a fascinating article about predicting future stock market returns. The author presents the graph below and cites an R^2 of 0.913. This would make the author's method far superior to anything I have ever seen on the subject (most argue that the stock market is unpredictable).

The author describes his method in great detail and provides substantial theory to back up the results. Then I read a second, critiquing article that referenced this paper: The Myth of Long-Horizon Predictability. Apparently people have been falling for this illusion for decades. Unfortunately, I don't really understand the paper.

This leads me to the following questions:

- Does the false confidence of long-term predictions arise due to using the same data set for both training and model validation? Would the problem go away if training and validation data were pulled from separate, non-overlapping time periods?

- Aside from validating on the training set, why does this problem become more pronounced over longer horizons?

- In general, how can I overcome this problem when training models that must make long-term predictions?