This bears some explaining:

I have a set of data from a psychophysics experiment where participants selected a response from a discrete set of 8 possible responses. These responses were actually colours, but they are equivalent to angles (taken from a circle in colour space), so the response set is effectively:

{pi/4, pi/2, 3*pi/4, pi, 5*pi/4, 3*pi/2, 7*pi/4, 2*pi}

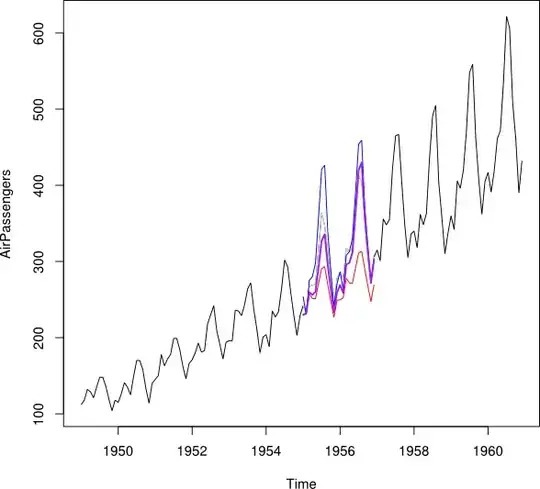

In my field (visual working memory), there are several competing models for fitting behavioural data like mine. However, most other tasks use a continuous report scheme that allows any of 360 unique colours (angles) to be selected. There is a common toolbox for testing the fit of such models, but it seems to work well only for continuous data. For example, when I try to fit a simple von Mises distribution I get results like this:

It seems to me that this fit doesn't capture the spread (variance/width) of my data. Am I doing something fundamentally wrong by trying to fit a continuous distribution to discrete responses? I'm not sure how to compare model performance on my data if these fitting methods only work for continuous values.
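For concreteness, here is a minimal Python sketch of the kind of fit I mean. It is not the toolbox's code: `fit_von_mises` is a name I made up, the concentration is estimated with a standard closed-form approximation rather than exact maximum likelihood, and the error counts are invented purely for illustration (they are not my data). The point is only that the inputs can take just 8 values, spaced pi/4 apart.

```python
import math

def fit_von_mises(angles):
    """Approximate fit of a von Mises distribution to angles in radians.

    Returns (mu, kappa): the circular mean and an approximate concentration.
    """
    n = len(angles)
    c = sum(math.cos(a) for a in angles) / n
    s = sum(math.sin(a) for a in angles) / n
    mu = math.atan2(s, c)   # circular mean direction
    r = math.hypot(c, s)    # mean resultant length, between 0 and 1
    if r >= 1.0:            # all responses identical: concentration is unbounded
        return mu, math.inf
    # Closed-form approximation to the maximum-likelihood kappa
    kappa = r * (2 - r**2) / (1 - r**2)
    return mu, kappa

# Hypothetical response errors (response minus target), in steps of pi/4.
# Keys are the number of steps away from the target, values are trial counts.
step = math.pi / 4
counts = {0: 60, 1: 12, -1: 12, 2: 4, -2: 4, 3: 2, -3: 2, 4: 4}
errors = [k * step for k, n in counts.items() for _ in range(n)]

mu, kappa = fit_von_mises(errors)
print(f"mu = {mu:.3f} rad, kappa = {kappa:.3f}")
```

The fitted density is continuous, while all of the probability mass in the data sits on 8 points, which I suspect is part of why the fitted width looks off.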

Somebody made an offhand comment recently that I could "try adding x degrees of Gaussian noise" to improve the fit. Is this a valid strategy? I have a weak statistical background (this is an undergraduate research project), and I'm not sure what to do.
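As far as I understand the suggestion, it would amount to jittering each discrete response before fitting, roughly as sketched below. This is my own interpretation: the function name `jitter` and the noise width are placeholders, and I don't know what value of x was intended.

```python
import math
import random

def jitter(angles, sd_deg, seed=0):
    """Add zero-mean Gaussian noise (SD given in degrees) to angles in radians,
    wrapping the result back onto the circle."""
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    sd = math.radians(sd_deg)
    return [(a + rng.gauss(0.0, sd)) % (2 * math.pi) for a in angles]

# The 8 possible responses, pi/4 apart
responses = [k * math.pi / 4 for k in range(1, 9)]

# Hypothetical noise width of 10 degrees; the right value (if any) is the open question
noisy = jitter(responses, sd_deg=10)
```

My worry is that the choice of noise width would then partly determine the fitted width, so I'm unsure whether the result would say anything about the data.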