I am just studying the basics of machine learning and had a question about the standardisation and normalisation of the features and its effectiveness.

I have read this CrossValidated question and this blog among many other articles but still was not clear on when I should apply which technique.

And to make things worse, in sklearn there is a StandardScaler, a MinMaxScaler, a MaxAbsScaler, RobustScaler, and Normalization.

So, I took the SECOM Manufacturing dataset and decided to run almost all the classification algorithms I could find with the data without scaling and by applying all these scaling methodologies and calculated the test accuracy.

What I got was not in line with most of the things I had read online.

For e.g.

Methods using Euclidean distance was supposed to be the most affected by scaling, but in fact, I saw the accuracy decrease when I scaled in the KNN algorithm.

Logistic Regression and SVM were algorithms which used Gradient Descent and they were also supposed to show an improvement, but could not really see that also, especially in SVM (or SVC, the classification variant)

Most of the algorithms shows RobustScaler to have lower accuracy and Normalization to have a higher accuracy.

Please below the results of the experiment I did. Can you please tell me if I am doing something wrong or interpreting this wrong?

Code I used

def benchmark_ml_algo_v2(df_features, df_target):

#Machine Learning Algorithm (MLA) Selection and initialization

MLA = [

#Ensemble Methods

AdaBoostClassifier(),

BaggingClassifier(),

ExtraTreesClassifier(),

GradientBoostingClassifier(),

RandomForestClassifier(n_estimators = 100),

xgb.XGBClassifier(),

lgb.LGBMClassifier(),

# CatBoostClassifier(),

#Gaussian Processes

GaussianProcessClassifier(),

#GLM

LogisticRegressionCV(),

PassiveAggressiveClassifier(max_iter=1000),

RidgeClassifierCV(),

SGDClassifier(max_iter= 1000),

Perceptron(max_iter=1000),

#Navies Bayes

GaussianNB(),

#Nearest Neighbor

KNeighborsClassifier(n_neighbors = 3),

#SVM

SVC(probability=True),

LinearSVC(),

#Trees

DecisionTreeClassifier(),

ExtraTreeClassifier(),

]

scalers = [

None,

MinMaxScaler(),

MaxAbsScaler(),

RobustScaler(),

Normalizer()

]

import time

#create table to compare MLA

MLA_columns = ['MLA Name','Scaler', 'MLA Test Accuracy','MLA Time']

MLA_compare = pd.DataFrame(columns = MLA_columns)

train_x, test_x, train_y, test_y = train_test_split(df_features, df_target,random_state = 40)

#index through MLA and save performance to table

row_index = 0

for alg in MLA:

print ("Running {}...".format(alg.__class__.__name__))

for scaler in scalers:

#set name and parameters

MLA_compare.loc[row_index, 'MLA Name'] = alg.__class__.__name__

MLA_compare.loc[row_index, 'Scaler'] = scaler.__class__.__name__

start = time.clock()

#initialize scaler

if scaler:

#score model with cross validation: http://scikit-learn.org/stable/modules/generated/sklearn.model_selection.cross_validate.html#sklearn.model_selection.cross_validate

alg.fit(X=scaler.fit_transform(train_x), y=train_y.values.ravel())

else:

#score model with cross validation: http://scikit-learn.org/stable/modules/generated/sklearn.model_selection.cross_validate.html#sklearn.model_selection.cross_validate

alg.fit(X=train_x, y=train_y.values.ravel())

MLA_compare.loc[row_index, 'MLA Time'] = time.clock() - start

if scaler:

MLA_compare.loc[row_index, 'MLA Test Accuracy'] = alg.score(X=scaler.fit_transform(test_x), y=test_y)

else:

MLA_compare.loc[row_index, 'MLA Test Accuracy'] = alg.score(X=test_x, y=test_y)

row_index+=1

#print and sort table: https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.sort_values.html

return MLA_compare.sort_values(by = ['MLA Test Accuracy'], ascending = False)

#print(MLA_compare)

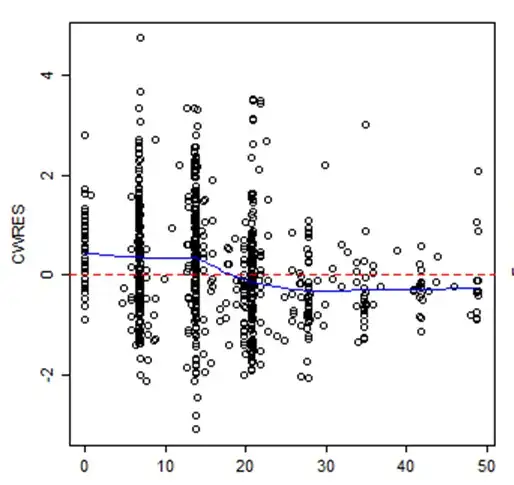

Edit: 15, Jan, 2018

On request, I re-did the experiment with 10 tries and 10 random splits and took the mean accuracy for the comparison. Below is the result.