Apart from RNNs, what other approaches are there when it comes to abstractive text summarization?

There are many other systems, I copy below some references to them:

From {1}:

Unfortunately, abstractive summarization is

known to be difficult. Existing work in abstractive

summarization has been quite limited and can be

categorized into two categories: (1) approaches using

prior knowledge (Radev and McKeown, 1998)

(Finley and Harabagiu, 2002) (DeJong, 1982) and

(2) approaches using Natural Language Generation

(NLG) systems (Saggion and Lapalme, 2002)

(Jing and McKeown, 2000). The first line of work

requires considerable amount of manual effort to

define schemas such as frames and templates that

can be filled with the use of information extraction

techniques. These systems were mainly used to

summarize news articles. The second category of

work uses deeper NLP analysis with special techniques

for text regeneration. Both approaches either

heavily rely on manual effort or are domain

dependent.

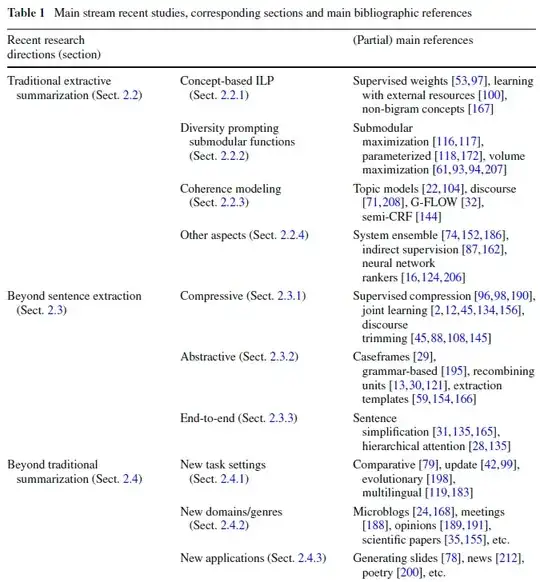

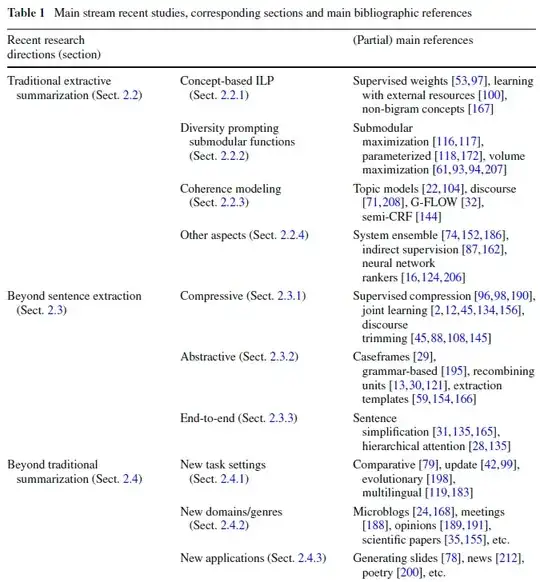

You may also want to have at section 2.3.2 Toward full abstraction and table 1 from {2}:

2.3.2 Toward full abstraction

Fully abstractive summarization attempts to understand the input and generate the summary

from scratch, usually including sentences or phrases that may not appear in the original document.

It actually involves multiple subproblems, each of its own can be made a relatively

independent research topic, including: simplification, paraphrasing, merging or fusion. Cheung

and Penn [29] conduct a series of studies comparing human-written model summaries to

system summaries at the semantic level of caseframes, which are shallow approximations of

the semantic role structure of a proposition-bearing unit like a verb and are derived from the

dependency parse of a sentence. They find that human summaries are: (1) more abstractive,

using more aggregation, (2) contain less caseframes and (3) cannot be reconstructed solely

from original documents but are able to if in-domain documents are added.

Due to the inherent difficulty and complexity of full abstraction, current research in abstractive

document summarizationmostly restricts in one or a fewof the subproblems. It is also less

active compared with compressive summarization, since merely considering compressions

has already boosted system performance, as discussed in the last section.9

Woodsend and Lapata [195] propose a model that allows paraphrases induced from a

quasi-synchronous tree substitution grammar (QTSG) to be selected in the final ILP model

covering content selection, surface realization, paraphrasing and stylistic conventions. For

document summarization that involves paraphrasing and fusing multiple sentences simultaneously,

other than grammar-based rewriting, one simpler more typical approach is to merge

information contained in sub-sentence-level units. For instance, one can cluster sentences,

build word graphs and generate (shortest) paths from each cluster to produce candidates for

making up a summary [6,51]. More sophisticated treatments can also be built on syntactic or

semantic analysis. One may build sentences via merging consistent noun phrases and verb

phrases [13] or linearizing graph-based semantic units derived from semantic formalisms

such as abstract meaning representation (AMR) [121].

There also exist psychologically motivated studies [48] trying to implement cognitive

human comprehension models based on propositions, which are elements extracted from an

original sentence, each containing one functor and several arguments. Propositions form a

tree where a proposition is attached to another proposition with which they share at least one

argument. Summaries are then generated from selected important propositions. Currently

the systems have mostly been evaluated on over-specific datasets and rely heavily on various

components including parsing, coreference resolution, distributional semantics, lexical

chains [49] and natural language generation from semantic graphs [50

In order to better guide alignment and merging processes, supervised learning-basedmethods

have been investigated [46,178]. A later work [30] expands the sentence fusion process

with external resources beyond the input sentences by combining the subtrees of many sentences,

allowing for relevant information from sentences that are not similar to the original

input sentences to be added during fusion.

Abstractive summarization has also been studied in information extraction (IE) perspective,

for example, IE-informed metrics have also been shown to be useful to rerank the output

of high performing baseline summarization systems [83]. In the context of guided summarization

where predefined categories and readers’ intent have been predefined, preliminary

full abstraction can be achieved by extracting templates using predefined rules for different

types of events [59,166].

In order to better guide alignment and merging processes, supervised learning-basedmethods

have been investigated [46,178]. A later work [30] expands the sentence fusion process

with external resources beyond the input sentences by combining the subtrees of many sentences,

allowing for relevant information from sentences that are not similar to the original

input sentences to be added during fusion.

Abstractive summarization has also been studied in information extraction (IE) perspective,

for example, IE-informed metrics have also been shown to be useful to rerank the output

of high performing baseline summarization systems [83]. In the context of guided summarization

where predefined categories and readers’ intent have been predefined, preliminary

full abstraction can be achieved by extracting templates using predefined rules for different

types of events [59,166].

References:

- {1} Ganesan, Kavita, ChengXiang Zhai, and Jiawei Han. "Opinosis: a graph-based approach to abstractive summarization of highly redundant opinions." In Proceedings of the 23rd international conference on computational linguistics, pp. 340-348. Association for Computational Linguistics, 2010.

Harvard ; http://www.anthology.aclweb.org/C/C10/C10-1039.pdf ; https://scholar.google.com/scholar?cluster=17683373799863050123&hl=en&as_sdt=0,5

- {2} Yao, Jin-ge, Xiaojun Wan, and Jianguo Xiao. "Recent advances in document summarization." Knowledge and Information Systems (2017): 1-40. https://scholar.google.com/scholar?cluster=16155547405797487545&hl=en&as_sdt=0,5