Suppose we have a set of points $\mathbf{y} = \{y_1, y_2, \ldots, y_N \}$. Each point $y_i$ is generated using distribution $$ p(y_i| x) = \frac12 \mathcal{N}(x, 1) + \frac12 \mathcal{N}(0, 10). $$ To obtain posterior for $x$ we write $$ p(x| \mathbf{y}) \propto p(\mathbf{y}| x) p(x) = p(x) \prod_{i = 1}^N p(y_i | x). $$ According to Minka's paper on Expectation Propagation we need $2^N$ calculations to obtain posterior $p(x| \mathbf{y})$ and, so, problem becomes intractable for large sample sizes $N$. However, I can't figure out why do we need such amount of calculations in this case, because for single $y_i$ likelihood has the form $$ p(y_i| x) = \frac{1}{2 \sqrt{2 \pi}} \left( \exp \left\{-\frac12 (y_i - x)^2\right\} + \frac{1}{\sqrt{10}} \exp \left\{-\frac1{20} y_i^2\right\} \right). $$

Using this formula we obtain posterior by simple multiplication of $p(y_i| x)$, so we need only $N$ operations, and, so we can solve this problem for large sample sizes exactly.

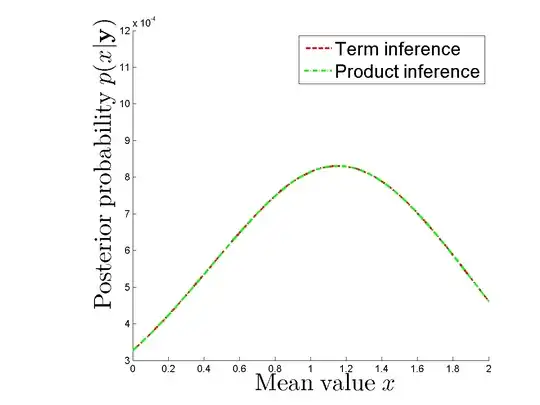

I make numerical experiment to compare do I really obtain the same posterior in case I calculate each term separately and in case I use product of densities for each $y_i$. Posteriors are same. See

Where am I wrong? Can anyone make it clear to me why do we need $2^N$ operations to calculate posterior for given $x$ and sample $\mathbf{y}$?

Where am I wrong? Can anyone make it clear to me why do we need $2^N$ operations to calculate posterior for given $x$ and sample $\mathbf{y}$?