Note: I edited this question on 1/1/2018 because of the comments on the original question, so some comments relate to the earlier version. It is closed as a duplicate, but I disagree with that.

For a bivariate normal distribution with mean $(\mu_X, \mu_Y)$, variances $\sigma_X^2, \sigma_Y^2$ and correlation $\rho$, it holds (see e.g. http://athenasc.com/Bivariate-Normal.pdf, https://math.stackexchange.com/questions/33993/bivariate-normal-conditional-variance) that

$$\Bbb E(Y\mid{X=x})=\mu_Y + \rho \frac{\sigma_Y}{\sigma_X}(x-\mu_X)$$

By symmetry I would say that (is this correct?)

$$\Bbb E(X\mid{Y=y})=\mu_X + \rho \frac{\sigma_X}{\sigma_Y}(y-\mu_Y)$$
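The symmetry argument can be cross-checked against the general conditioning rule for a jointly normal pair, $\Bbb E(Z_t\mid Z_g=z)=\mu_t+\frac{\operatorname{Cov}(Z_t,Z_g)}{\operatorname{Var}(Z_g)}(z-\mu_g)$, which treats $X$ and $Y$ the same way. With $\operatorname{Cov}(X,Y)=\rho\sigma_X\sigma_Y$, swapping the roles gives the slope $\rho\sigma_X\sigma_Y/\sigma_Y^2=\rho\sigma_X/\sigma_Y$, i.e. the second formula. Below is a minimal NumPy sketch of that check; the helper name `conditional_mean` and the numeric parameters are illustrative assumptions, not taken from the question.

```python
import numpy as np

def conditional_mean(mu, cov, target, given, value):
    """E[Z_target | Z_given = value] for a 2-D normal vector Z = (X, Y).

    Hypothetical helper implementing the standard Gaussian conditioning rule
    mu_t + cov[t, g] / cov[g, g] * (value - mu_g).
    """
    mu = np.asarray(mu, dtype=float)
    cov = np.asarray(cov, dtype=float)
    return mu[target] + cov[target, given] / cov[given, given] * (value - mu[given])

# Example parameters (arbitrary, for illustration only)
mu_x, mu_y = 1.0, 2.0
sigma_x, sigma_y, rho = 2.0, 1.0, 0.6
mu = [mu_x, mu_y]
cov = [[sigma_x**2, rho * sigma_x * sigma_y],
       [rho * sigma_x * sigma_y, sigma_y**2]]

x, y = 3.0, 0.5
# E(Y | X = x): condition component 1 (Y) on component 0 (X)
e_y_given_x = conditional_mean(mu, cov, target=1, given=0, value=x)
# E(X | Y = y): the same rule with the roles of the components swapped
e_x_given_y = conditional_mean(mu, cov, target=0, given=1, value=y)

# Compare with the two closed-form expressions from the question
assert np.isclose(e_y_given_x, mu_y + rho * sigma_y / sigma_x * (x - mu_x))
assert np.isclose(e_x_given_y, mu_x + rho * sigma_x / sigma_y * (y - mu_y))
print(e_y_given_x, e_x_given_y)
```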

Next question:

These are two lines in the $(x,y)$ plane.

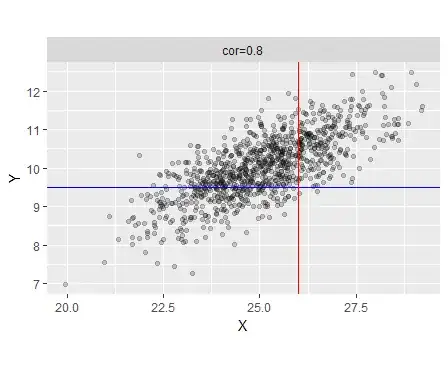

The picture above shows an example of a sample from such a bivariate normal distribution. Conditioning on $X$ means that I 'intersect' along the red lines; conditioning on $Y$ means 'intersecting' along the blue lines.
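The 'intersecting' picture can be imitated by simulation: draw a large sample, keep only the points in a thin strip around the line $x=x_0$ (or $y=y_0$), and average the other coordinate. This is a rough Monte Carlo sketch assuming NumPy; the parameters, strip half-width and sample size are arbitrary choices, and the strip average only approximates the conditional mean.

```python
import numpy as np

# Illustrative parameters (assumed, not from the question)
mu_x, mu_y = 1.0, 2.0
sigma_x, sigma_y, rho = 2.0, 1.0, 0.6
cov = [[sigma_x**2, rho * sigma_x * sigma_y],
       [rho * sigma_x * sigma_y, sigma_y**2]]

# Large sample from the bivariate normal (fixed seed for reproducibility)
rng = np.random.default_rng(0)
sample = rng.multivariate_normal([mu_x, mu_y], cov, size=1_000_000)
xs, ys = sample[:, 0], sample[:, 1]

half_width = 0.05  # half-width of the strip used to approximate "X = x0" or "Y = y0"

# Condition on X: average Y over the points in a thin strip around the vertical line x = x0
x0 = 2.0
mc_y_given_x = ys[np.abs(xs - x0) < half_width].mean()
theory_y_given_x = mu_y + rho * sigma_y / sigma_x * (x0 - mu_x)

# Condition on Y: average X over the points in a thin strip around the horizontal line y = y0
y0 = 2.5
mc_x_given_y = xs[np.abs(ys - y0) < half_width].mean()
theory_x_given_y = mu_x + rho * sigma_x / sigma_y * (y0 - mu_y)

print(f"E(Y|X={x0}): simulated {mc_y_given_x:.3f}, formula {theory_y_given_x:.3f}")
print(f"E(X|Y={y0}): simulated {mc_x_given_y:.3f}, formula {theory_x_given_y:.3f}")
```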

The contours of constant density for a bivariate normal distribution are (rotated) ellipses.

Note that the rotation angle of the principal axes with respect to the $x$-axis depends on the correlation $\rho$, so (contrary to what @whuber says in his comments below) correlation is relevant here: as argued by @MichaelHardy, there are values of $\rho$ for which these lines coincide with the principal axes.
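The dependence of the principal axes on $\rho$ can be made explicit: the axes are the eigenvectors of the covariance matrix $\begin{pmatrix}\sigma_X^2 & \rho\sigma_X\sigma_Y\\ \rho\sigma_X\sigma_Y & \sigma_Y^2\end{pmatrix}$, and the major axis makes an angle $\theta$ with the $x$-axis satisfying $\tan 2\theta = \frac{2\rho\sigma_X\sigma_Y}{\sigma_X^2-\sigma_Y^2}$, so it depends on $\sigma_X$ and $\sigma_Y$ as well as on $\rho$. A small NumPy sketch comparing the eigenvector angle with this closed form; the function names and parameter values are illustrative assumptions.

```python
import numpy as np

def major_axis_angle(sigma_x, sigma_y, rho):
    """Angle (degrees, in (-90, 90]) between the x-axis and the major axis
    of the density contours, taken from the leading eigenvector of the covariance."""
    cov = np.array([[sigma_x**2, rho * sigma_x * sigma_y],
                    [rho * sigma_x * sigma_y, sigma_y**2]])
    _, eigvecs = np.linalg.eigh(cov)   # eigenvalues come back in ascending order
    vx, vy = eigvecs[:, -1]            # eigenvector of the largest eigenvalue
    angle = np.degrees(np.arctan2(vy, vx))
    # An axis has no direction, so fold the angle into (-90, 90]
    if angle <= -90:
        angle += 180
    elif angle > 90:
        angle -= 180
    return angle

def closed_form_angle(sigma_x, sigma_y, rho):
    """Same angle from tan(2*theta) = 2*rho*sx*sy / (sx^2 - sy^2)."""
    return 0.5 * np.degrees(np.arctan2(2 * rho * sigma_x * sigma_y,
                                       sigma_x**2 - sigma_y**2))

# With sigma_x = 2 and sigma_y = 1 fixed, only rho changes the tilt
for rho in (-0.8, -0.3, 0.0, 0.3, 0.8):
    print(rho, round(major_axis_angle(2.0, 1.0, rho), 2),
          round(closed_form_angle(2.0, 1.0, rho), 2))
```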

My question is whether the two lines of the conditional means correspond to (one of) the principal axes of these ellipses and, if not, how this can be explained geometrically.
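One way to explore this numerically: all three lines pass through $(\mu_X,\mu_Y)$, so it is enough to compare slopes in the $(x,y)$ plane. The line $y=\Bbb E(Y\mid X=x)$ has slope $\rho\sigma_Y/\sigma_X$; the line $x=\Bbb E(X\mid Y=y)$, solved for $y$, has slope $\sigma_Y/(\rho\sigma_X)$; and the major axis has the slope of the leading eigenvector of the covariance matrix. For $0<|\rho|<1$ the first two slopes differ (their ratio is $\rho^2$), so the two conditional-mean lines cannot both be the major axis. The sketch below (NumPy assumed; the helper `slopes` and the parameter sets are hypothetical) prints the three slopes; in the cases tried, the major-axis slope lies strictly between the other two.

```python
import numpy as np

def slopes(sigma_x, sigma_y, rho):
    """Slopes (dy/dx) in the (x, y) plane of the two conditional-mean lines
    and of the major axis of the density ellipses. Assumes rho != 0."""
    # Line y = E(Y | X = x)
    slope_y_on_x = rho * sigma_y / sigma_x
    # Line x = E(X | Y = y), rewritten as y in terms of x
    slope_x_on_y = sigma_y / (rho * sigma_x)
    # Major axis: eigenvector of the largest eigenvalue of the covariance matrix
    cov = np.array([[sigma_x**2, rho * sigma_x * sigma_y],
                    [rho * sigma_x * sigma_y, sigma_y**2]])
    _, eigvecs = np.linalg.eigh(cov)
    vx, vy = eigvecs[:, -1]
    slope_major = vy / vx  # vx != 0 because rho != 0
    return slope_y_on_x, slope_major, slope_x_on_y

for sx, sy, rho in [(2.0, 1.0, 0.6), (1.0, 1.0, 0.5), (1.0, 3.0, 0.9), (2.0, 1.0, -0.6)]:
    print(sx, sy, rho, [round(s, 3) for s in slopes(sx, sy, rho)])
```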

Note: this question is not answered by @DilipSarwate's answer (which I fully agree with) to Effect of switching response and explanatory variable in simple linear regression, because he uses OLS (i.e. regression techniques). The formulas above follow from a theoretical property of the bivariate (multivariate) normal distribution, namely that all of its conditional distributions are also normal (see http://athenasc.com/Bivariate-Normal.pdf); there is no need to refer to regression or OLS to show that.