Your Option 1 may not be the best way to go; if you want to use multiple binary classifiers, try a strategy called One-vs-All.

In One-vs-All you essentially train one expert binary classifier per class, each really good at recognizing its own pattern against all the others, and a common implementation strategy is a cascade. For example:

- If classifierNone says the sample is None, you are done.

- Else, if classifierThumbsUp says it is ThumbsUp, you are done.

- Else, if classifierClenchedFist says it is ClenchedFist, you are done.

- Else, it must be AllFingersExtended, and you are done.
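The cascade above can be sketched in Python as follows. This is a minimal sketch under loud assumptions: the three expert classifiers are hypothetical stand-in functions using hand-written rules on a hypothetical feature dict (`hand_detected`, `extended_fingers`, `thumb_up`); in practice each one would be a trained binary model.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical feature representation of one hand image.
Features = Dict[str, object]
# An "expert" answers a single yes/no question: is x my class?
Expert = Callable[[Features], bool]


# Hypothetical stand-ins for trained binary classifiers (hand-written rules).
def classifier_none(x: Features) -> bool:
    return not x["hand_detected"]


def classifier_thumbs_up(x: Features) -> bool:
    return bool(x["thumb_up"]) and x["extended_fingers"] == 1


def classifier_clenched_fist(x: Features) -> bool:
    return x["extended_fingers"] == 0


# Order matters: the first expert that says "yes" decides the label.
STAGES: List[Tuple[str, Expert]] = [
    ("None", classifier_none),
    ("ThumbsUp", classifier_thumbs_up),
    ("ClenchedFist", classifier_clenched_fist),
]
FALLBACK = "AllFingersExtended"  # reached only if every expert says "no"


def cascade_predict(x: Features) -> str:
    for label, is_class in STAGES:
        if is_class(x):
            return label
    return FALLBACK


print(cascade_predict({"hand_detected": True, "extended_fingers": 5, "thumb_up": False}))
# prints "AllFingersExtended"
```

Because the first "yes" wins, a false positive from an early expert (e.g. classifierNone) can never be corrected by the later ones, so it may be worth putting the most reliable classifiers first.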

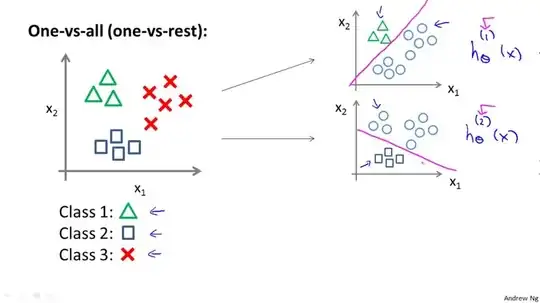

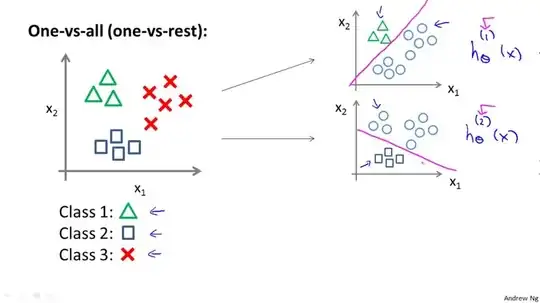

Here is a graphical explanation of One-vs-all from Andrew Ng's course:
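In Andrew Ng's course the binary classifiers are not cascaded: every classifier h_i(x) is evaluated on the input, and the prediction is the class i that maximizes h_i(x). A minimal sketch of that decision rule, assuming hypothetical 2-D features and hand-picked logistic-regression weights in place of trained models:

```python
import math
from typing import Dict, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical hand-picked (weights, bias) per class, standing in for one
# trained logistic-regression classifier h_i(x) per class.
CLASSIFIERS: Dict[str, Tuple[Tuple[float, float], float]] = {
    "ThumbsUp": ((-1.0, -1.0), 2.5),
    "ClenchedFist": ((1.0, -1.0), -2.5),
    "AllFingersExtended": ((-1.0, 1.0), -2.5),
}


def scores(x: Sequence[float]) -> Dict[str, float]:
    """Run every binary classifier on x and return its estimate of P(y = label | x)."""
    return {
        label: sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        for label, (weights, bias) in CLASSIFIERS.items()
    }


def predict(x: Sequence[float]) -> str:
    """One-vs-All decision rule: pick the label whose classifier is most confident."""
    return max(scores(x).items(), key=lambda item: item[1])[0]


print(predict((5.0, 0.0)))  # prints "ClenchedFist"
```

Unlike the cascade, this rule does not depend on the order of the classifiers, but it does assume their scores are comparable (e.g. calibrated probabilities).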

Multi-class classifiers pros and cons:

Pros:

- Easy to use out of the box

- Great when you have many classes

Cons:

- Usually slower than binary classifiers during training

- On high-dimensional problems they can take a long time to converge

Popular methods:

- Neural networks

- Tree-based algorithms

One-vs-All classifiers pros and cons:

Pros:

- Since they use binary classifiers, they are usually faster to converge

- Great when you have a handful of classes

Cons:

- Annoying to deal with when you have many classes, since you need to train and maintain one binary classifier per class

- You need to be careful during training to avoid class imbalances that introduce bias, e.g., if you have 1000 samples of the none class and 3000 samples of the thumbs_up class.
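One common way to reduce that bias is to weight each training sample inversely to its class frequency. The sketch below applies the n_samples / (n_classes * n_samples_in_class) heuristic (the formula behind scikit-learn's `class_weight="balanced"` option) to the example counts; the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def balanced_class_weights(labels: Iterable[str]) -> Dict[str, float]:
    """Weight each class by n_samples / (n_classes * n_samples_in_class)."""
    counts = Counter(labels)
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


labels = ["none"] * 1000 + ["thumbs_up"] * 3000
weights = balanced_class_weights(labels)
# Expected: none -> 4000 / (2 * 1000) = 2.0, thumbs_up -> 4000 / (2 * 3000) ≈ 0.667,
# so both classes contribute roughly the same total weight (about 2000) to the loss.
print(weights)
```

These weights can then be passed to whatever per-sample or per-class weighting your training library supports; oversampling the minority class or undersampling the majority class is the other common option.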

Popular methods:

- SVMs

- Most ensemble methods

- Tree-based algorithms