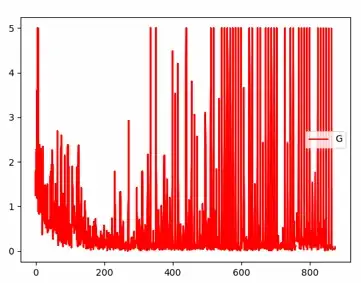

I am using deep reinforcement learning to solve a transportation-related problem. During training I plot the gradient and the loss. The gradient first converges and then explodes, while the loss never converges at any point. What could cause this behavior?

The first graph shows the gradient of one of the outputs. The second graph shows the cumulative reward for each sample (red curve) and the corresponding loss (blue curve).
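To make the "converges, then explodes" pattern easier to pin down, here is a minimal sketch of how a gradient trace could be summarized and scanned for blow-ups. It is not my actual training code: the function names `global_grad_norm` and `find_blowups`, the `window` and `factor` parameters, and the sample numbers are all hypothetical, and the gradients are assumed to be available as plain lists of floats per layer.

```python
import math


def global_grad_norm(grads):
    """L2 norm over every gradient entry.

    `grads` is assumed to be a list of flat lists of floats, one per layer.
    """
    return math.sqrt(sum(g * g for layer in grads for g in layer))


def find_blowups(norms, window=3, factor=10.0):
    """Return the step indices whose norm exceeds `factor` times the
    mean of the previous `window` norms (both thresholds are arbitrary)."""
    flagged = []
    for i in range(window, len(norms)):
        baseline = sum(norms[i - window:i]) / window
        if baseline > 0 and norms[i] > factor * baseline:
            flagged.append(i)
    return flagged


# Hypothetical per-step gradient norms: steady for a while, then a spike.
norms = [1.0, 1.0, 1.0, 1.0, 50.0, 1.0]
print(find_blowups(norms))
```

Logging one scalar per training step this way, rather than a single output's gradient, would show whether the explosion affects the whole network or only part of it.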