This response isn't an answer to all of your questions, but it does address some of them. First, while heuristics for the relative importance of features in ML are still in their infancy, this is not the case with respect to classic multivariate statistical modeling. For confirmation, browse Ulrike Groemping's several papers and her R package, relaimpo, for an exhaustive review of the literature on relative variable importance, e.g., here: https://cran.r-project.org/web/packages/relaimpo/relaimpo.pdf. Her work is enormously informative about the meaning and measurement of relative variable importance and should serve as the authoritative benchmark for any comparable ML approach.
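To make "relative importance" concrete for a linear model, here is a minimal Python sketch of the LMG metric, one of the metrics relaimpo offers: each feature's share of R² is its incremental R², averaged over every order in which the features can enter the model. This is a brute-force illustration written with NumPy, not relaimpo's implementation; the function names are hypothetical and the p! loop is only practical for a handful of features.

```python
import itertools
import math
import numpy as np

def r_squared(X, y, cols):
    """R^2 of an OLS fit of y on an intercept plus the columns listed in `cols`."""
    n = len(y)
    design = np.column_stack([np.ones(n)] + [X[:, c] for c in cols])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)

def lmg_importance(X, y):
    """LMG shares: increase in R^2 from adding feature j, averaged over all p! orderings."""
    p = X.shape[1]
    shares = np.zeros(p)
    for order in itertools.permutations(range(p)):
        included, r2_prev = [], 0.0          # intercept-only model has R^2 = 0
        for j in order:
            included.append(j)
            r2_new = r_squared(X, y, included)
            shares[j] += r2_new - r2_prev
            r2_prev = r2_new
    return shares / math.factorial(p)

# Simulated example: x2 is correlated with x1, x3 is irrelevant.
rng = np.random.default_rng(0)
n = 500
x1 = rng.normal(size=n)
x2 = 0.7 * x1 + rng.normal(size=n)
x3 = rng.normal(size=n)
X = np.column_stack([x1, x2, x3])
y = 2.0 * x1 + 1.0 * x2 + rng.normal(size=n)
shares = lmg_importance(X, y)
```

Because the increments telescope within each ordering, the shares always sum to the full model's R², which is what makes LMG a decomposition rather than just a ranking.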

As the OP notes, Breiman's 2001 paper "Random Forests" (https://www.stat.berkeley.edu/users/breiman/randomforest2001.pdf) was one of the first proposals for relative feature importance in the context of ML, and it remains one of the best and most workable. One useful point: while RFs were originally designed around CART trees, they are by no means limited to CART. Any multivariate engine (e.g., logistic regression, OLS regression, econometric panel data models) can be plugged into an RF-style ensemble or, more generally, into a divide-and-conquer algorithm.
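The point that the base engine is swappable can be sketched as follows: a bagged ensemble whose base learner is just a fit function (OLS here, as an assumed stand-in for any engine), with Breiman-style importance measured as the rise in out-of-bag MSE when one feature's out-of-bag values are shuffled. This is a minimal NumPy sketch with hypothetical function names; it omits RF's random feature subsetting, so it is bagging plus permutation importance rather than a full random forest.

```python
import numpy as np

def fit_ols(X, y):
    """Base engine: OLS with intercept. Returns a predict function; any engine could be swapped in."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return lambda X_new: np.column_stack([np.ones(len(X_new)), X_new]) @ beta

def bagged_permutation_importance(X, y, fit=fit_ols, n_bags=50, seed=0):
    """Average rise in out-of-bag MSE when each feature is permuted, over bootstrap bags."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    increases = np.zeros(p)
    for _ in range(n_bags):
        idx = rng.integers(0, n, size=n)            # bootstrap sample (with replacement)
        oob = np.setdiff1d(np.arange(n), idx)       # rows not drawn in this bag
        predict = fit(X[idx], y[idx])
        X_oob, y_oob = X[oob], y[oob]
        base_mse = np.mean((y_oob - predict(X_oob)) ** 2)
        for j in range(p):
            X_perm = X_oob.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])   # break feature j's link to y
            increases[j] += np.mean((y_oob - predict(X_perm)) ** 2) - base_mse
    return increases / n_bags

rng = np.random.default_rng(1)
X = rng.normal(size=(400, 3))
y = 3.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(size=400)   # column 2 is pure noise
imp = bagged_permutation_importance(X, y)
```

Passing a different `fit` (e.g., a logistic or panel-model wrapper returning a predict function) leaves the importance logic unchanged, which is the sense in which the RF heuristic is engine-agnostic.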

Another, more recent reference proposing a possible solution to relative feature importance is Sirignano, Sadhwani and Giesecke's paper "Deep Learning for Mortgage Risk" (slides here: https://bfi.uchicago.edu/sites/default/files/file_uploads/Slides%20Giesecke.pdf). I'd like to avoid getting too deeply into the weeds of their data and methodology; to the authors' credit, the explanations in their paper are clear and cogent, so there is no need to rehearse them in this short response. It's enough to note that their dataset is massive: roughly 2 TB of data spanning ~70% of US residential mortgages between 1995 and 2014, i.e., 120 million individual loans expanding to ~3.5 billion monthly observations. Because this much data is far too large to estimate on a single machine, they use a widely used divide-and-conquer workaround, creating millions of randomly drawn, bootstrapped "sequences of blocks of data" that allow their model(s) to be estimated on a parallel, GPU-equipped AWS machine.
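The general idea of training on randomly drawn blocks rather than the full dataset can be sketched as a generator that yields row indices built from contiguous blocks sampled with replacement. This is a hypothetical illustration of block sampling, not the authors' pipeline; in practice the array would likely be memory-mapped (e.g., `np.load(path, mmap_mode="r")`) so that only the drawn blocks are read from disk.

```python
import numpy as np

def random_block_batches(n_rows, block_size, blocks_per_batch, n_batches, seed=0):
    """Yield index arrays, each made of `blocks_per_batch` contiguous blocks of rows
    drawn uniformly with replacement (a trailing partial block is ignored)."""
    rng = np.random.default_rng(seed)
    n_blocks = n_rows // block_size
    for _ in range(n_batches):
        starts = rng.integers(0, n_blocks, size=blocks_per_batch) * block_size
        yield np.concatenate([np.arange(s, s + block_size) for s in starts])

# Small stand-in for a huge (e.g., memory-mapped) array of observations.
data = np.arange(10_000 * 4, dtype=float).reshape(10_000, 4)
batches = list(random_block_batches(len(data), block_size=100, blocks_per_batch=8, n_batches=5))
first = data[batches[0]]
```

Note that drawing blocks with replacement, as here, still treats the blocks as exchangeable; it does nothing by itself to respect the dependence structure discussed below.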

Their approach is based on an ensemble of NNs, with relative feature importance evaluated using leave-one-out cross-validation (LOOCV). However, as the authors note, their heuristic is subject to unmeasured sources of bias such as collinearity. That said, the problems with using ML, and NN models specifically, for descriptive purposes run deeper than collinearity alone.
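The collinearity problem with leave-one-out style importance is easy to reproduce. The sketch below uses a generic drop-one-feature heuristic (refit without feature j, measure the rise in held-out MSE) with OLS standing in for the NN ensemble; it is an assumed simplification, not the authors' implementation. When two features are near-copies, dropping either one costs almost nothing, so both look unimportant even though the information they share matters as much as x3.

```python
import numpy as np

def ols_fit_predict(X_train, y_train, X_test):
    """Fit OLS with intercept on the training split and predict the test split."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    beta, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return np.column_stack([np.ones(len(X_test)), X_test]) @ beta

def drop_one_importance(X_train, y_train, X_test, y_test):
    """Increase in held-out MSE when each feature is removed and the model is refitted."""
    full_mse = np.mean((y_test - ols_fit_predict(X_train, y_train, X_test)) ** 2)
    p = X_train.shape[1]
    out = []
    for j in range(p):
        keep = [k for k in range(p) if k != j]
        pred = ols_fit_predict(X_train[:, keep], y_train, X_test[:, keep])
        out.append(np.mean((y_test - pred) ** 2) - full_mse)
    return np.array(out)

rng = np.random.default_rng(2)
n = 2000
x1 = rng.normal(size=n)
x2 = x1 + 0.05 * rng.normal(size=n)     # near-copy of x1 (collinear)
x3 = rng.normal(size=n)
X = np.column_stack([x1, x2, x3])
y = x1 + x3 + rng.normal(size=n)        # x1 and x3 matter equally
imp = drop_one_importance(X[:1000], y[:1000], X[1000:], y[1000:])
```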

There are two key caveats to their approach. Taken together, they greatly limit the potential for evaluating relative feature importance in the context of ML models:

1) They treat the ~3.5 billion records as iid and randomly draw bootstrapped samples on that assumption.

2) Their NN ensemble requires every feature to be encoded in one of three ways: continuous, dummy (0,1) or effect coded (1,0,-1). In other words, even a simple multilevel categorical feature such as 'state' must be decomposed into 50 dummy (0,1) variables.
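For readers unfamiliar with the two categorical encodings mentioned in caveat 2, here is a small NumPy sketch (hypothetical helper names; the three state codes are just an illustrative slice). With an intercept, one level serves as the reference, so 50 states become 49 columns; full one-hot encoding keeps all 50. Effect coding differs only in that the reference level is coded -1 in every column, so coefficients become deviations from the grand mean.

```python
import numpy as np

def dummy_code(values, levels):
    """0/1 indicator columns, one per non-reference level (levels[0] is the reference)."""
    values = np.asarray(values)
    return np.column_stack([(values == lev).astype(float) for lev in levels[1:]])

def effect_code(values, levels):
    """Effect (deviation) coding: dummy coding, but reference-level rows are -1 in every column."""
    D = dummy_code(values, levels)
    D[np.asarray(values) == levels[0], :] = -1.0
    return D

levels = ["AK", "AL", "AR"]          # hypothetical 3-level slice of a 'state' feature
values = ["AL", "AK", "AR", "AL"]
D = dummy_code(values, levels)       # rows: AL, AK (reference), AR, AL
E = effect_code(values, levels)
```

Either way, a single 'state' factor is spread across many separate columns, which is exactly what makes per-column importance hard to interpret.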

First, treating all ~3.5 billion records as iid ignores the many dependencies and hierarchical relationships inherent in their data: classic time series issues such as autocorrelation, cointegration and stationarity; individual loans nested within zip codes, zip codes within counties, both within states; and more. In short, while treating these observations as iid may be computationally efficient and may produce useful (accurate) predictions, it destroys variance structures that are essential to understanding the data for descriptive purposes.
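A small simulation shows what "destroying variance structures" means in practice. With observations nested in groups that share a common shock (think loans within a state; the group and noise sizes below are arbitrary assumptions), an iid bootstrap of the mean reports a standard error several times smaller than a cluster bootstrap that resamples whole groups. This is a single-level NumPy sketch; real mortgage data would need the full hierarchy and time dependence.

```python
import numpy as np

rng = np.random.default_rng(3)
n_groups, per_group = 30, 50
group_effect = rng.normal(0.0, 1.0, size=n_groups)     # shared shock per group
groups = np.repeat(np.arange(n_groups), per_group)
y = group_effect[groups] + rng.normal(0.0, 0.2, size=groups.size)

def iid_bootstrap_se(y, n_boot=2000, seed=0):
    """SE of the mean when every row is (wrongly) treated as independent."""
    r = np.random.default_rng(seed)
    means = [y[r.integers(0, y.size, y.size)].mean() for _ in range(n_boot)]
    return np.std(means)

def cluster_bootstrap_se(y, groups, n_boot=2000, seed=0):
    """SE of the mean when whole groups are resampled, preserving within-group dependence."""
    r = np.random.default_rng(seed)
    ids = np.unique(groups)
    members = [np.flatnonzero(groups == g) for g in ids]
    means = []
    for _ in range(n_boot):
        drawn = r.integers(0, ids.size, ids.size)
        means.append(y[np.concatenate([members[k] for k in drawn])].mean())
    return np.std(means)

se_iid = iid_bootstrap_se(y)
se_cluster = cluster_bootstrap_se(y, groups)
```

The iid version behaves as if there were 1,500 independent observations when there are effectively closer to 30, so any inference built on it is overconfident.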

Next, requiring that even small multilevel categorical features such as 'state' be blown out into 50 dummy variables also destroys variance. The relevant comparison is R. A. Fisher's creation of ANOVA nearly 100 years ago and his treatment of a block of categorical levels, a 'factor' such as soil type, as a single independent variable. The issue becomes even worse for massively categorical features such as zip code, which in the context of NNs means creating 30,000+ dummy variables. Decomposing categorical information into a series of 0/1 dummies disperses a tremendous amount of valuable information across a string of individually near-irrelevant variables: hardly anyone cares about the impact of a few zip codes on a model, yet the same analyst should care about a composite, ANOVA-type factor summarizing zip code's impact across all 30,000+ levels.
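The ANOVA-type alternative can be sketched as a single joint F-test (and partial R²) for the whole block of dummies, comparing a model with and without the factor. The simulated 40-level factor below is a hypothetical stand-in for zip code; the code uses only NumPy (a p-value could be added with `scipy.stats.f.sf`, omitted here to keep the sketch dependency-light).

```python
import numpy as np

def rss(design, y):
    """Residual sum of squares of an OLS fit."""
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    r = y - design @ beta
    return r @ r

rng = np.random.default_rng(4)
n_levels, per_level = 40, 25                          # hypothetical stand-in for zip codes
level = np.repeat(np.arange(n_levels), per_level)
x = rng.normal(size=level.size)                        # one continuous covariate
y = 0.5 * x + rng.normal(0.0, 1.0, n_levels)[level] + rng.normal(size=level.size)

ones = np.ones(level.size)
dummies = (level[:, None] == np.arange(1, n_levels)[None, :]).astype(float)  # level 0 = reference
reduced = np.column_stack([ones, x])                   # model without the factor
full = np.column_stack([ones, x, dummies])             # model with the whole factor

q = dummies.shape[1]                                   # 39 dummy columns tested jointly
df_resid = level.size - full.shape[1]
F = ((rss(reduced, y) - rss(full, y)) / q) / (rss(full, y) / df_resid)
partial_r2 = 1 - rss(full, y) / rss(reduced, y)        # share of remaining variance due to the factor
```

The factor gets one importance number (F or partial R²) regardless of how many levels it has, which is the composite summary the individual dummies cannot provide.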

So, in answer to your questions, this response suggests that:

1) ML models that ignore structure and variance for reasons of computational efficiency are not "sound approaches" for evaluating relative feature importance for descriptive purposes

2) "One-hot-encoding" may be necessary but is not useful for evaluating relative feature importance for descriptive purposes

3) "Are the same validation/optimization/parameter tuning methods to improve a predictive model relevant to such an 'explanatory model?'" Tuning methods that capture the important structural aspects of the data are valid for use with explanatory models intended to evaluate relative feature importance for descriptive purposes

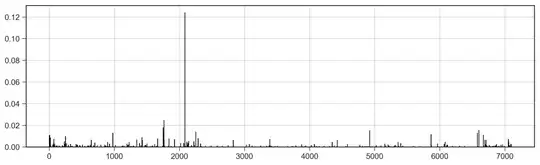

4) "The target variable has a very skewed distribution where 80% of items have a value of less than 3 and some a value of >100 - is that relevant?" Yes, this is highly relevant. Modeling sparse information is an area of intense research interest in the ML community. In my view, Nathan Kutz at the University of Washington is doing some of the best, if not the best, work in this area. Check out his 2013 book Data-Driven Modeling & Scientific Computation: Methods for Complex Systems & Big Data, which focuses on PDEs, as well as his one-hour YouTube lecture from June 2017, which covers his more recent publications on these issues: https://www.youtube.com/watch?feature=youtu.be&v=Oifg9avnsH4&app=desktop

/* Additional references on relative variable importance in ML */

These references just appeared on AndrewGelman.com (November 25, 2017):

Using output from a fitted machine learning algorithm as a predictor in a statistical model

http://andrewgelman.com/2017/11/24/using-output-fitted-machine-learning-algorithm-predictor-statistical-model/

“Why Should I Trust You?” Explaining the Predictions of Any Classifier

http://www.kdd.org/kdd2016/papers/files/rfp0573-ribeiroA.pdf
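The core idea of the second reference (LIME) can be sketched in a few lines: perturb the instance of interest, query the black-box model, weight the perturbed points by an exponential proximity kernel, and fit a weighted linear model whose slopes serve as a local explanation. This is a simplified NumPy illustration with hypothetical names and an assumed toy black box; it is not the `lime` package and skips LIME's interpretable representations and feature selection.

```python
import numpy as np

def black_box(X):
    """Toy stand-in for an opaque model: nonlinear in feature 0, linear in feature 1."""
    return X[:, 0] ** 2 + 3.0 * X[:, 1]

def local_surrogate(predict, x0, n_samples=5000, scale=0.1, kernel_width=0.25, seed=0):
    """Weighted linear fit to black-box outputs on perturbations around x0; returns local slopes."""
    rng = np.random.default_rng(seed)
    Z = x0 + rng.normal(0.0, scale, size=(n_samples, x0.size))
    dist = np.linalg.norm(Z - x0, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)        # exponential proximity kernel
    A = np.column_stack([np.ones(n_samples), Z - x0])
    sw = np.sqrt(w)                                     # weighted least squares via sqrt-weights
    coef, *_ = np.linalg.lstsq(A * sw[:, None], predict(Z) * sw, rcond=None)
    return coef[1:]

slopes = local_surrogate(black_box, np.array([1.0, 0.0]))
```

At x0 = (1, 0) the true local gradient is (2, 3), and the surrogate's slopes recover it; the explanation is local by construction and says nothing about the model's behavior elsewhere.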