I have learned that, when dealing with data using model-based approach, the first step is modeling data procedure as a statistical model. Then the next step is developing efficient/fast inference/learning algorithm based on this statistical model. So I want to ask which statistical model is behind the support vector machine (SVM) algorithm?

2 Answers

You can often write a model that corresponds to a loss function (here I'm going to talk about SVM regression rather than SVM-classification; it's particularly simple)

For example, in a linear model, if your loss function is $\sum_i g(\varepsilon_i) = \sum_i g(y_i-x_i'\beta)$ then minimizing that will correspond to maximum likelihood for $f\propto \exp(-a\,g(\varepsilon))$ $= \exp(-a\,g(y-x'\beta))$. (Here I have a linear kernel)

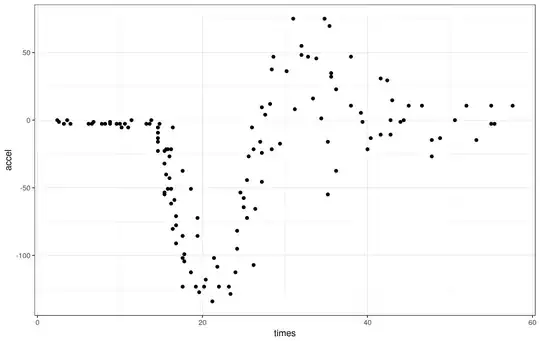

If I recall correctly SVM-regression has a loss function like this:

That corresponds to a density that is uniform in the middle with exponential tails (as we see by exponentiating its negative, or some multiple of its negative).

There's a 3 parameter family of these: corner-location (relative insensitivity threshold) plus location and scale.

It's an interesting density; if I recall rightly from looking at that particular distribution a few decades ago, a good estimator for location for it is the average of two symmetrically-placed quantiles corresponding to where the corners are (e.g. midhinge would give a good approximation to MLE for one particular choice of the constant in the SVM loss); a similar estimator for the scale parameter would be based on their difference, while the third parameter corresponds basically to working out which percentile the corners are at (this might be chosen rather than estimated as it often is for SVM).

So at least for SVM regression it seems pretty straightforward, at least if we're choosing to get our estimators by maximum likelihood.

(In case you're about to ask ... I have no reference for this particular connection to SVM: I just worked that out now. It's so simple, however, that dozens of people will have worked it out before me so no doubt there are references for it -- I've just never seen any.)

- 257,508

- 32

- 553

- 939

-

2(I answered this earlier elsewhere but I deleted that and moved it here when I saw you also asked here; the ability to write mathematics and include pictures is much better here -- and the search function is better too, so it's easier to find in a few months) – Glen_b Nov 14 '17 at 07:44

-

+1 actually this is very strange that it is that hard to find this in print. Is seems that everyone is discussing SVM in model-free terms. – Tim Nov 14 '17 at 10:02

-

2+1, plus the vanilla SVM also has a Gaussian prior on its parameters through the $\ell_2$-norm. – Firebug Nov 14 '17 at 10:15

-

2If the OP is asking about SVM, s/he is probably interested in classification (which is the most common application of SVMs). In that case the loss is [hinge loss](https://en.m.wikipedia.org/wiki/Hinge_loss) which is a bit different (you don't have the increasing part). Concerning the model, I heard academics saying at conference that SVMs were introduced to perform classification **without** having to use a probabilistic framework. Probably that's why you can't find references. On the other hand, you can and you do recast hinge loss minimization as empirical risk minimization - which means... – DeltaIV Nov 14 '17 at 10:32

-

...that someone has cooked up a probabilistic model. I will look ELS up later - maybe they have something. – DeltaIV Nov 14 '17 at 10:33

-

4Just because you don't have to have a probabilistic framework... doesn't mean what you're doing doesn't correspond to one. One can do least squares without assuming normality, but it's useful to understand that's what it's doing well at ... and when you're nowhere near it that's it may be doing much less well. – Glen_b Nov 14 '17 at 10:34

-

2@Delta on the hinge loss, in a regression framework I think that's quantile regression https://stats.stackexchange.com/questions/251600/quantile-regression-loss-function ... – Glen_b Nov 14 '17 at 10:36

-

1It may be possible to do something with a decision theory framework and back out a distributional assumption from a Bayesian approach, but that sounds pretty close to what DeltaIV is getting at above. – Glen_b Nov 14 '17 at 11:09

-

1@CagdasOzgenc It's not that it doesn't _"emit correct probabilities"_, it does not output probabilities at all. The only output is the (signed) distance to the margin. – Firebug Nov 14 '17 at 12:16

-

SVM is instrinsically linked to quantile regression, to the point regularized quantile regression is often performed using adapted SVM solvers, and sometimes distributed within the same frameworks. – Firebug Nov 14 '17 at 12:21

-

@Glen_b sure - I'm not saying you **can't** cast SVM for classification in a probabilistic framework, I'm just saying that researchers in the field told me they were introduced precisely to make do w/o probability theory (see also Firebug's comment on SVMs not predicting class probabilities, confirmed also by ESL). Bayesian decision theory is exactly what I was getting at - do you have ESP powers? :) Here's the relevant NIPS paper: http://people.ee.duke.edu/~lcarin/svm_nips2014.pdf I won't talk about SVMs for regression (which I know as SVR) because I know too little about them. – DeltaIV Nov 14 '17 at 12:27

-

It's kind of funny - in Machine Learning, we have *generative models* for classification, i.e., methods which try to estimate the *joint distribution* of predictors and labels (e.g., LDA). Then we have *discriminative models*, i.e., models which estimate the *conditional distribution* of labels given predictors (e.g., logistic regression). SVMs, in a way, are "less than discriminative" models - they don't even care to estimate the conditional probabilities (at least in the optimization interpretation), they just estimate the position of the maximum margin hyperplane (or manifold). – DeltaIV Nov 14 '17 at 12:39

-

2@DeltaIV There is a reason for that. As I explained here https://stats.stackexchange.com/questions/208529/when-is-it-appropriate-to-use-an-improper-scoring-rule/251654#251654 under misspecification (which is almost always) these approaches are more fail safe. – Cagdas Ozgenc Nov 14 '17 at 14:05

-

@Mehrdad I don't see you mentioning hinge loss, or empirical risk minimization, or the Carin's team paper. But the part on generative, discriminative and "less than discriminative" models is actually identical! I didn't read your question before writing my comments. Could it be that we were at the same conference and/or talked to the same people? Anyway, it's a pretty common interpretation - I think either Bishop's book or Andrew Ng's course mention that SVM are neither generative or discriminative, but I cannot check right now. – DeltaIV Nov 14 '17 at 22:52

-

1@DeltaIV: Haha, well do note that I said repeating *in* your comments, not entirely comprising them. =P I can't rule out that we've talked to the same people (though it seems unlikely) but I can tell you pretty much definitely that we haven't been to the same conferences. :-) And I haven't had read/taken/listened/any-other-verb'd anything from Andrew Ng or Bishop haha. – user541686 Nov 14 '17 at 23:37

-

3Maybe http://www.icml-2011.org/papers/386_icmlpaper.pdf is a reference for this?(I've only skimmed it) – Lyndon White Nov 15 '17 at 06:52

-

1@Lyndon Sure looks like it. If nobody writes that up I will put something from it into my answer when time permits. – Glen_b Nov 15 '17 at 07:26

I think someone already answered your literal question, but let me clear up a potential confusion.

Your question is somewhat similar to the following:

I have this function $f(x) = \ldots$ and I'm wondering what differential equation it is a solution to?

In other words, it certainly has a valid answer (perhaps even a unique one if you impose regularity constraints), but it's a rather strange question to ask, since it was not a differential equation that gave rise to that function in the first place.

(On the other hand, given the differential equation, it is natural to ask for its solution, since that's usually why you write the equation!)

Here's why: I think you're thinking of probabilistic/statistical models—specifically, generative and discriminative models, based on estimating joint and conditional probabilities from data.

The SVM is neither. It's an entirely different kind of model—one that bypasses those and attempts to directly model the final decision boundary, the probabilities be damned.

Since it's about finding the shape of the decision boundary, the intuition behind it is geometric (or perhaps we should say optimization-based) rather than probabilistic or statistical.

Given that probabilities aren't really considered anywhere along the way, then, it's rather unusual to ask what a corresponding probabilistic model could be, and especially since the entire goal was to avoid having to worry about probabilities. Hence why you don't see people talking about them.

- 1,075

- 1

- 9

- 21

-

6I think you are discounting the value of statistical models underlying your procedure. The reason it is useful is that it tells you what assumptions are behind a method. If you know these, you are able to understand which situations it will struggle and when it will thrive. You are also able to generalise and extend svm in a principled manner if you have the underlying model. – probabilityislogic Nov 14 '17 at 10:05

-

3@probabilityislogic: *"I think you are discounting the value of statistical models underlying your procedure."* ...I think we're speaking past each other. What I am trying to say is that there *isn't* a statistical model behind the procedure. I am *not* saying that it's not possible to come up with one that fits it a posteriori, but I'm trying to explain that it was not "behind" it in any way, but rather "fit" to it *after the fact*. I am also **not** saying that doing such a thing is useless; I agree with you that it could end up with tremendous value. Please bear these distinctions in mind. – user541686 Nov 14 '17 at 10:35

-

1@Mehrdad: *I am not saying that it's not possible to come up with one that fits it a posteriori,* The order in which the pieces of what we call the svm 'machine' were assembled (what problem the humans who designed it were originally trying to solve) is interesting from an history of science point of view. But for all we know there might be an yet unknown manuscript in some library containing a description of the svm engine from 200 years ago which attacks the problem from the angle Glen_b explored. Maybe the notions of *a posteriori* and *after the fact* are less dependable in science. – user603 Nov 14 '17 at 12:37

-

1@user603: It's not just the history that's the problem here. The historical aspect is only half of it. The other half is how it's normally actually derived in reality. It starts as a geometry problem and ends with an optimization problem. Nobody starts with the probabilistic model in the derivation, meaning the probabilistic model was in no sense "behind" the result. It's like claiming Lagrangian mechanics is "behind" F = ma. Maybe it can lead to it, and yes it is useful, but no, it is not and never was the basis of it. In fact the ***entire goal*** was to *avoid* probability. – user541686 Nov 14 '17 at 19:43