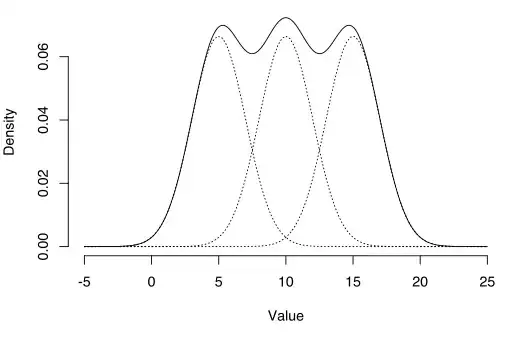

Let's say I have a bunch of dogs of different breeds $i = 1, 2, \ldots, n$. The probability that a random dog is of breed $i$ is $p_i$. The weight (in kg) of a dog of breed $i$ is distributed as $N(\mu_i, \sigma_i^2)$. How do I calculate the variance, skewness and kurtosis of the distribution of the weight of a random dog from a random breed?

Or, put differently, how do I calculate the variance, skewness and kurtosis of a mixture of normal distributions, i.e. a distribution whose density is a weighted sum of normal densities with weights $p_i$? Can it be done analytically, or do I need to use a Monte Carlo method?
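To make the setup concrete, here is a minimal sketch of what an analytic calculation might look like, assuming the weight distribution is read as a mixture of normals with weights $p_i$. It uses the standard central moments of a normal component around the overall mean. The function name `mixture_moments` and the NumPy dependency are illustrative assumptions, not part of the question, and the kurtosis returned is the non-excess kind (3 for a single normal).

```python
import numpy as np


def mixture_moments(p, mu, sigma):
    """Mean, variance, skewness and (non-excess) kurtosis of a normal mixture.

    p     : breed probabilities p_i (renormalised here to sum to 1)
    mu    : breed means mu_i
    sigma : breed standard deviations sigma_i
    (hypothetical helper, written for illustration only)
    """
    p = np.asarray(p, dtype=float)
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    p = p / p.sum()

    # Overall mean of the mixture.
    mean = np.sum(p * mu)

    # Offset of each component mean from the overall mean.
    d = mu - mean

    # Central moments of the mixture: for X ~ N(mu_i, sigma_i^2),
    # E[(X - mean)^2] = sigma_i^2 + d_i^2
    # E[(X - mean)^3] = d_i^3 + 3 d_i sigma_i^2
    # E[(X - mean)^4] = d_i^4 + 6 d_i^2 sigma_i^2 + 3 sigma_i^4
    var = np.sum(p * (sigma**2 + d**2))
    m3 = np.sum(p * (d**3 + 3 * d * sigma**2))
    m4 = np.sum(p * (d**4 + 6 * d**2 * sigma**2 + 3 * sigma**4))

    skewness = m3 / var**1.5
    kurtosis = m4 / var**2  # subtract 3 for excess kurtosis
    return mean, var, skewness, kurtosis
```

A Monte Carlo run (draw a breed with probability $p_i$, then a weight from that breed's normal) could serve as a sanity check on these values, but under the mixture reading it should not be required.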