I think you are confusing two x in your mind possibly:$\def\x{\mathbf{x}}\def\z{\mathbf{z}}$

- there is the single vector $\mathbf{x}$ associated with a new example for which you want the probability distribution, given the parameters $\theta$. Let's denote this $\x'$

- with no additional information, this will simply reflect your priors

- there is the evidence, a set of training data, $\mathcal{X}$

- by taking into account this evidence, you can improve the estimate for the distribution of $\z'$, $\theta$, $\x'$, and so on, conditioned on various combinations of the other variables

- these will be posterior distributions (posterior, updated with the provided evidence, $\mathcal{X}$)

- we can see they are posterior, since they will be conditioned on $\mathcal{X}$

So, what you are looking for, I reckon is $\def\X{\mathcal{X}}p(\x' \mid \theta, \X)$, ie conditioned on the training data, the evidence, $\X$.

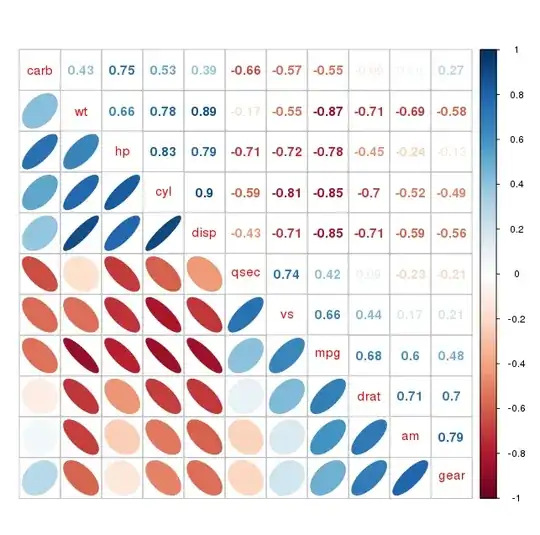

Here's a diagram of one way of depicting these variables:

Looking at this diagram, and thinking about your question, we probably want to do in sequence:

- obtain the posterior $p(\z \mid \mathcal{X}, \theta)$

- use this posterior to obtain $p(\x' \mid \theta, \X)$

However... thinking this through a bit, I'm not sure we can just 'infer' a distribution: I think what we want to do is postulate a parametric family of distributions for $p(\z)$, eg using $\phi$ as the parameters, this would be:

$$

p(\z \mid \phi) = f_\phi(\z)

$$

... where $f(\cdot)$ is some parametric probability distribution we choose. We'd then learn $\phi$ using Bayesian inference.

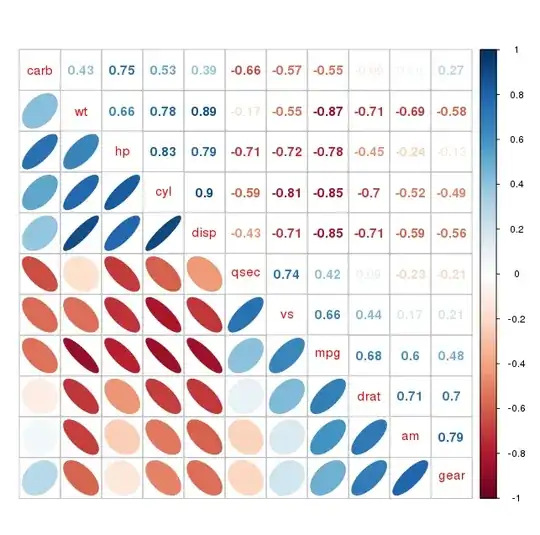

Our diagram will become:

Now things start to become fairly standard I think. We need to use Bayesian inference to estimate a distribution for $p(\phi \mid \X)$, and then slot this into standard likelihood-type formulae, to obtain the final expression for $p(\x' \mid \theta, \X)$, which we now see is something more like the marginal over $p(x', \phi \mid \theta, \X)$.

Let's start by writing down expressions we know. We have:

- a prior $p(\phi)$, eg some uniformed prior, standard Gaussian etc

- distribution of $\z$ given $\phi$, $p(\z \mid \phi)$

- prior over $\theta$, $p(\theta)$

- likelihood function $p(\x \mid \z, \theta)$

Let's express the likelihood function in terms of $\phi$. Working our way forwards we have:

- prior $p(\phi)$

- $p(\z, \phi) = p(\z \mid \phi)p(\phi)$

- $p(\x, \z, \theta, \phi) = p(\x \mid \z, \phi) p(\z \mid \phi)p(\phi)$

Let's write down the joint, and start to factorize:

$$

p(\x, \z, \theta, \phi) = p(\x, \z, \phi \mid \theta)p(\theta)

$$

$$

= p(\x, \z \mid \theta, \phi)p(\phi \mid \theta) p(\theta)

$$

$\phi$ is independent of $\theta$, so we have:

$$

p(\x, \z, \theta, \phi) = p(\x, \z \mid \theta, \phi) p(\phi)p(\theta)

$$

$$

= p(\x \mid \z, \theta, \phi)p(\z \mid \theta, \phi) p(\phi)p(\theta)

$$

$\z$ is independent of $\theta$. $\x$ is conditionally independent of $\phi$, given $\z$. So we have:

$$

p(\x, \z, \theta, \phi) = p(\x \mid \z, \theta) p(\z \mid \phi) p(\phi) p(\theta)

$$

We have closed-form expressions for each of the terms on the right-hand side, ie the likelihood, the priors over the parameters, and the distribution of $\z$ given $\phi$.

Let's start to consider the evidence, $\X$. We can decompose the evidence into individual examples:

- $\X = \{ \mathbf{X}_1, \mathbf{X}_2, \dots, \mathbf{X}_n \}$

- $\mathcal{Z} = \{ \mathbf{Z}_1, \mathbf{Z}_2, \dots, \mathbf{Z}_n \}$

So we have for example:

$$

p(\X) = \prod_{i=1}^n p(\mathbf{X}_i)

$$

We want to obtain:

$$

p(\phi \mid \X) = p(\phi \mid \mathbf{X}_1, \mathbf{X}_2, \dots, \mathbf{X}_n)

$$

By Bayes Rule we have:

$$

p(\phi \mid \X) = \frac{p(\X \mid \phi)p(\phi)}

{p(\X)}

$$

$p(\phi)$ is just the prior, so that easy. The other expressions will take some more work. For the numerator, we'll need to marginalize. But before we do that, we note that in the ultimate target expression, for the original question, we are conditioning on $\phi$, ie we want $p(\x' \mid \theta, \X)$. So, let's condition on $\theta$ here too:

$$

p(\phi \mid \X, \theta) = \frac{p(\X \mid \phi, \theta)p(\phi \mid \theta)}

{p(\X \mid \theta)}

$$

$\phi$ is independent of $\theta$, so we have:

$$

p(\phi \mid \X, \theta) = \frac{p(\X \mid \phi, \theta)p(\phi)}

{p(\X \mid \theta)}

$$

where $p(\phi)$ is just the prior over $\phi$, as before.

The denominator is the marginal:

$$

E_1 = p(\X \mid \theta) = \prod_{i=1}^n p(\mathbf{X}_i \mid \theta)

= \prod_{i=1}^n \int_\z \int_\phi p(\mathbf{X}_i, \z, \phi \mid \theta)\,d\phi\,d\z

$$

The first term on the numerator is another marginal:

$$

E_2 = p(\X \mid \phi, \theta) = \prod_{i=1}^n p(\mathbf{X}_i \mid \phi, \theta)

= \prod_{i=1}^n \int_\z p(\mathbf{X}_i, \z \mid \phi, \theta)\,d\z

$$

As for how to solve these marginalizations, I think I will leave that to you for now. However, I think the answer to your original question is:

- no, we cant just form an expectation in the way you are doing

- we need to introduce a parametric distribution over $\z$, eg parameterized by $\phi$, and perform Bayesian inference to estimate $\phi$, or a distribution over $\phi$

- in addition, I think it's important to distinguish between the $\x'$ of a new example for which you want a probability distribution, and the $\X$ that forms your evidence, based on which you will form posterior distributions, eg for $\phi$