I know the same question has already been asked here, but I couldn't get a satisfactory answer, and I want to ask something slightly different.

For the skip-gram model, both the 2nd answer to the above post and Word2Vec Explained say the same thing: the C output multinomial distributions are all generated by the same hidden -> output weight matrix.

1) With the same N-by-V matrix, how can different output vectors be generated?

What I figured out is that C identical output vectors are generated, and then the cross-entropy losses are calculated against C different true labels. For example, in 'the cat jumped over the table', given 'jumped' as the input, it is fed forward through the network to produce an output vector O. Then, with C = 2, the losses are computed by comparing O with the vectors for 'cat' and 'over'. Finally, we sum the losses and back-propagate the summed loss to update the shared weight matrix.
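To make this concrete, here is a minimal NumPy sketch of the procedure as I understand it. It only illustrates my reading and is not taken from either reference: the toy vocabulary, the hidden size N = 3, the random weights, and all variable names (`W_in`, `W_out`, `grad_u`, ...) are my own assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy vocabulary and sizes (assumed for illustration only).
vocab = ["the", "cat", "jumped", "over", "table"]
word_to_id = {w: i for i, w in enumerate(vocab)}
V, N = len(vocab), 3

W_in = rng.normal(size=(V, N))   # input -> hidden weights
W_out = rng.normal(size=(N, V))  # hidden -> output weights, shared by all C panels


def softmax(u):
    e = np.exp(u - u.max())      # subtract the max for numerical stability
    return e / e.sum()


center = word_to_id["jumped"]
context = [word_to_id["cat"], word_to_id["over"]]   # C = 2

h = W_in[center]   # hidden layer: the input vector of the center word
u = h @ W_out      # scores; the same for every panel because W_out is shared
y = softmax(u)     # the single output distribution O

# One cross-entropy term per true context word, all against the same y.
losses = [-np.log(y[c]) for c in context]
total_loss = sum(losses)

# Gradient of the summed loss w.r.t. the scores: sum over panels of (y - one_hot).
grad_u = len(context) * y.copy()
for c in context:
    grad_u[c] -= 1.0

grad_W_out = np.outer(h, grad_u)   # gradient for the shared N-by-V matrix
```

If this reading is right, the C panels only differ in which true label they are compared against, so the per-panel errors are simply added before updating `W_out`.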

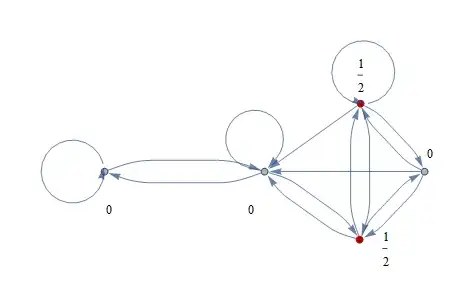

2) The figure below is from Word2vec explained by Xin Rong.

I don't think I understand the meaning of u_c,j.

What is the 'net input' of the j-th unit on the c-th panel of the output layer?