At my job I am working on standardizing some data. Right now we are simply using z-scores, and it's causing some problems. For instance, one outlier has had three four- or five-standard-deviation moves this week! That clearly isn't right.
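
Repeated 4σ and 5σ moves look wrong because, if the standardized values really were Gaussian, the two-sided tail probability P(|Z| ≥ k) = erfc(k/√2) would make such moves extremely rare. Here is a minimal Python sketch. The function names are hypothetical, and it assumes the z-scores are computed with the sample mean and sample standard deviation:

```python
import math
from statistics import mean, stdev


def z_scores(xs):
    """Standard z-scores: (x - sample mean) / sample standard deviation."""
    mu, sd = mean(xs), stdev(xs)
    return [(x - mu) / sd for x in xs]


def gaussian_two_sided_tail(k):
    """P(|Z| >= k) for a standard normal Z, via the complementary error function."""
    return math.erfc(k / math.sqrt(2))


# How often a Gaussian would produce moves of 3, 4 and 5 standard deviations.
for k in (3, 4, 5):
    p = gaussian_two_sided_tail(k)
    print(f"P(|Z| >= {k}) = {p:.3g}, about 1 in {1 / p:,.0f} observations")
```

Under normality, a move beyond 3σ occurs roughly once in 370 observations, beyond 4σ once in 15,800, and beyond 5σ once in 1.7 million. Several such moves in a single week therefore point to heavy tails, not a Gaussian.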

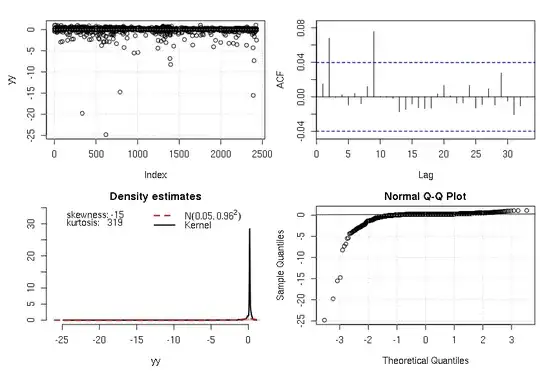

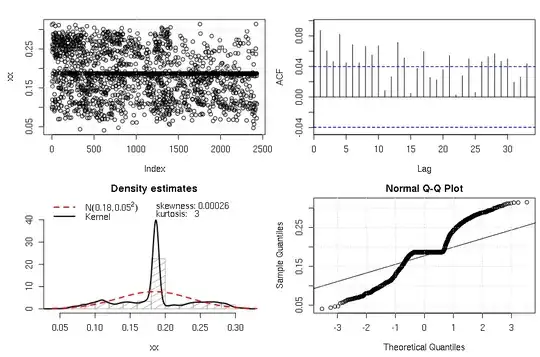

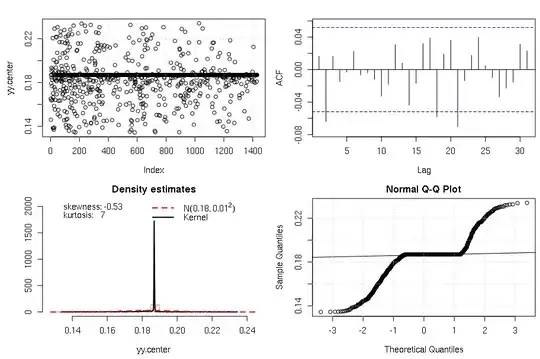

The empirical (pre-transformation) distribution I'm working with has a kurtosis of 27 and fails every test for normality. I would like to transform it into something closer to a Gaussian distribution. While researching, I found the thread "How to transform leptokurtic distribution to normality?", but it doesn't seem to apply to my case.
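
The following sketch makes "kurtosis of 27" and "fails every test for normality" concrete. It computes the moment-based (non-excess) kurtosis, for which a Gaussian gives 3, and the Jarque-Bera statistic, a normality test built directly on skewness and kurtosis. It is pure Python with hypothetical helper names. Some libraries report excess kurtosis instead, where a Gaussian gives 0 (for example `scipy.stats.kurtosis` with its default `fisher=True`), so it matters which convention the 27 uses.

```python
import math


def central_moment(xs, k):
    """k-th central moment, using the 1/n (population) convention."""
    mu = sum(xs) / len(xs)
    return sum((x - mu) ** k for x in xs) / len(xs)


def skewness(xs):
    """Moment-based skewness m3 / m2**1.5; a Gaussian gives 0."""
    return central_moment(xs, 3) / central_moment(xs, 2) ** 1.5


def kurtosis(xs):
    """Moment-based (non-excess) kurtosis m4 / m2**2; a Gaussian gives 3."""
    return central_moment(xs, 4) / central_moment(xs, 2) ** 2


def jarque_bera(xs):
    """Jarque-Bera statistic and its asymptotic p-value (chi-squared, 2 df, under normality)."""
    n = len(xs)
    s, k = skewness(xs), kurtosis(xs)
    jb = n / 6 * (s ** 2 + (k - 3) ** 2 / 4)
    p_value = math.exp(-jb / 2)  # survival function of chi-squared with 2 degrees of freedom
    return jb, p_value
```

Take zero skewness and a kurtosis of 27. The kurtosis term alone then gives JB = n/6 · 24²/4 = 24n, which is 2400 for n = 100. That is far beyond anything a chi-squared(2) distribution plausibly produces.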

The top answer recommends a Lambert W × F distribution, but the delta parameter seems to be the key, and it isn't working for me. His example uses delta = 0.2, while I am using 1/27 (one over my realized kurtosis). His `yy` variable fails the normality test while mine passes, which invalidates the comparison. The thread also mentions a "median-subtracted third root" transformation, but there's no chance I'll be able to sell that to my boss.
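
Here is a sketch of the heavy-tail Lambert W × Gaussian transform as I understand Goerg's "h" formulation. A standard normal U is mapped to Z = U·exp(δU²/2). The inverse, which Gaussianizes data, is u = sign(z)·√(W₀(δz²)/δ), where W₀ is the principal branch of the Lambert W function. Gaussian moment identities give the kurtosis of Z as 3(1 − 2δ)³/(1 − 4δ)^(5/2), which is finite only for δ < 1/4.

The sketch is pure Python, and every function name is hypothetical. `lambert_w0` is a small Halley-iteration stand-in for a library routine. `loc` and `scale` in `gaussianize` are assumed to be estimated elsewhere (not shown).

```python
import math


def lambert_w0(x, tol=1e-12, max_iter=100):
    """Principal branch W0(x) for x >= 0 via Halley's method (stand-in for a library routine)."""
    if x < 0:
        raise ValueError("this sketch only handles x >= 0")
    w = math.log1p(x)  # starting guess; W0(x) <= log(1 + x) for x >= 0
    for _ in range(max_iter):
        ew = math.exp(w)
        f = w * ew - x
        w_next = w - f / (ew * (w + 1) - (w + 2) * f / (2 * w + 2))
        if abs(w_next - w) <= tol * (1 + abs(w_next)):
            return w_next
        w = w_next
    return w


def heavy_tail_forward(u, delta):
    """Heavy-tail Lambert W x Gaussian map: z = u * exp(delta * u**2 / 2)."""
    return u * math.exp(0.5 * delta * u * u)


def heavy_tail_inverse(z, delta):
    """Inverse map: u = sign(z) * sqrt(W0(delta * z**2) / delta); delta = 0 is the identity."""
    if delta == 0:
        return z
    return math.copysign(math.sqrt(lambert_w0(delta * z * z) / delta), z)


def gaussianize(xs, delta, loc, scale):
    """Back-transform data given delta, loc and scale; estimating those three is NOT shown here."""
    return [loc + scale * heavy_tail_inverse((x - loc) / scale, delta) for x in xs]


def implied_kurtosis(delta):
    """Kurtosis of Z = U * exp(delta * U**2 / 2), U ~ N(0, 1): 3(1-2d)^3 / (1-4d)^(5/2)."""
    if not 0 <= delta < 0.25:
        raise ValueError("kurtosis is finite only for 0 <= delta < 1/4")
    return 3 * (1 - 2 * delta) ** 3 / (1 - 4 * delta) ** 2.5


def delta_for_kurtosis(target, tol=1e-10):
    """Crude moment matching: bisect for the delta whose implied kurtosis equals target (> 3)."""
    lo, hi = 0.0, 0.25  # implied_kurtosis rises monotonically from 3 toward infinity on [0, 1/4)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if implied_kurtosis(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for d in (0.2, 1 / 27):
    print(f"delta = {d:.4f}: implied kurtosis = {implied_kurtosis(d):.2f}")
print(f"delta matching kurtosis 27: {delta_for_kurtosis(27):.3f}")
```

Plugging in the two values from the question, `implied_kurtosis(0.2)` is about 36, while `implied_kurtosis(1/27)` is only about 3.56. That would be consistent with the example's `yy` failing the normality test and a `yy` generated with delta = 1/27 passing it. If 27 is the raw (non-excess) kurtosis, `delta_for_kurtosis(27)` lands near 0.19.

Matching a single sample moment is crude, because the sample kurtosis of heavy-tailed data is very noisy. It is shown here only to illustrate how delta and kurtosis relate.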

Can someone help me with this transformation? Here's my data: https://www.dropbox.com/s/r31nub55umacf06/data.csv?dl=0