When increasing the value of the latent variable k for LDA (latent Dirichlet allocation), how should perplexity behave:

- On the training set?

- On the testing set?

The original paper on LDA gives some insights into this:

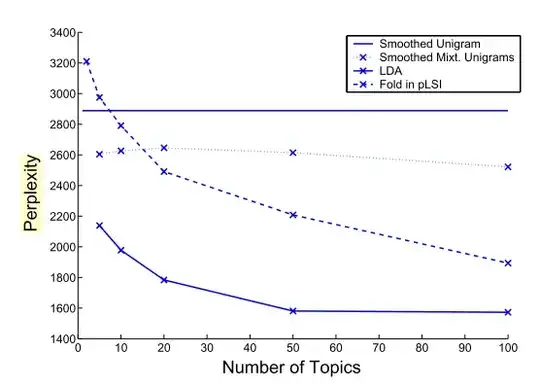

> In particular, we computed the perplexity of a held-out test set to evaluate the models. The perplexity, used by convention in language modeling, is monotonically decreasing in the likelihood of the test data, and is algebraically equivalent to the inverse of the geometric mean per-word likelihood. A lower perplexity score indicates better generalization performance.
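To make the quoted definition concrete: for a test set of M documents, the LDA paper defines perplexity as exp(-Σ_d log p(w_d) / Σ_d N_d), where N_d is the number of words in document d. Below is a minimal sketch of that formula, assuming the per-document log-likelihoods log p(w_d) are already available. For LDA these are intractable to compute exactly and have to be approximated; here they are simply taken as given. The function names `perplexity` and `inverse_geometric_mean` are hypothetical helpers for illustration, and the toy example treats per-word probabilities as independent purely to check the "inverse geometric mean" equivalence.

```python
import math

def perplexity(doc_log_likelihoods, doc_lengths):
    """Held-out perplexity: exp(-sum_d log p(w_d) / sum_d N_d).

    doc_log_likelihoods: natural-log likelihood log p(w_d) of each test document
                         (assumed to be precomputed by some inference method).
    doc_lengths:         number of words N_d in each test document.
    """
    total_log_lik = sum(doc_log_likelihoods)
    total_words = sum(doc_lengths)
    return math.exp(-total_log_lik / total_words)

def inverse_geometric_mean(word_probs):
    """Reciprocal of the geometric mean of a list of per-word likelihoods."""
    n = len(word_probs)
    geo_mean = math.exp(sum(math.log(p) for p in word_probs) / n)
    return 1.0 / geo_mean

# Toy, hypothetical per-word probabilities for two test documents.
doc1 = [0.1, 0.2]
doc2 = [0.05, 0.1, 0.4]
log_liks = [sum(math.log(p) for p in doc) for doc in (doc1, doc2)]
lengths = [len(doc1), len(doc2)]

print(perplexity(log_liks, lengths))
print(inverse_geometric_mean(doc1 + doc2))  # same value as the line above
```

Both printed values agree, which is the algebraic equivalence mentioned in the quote. A model that assigns every word probability 0.25 gets a perplexity of 4: it is "as confused" as a uniform choice among 4 words. Raising the test likelihood lowers the perplexity, which is why lower is better.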

This is the behavior you should expect on test data. Here is a result from the paper: