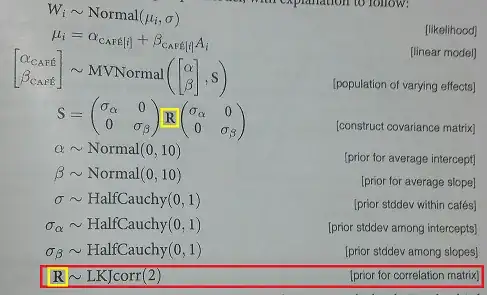

The LKJ distribution (named for Lewandowski, Kurowicka, and Joe) is an extension of the work of H. Joe (1). Joe proposed a procedure to generate correlation matrices uniformly over the space of all positive definite correlation matrices. The contribution of (2) is to extend Joe's work and show that there are more efficient ways to generate such samples, based on vines and an extended version of the onion method.
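To make the vine idea concrete, here is a minimal Python sketch of the C-vine sampler as I understand it from (2), assuming NumPy is available. Partial correlations are drawn from Beta distributions rescaled to $(-1, 1)$, with a Beta parameter that shrinks by $1/2$ at each level of the vine. They are then converted back to ordinary correlations. The function name `lkj_vine_sample` is made up for illustration; it is not taken from Stan or any other library.

```python
import numpy as np

def lkj_vine_sample(d, eta, rng=None):
    """Draw one d x d correlation matrix from LKJ(eta) via the C-vine method (sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    P = np.zeros((d, d))  # P[k, i]: partial correlation of (k, i) given variables 0..k-1
    R = np.eye(d)
    beta = eta + (d - 1) / 2.0
    for k in range(d - 1):
        beta -= 0.5  # each deeper level of the vine uses a smaller Beta parameter
        for i in range(k + 1, d):
            # partial correlation ~ Beta(beta, beta), rescaled from (0, 1) to (-1, 1)
            P[k, i] = 2.0 * rng.beta(beta, beta) - 1.0
            r = P[k, i]
            # remove the conditioning variables one at a time to get the raw correlation
            for l in range(k - 1, -1, -1):
                r = r * np.sqrt((1.0 - P[l, i] ** 2) * (1.0 - P[l, k] ** 2)) + P[l, i] * P[l, k]
            R[k, i] = R[i, k] = r
    return R
```

Because any set of partial correlations in $(-1, 1)$ maps to a valid correlation matrix, the result is positive definite without any rejection step.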

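The parameterization commonly used in software such as Stan has a single shape parameter $\eta > 0$, with density proportional to $\det(R)^{\eta - 1}$, which controls how closely the sampled matrices resemble the identity matrix. With $\eta = 1$ the distribution is uniform over positive definite correlation matrices; $\eta > 1$ concentrates mass near $I$, and $0 < \eta < 1$ favors strong correlations. This means you can move smoothly from sampling matrices that are all very nearly $I$ to matrices that are uniform over PD correlation matrices.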
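The role of $\eta$ can be checked numerically. The sketch below (Python with NumPy; both function names are hypothetical) evaluates the unnormalized log density $(\eta - 1)\log\det R$. It also computes the variance $1/(2\eta + d - 1)$ of a single correlation, assuming the result from (2) that each off-diagonal entry is marginally $\mathrm{Beta}(\eta - 1 + d/2,\ \eta - 1 + d/2)$ rescaled to $(-1, 1)$.

```python
import numpy as np

def lkj_log_density_unnormalized(R, eta):
    """log of det(R) ** (eta - 1); the omitted constant depends only on eta and d."""
    sign, logdet = np.linalg.slogdet(R)
    if sign <= 0:
        raise ValueError("R must be positive definite")
    return (eta - 1.0) * logdet

def lkj_marginal_variance(d, eta):
    """Variance of one off-diagonal entry, assumed Beta(a, a) rescaled to (-1, 1)."""
    a = eta - 1.0 + d / 2.0
    return 1.0 / (2.0 * a + 1.0)  # Var of Beta(a, a) on (0, 1) is 1 / (4(2a + 1)); times 4

I3 = np.eye(3)
R3 = np.full((3, 3), 0.5) + 0.5 * np.eye(3)  # unit diagonal, every correlation 0.5
```

For `R3`, $\det R = 0.5$, so its log density relative to $I$ is negative for $\eta > 1$, zero for $\eta = 1$, and positive for $\eta < 1$. For $d = 2$ and $\eta = 1$ the variance is $1/3$, matching a uniform correlation on $(-1, 1)$.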

An alternative manner of sampling from correlation matrices, called the "onion" method, is found in (3). (No relation to the satirical news magazine -- probably.)
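For comparison, here is a sketch of the *extended* onion method from (2), a modification of the onion method in (3) that targets the LKJ density; it is not the original Ghosh-Henderson algorithm. The matrix is grown one row at a time. Each new column is the Cholesky factor of the current matrix times a random vector whose direction is uniform and whose squared length is Beta-distributed. NumPy is assumed, and `lkj_onion_sample` is a hypothetical name.

```python
import numpy as np

def lkj_onion_sample(d, eta, rng=None):
    """Draw one d x d correlation matrix from LKJ(eta) with the extended onion method (sketch)."""
    rng = np.random.default_rng() if rng is None else rng
    if d == 1:
        return np.ones((1, 1))
    beta = eta + (d - 2) / 2.0
    r12 = 2.0 * rng.beta(beta, beta) - 1.0  # first correlation, rescaled to (-1, 1)
    R = np.array([[1.0, r12], [r12, 1.0]])
    for k in range(2, d):
        beta -= 0.5
        y = rng.beta(k / 2.0, beta)          # squared length of w, lies in (0, 1)
        u = rng.standard_normal(k)
        u /= np.linalg.norm(u)               # uniform direction on the unit sphere in R^k
        w = np.sqrt(y) * u
        z = np.linalg.cholesky(R) @ w        # correlations of the new variable with the old ones
        R = np.block([[R, z[:, None]], [z[None, :], np.ones((1, 1))]])
    return R
```

Each step keeps the matrix positive definite: the new Schur complement is $1 - z^\top R^{-1} z = 1 - w^\top w = 1 - y$, and $y$ lies in $(0, 1)$.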

Another alternative is to sample from a Wishart distribution, whose draws are positive definite (almost surely, when the degrees of freedom are at least the dimension), and then rescale by the standard deviations to leave a correlation matrix. Some downsides to the Wishart/Inverse Wishart procedure are discussed in "Downsides of inverse Wishart prior in hierarchical models".
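A minimal sketch of the Wishart route, assuming NumPy and an integer number of degrees of freedom `df >= d`, so the draw can be built as a sum of `df` Gaussian outer products. The rescaling step is $R = D^{-1/2} W D^{-1/2}$ with $D = \operatorname{diag}(W)$. The function name is hypothetical.

```python
import numpy as np

def wishart_correlation_sample(d, df, scale=None, rng=None):
    """Draw W ~ Wishart(df, scale) as a sum of outer products, then rescale W to unit diagonal."""
    rng = np.random.default_rng() if rng is None else rng
    scale = np.eye(d) if scale is None else scale
    if df < d:
        raise ValueError("need df >= d for W to be positive definite (almost surely)")
    L = np.linalg.cholesky(scale)
    X = rng.standard_normal((df, d)) @ L.T  # df rows, each ~ N(0, scale)
    W = X.T @ X                             # one Wishart(df, scale) draw
    s = np.sqrt(np.diag(W))                 # per-variable standard deviations
    return W / np.outer(s, s)               # D^{-1/2} W D^{-1/2}
```

Unlike LKJ, the spread of the resulting correlations is controlled only indirectly, through `df` and `scale`.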

(1) H. Joe. "Generating random correlation matrices based on partial correlations." Journal of Multivariate Analysis, 97 (2006), pp. 2177-2189

(2) D. Lewandowski, D. Kurowicka, H. Joe. "Generating random correlation matrices based on vines and extended onion method." Journal of Multivariate Analysis, 100 (9) (2009), pp. 1989-2001

(3) S. Ghosh, S.G. Henderson. "Behavior of the NORTA method for correlated random vector generation as the dimension increases." ACM Transactions on Modeling and Computer Simulation (TOMACS), 13 (3) (2003), pp. 276-294