After a while, I came back to this question, which has not received an answer so far.

I was finally able to give a formal proof of the statement.

I will give it in the one-dimensional case.

The proof with $k$ regressors is a straightforward extension and follows the same steps as the one below.

Let's begin by stating the hypotheses:

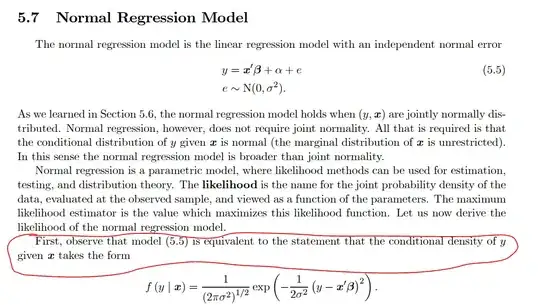

$Y = \beta X + e $

$e \sim N(0, \sigma^2)$

$X$ and $e$ are independent
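To make the hypotheses concrete, the following Python sketch simulates the model with $X$ drawn from an Exponential(1) distribution (an arbitrary, deliberately non-normal choice made only for illustration, since the hypotheses place no restriction on $X$), with $\beta = 2$ and $\sigma = 0.5$ also picked for illustration. The helper names `simulate` and `sample_skewness` are hypothetical. It shows that the marginal distribution of $Y$ can be far from normal, while $Y - \beta X = e$ behaves like $N(0, \sigma^2)$.

```python
import math
import random

def sample_skewness(values):
    """Sample skewness: third central moment divided by the second to the power 1.5."""
    n = len(values)
    mean = math.fsum(values) / n
    m2 = math.fsum((v - mean) ** 2 for v in values) / n
    m3 = math.fsum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

def simulate(n, beta, sigma, seed=0):
    """Draw n pairs from Y = beta*X + e.

    Illustrative assumption: X ~ Exponential(1), a skewed, non-normal choice.
    e ~ N(0, sigma^2) is drawn independently of X, as in the hypotheses.
    """
    rng = random.Random(seed)
    xs = [rng.expovariate(1.0) for _ in range(n)]
    ys = [beta * x + rng.gauss(0.0, sigma) for x in xs]
    return xs, ys

beta, sigma = 2.0, 0.5
xs, ys = simulate(100_000, beta, sigma)
residuals = [y - beta * x for x, y in zip(xs, ys)]

# Y inherits the skewness of X, so its marginal distribution is not normal...
print(f"skewness of Y:          {sample_skewness(ys):.2f}")
# ...while Y - beta*X = e has skewness close to 0, as N(0, sigma^2) should.
print(f"skewness of Y - beta*X: {sample_skewness(residuals):.2f}")
```

The non-normality of $Y$ itself is exactly why the statement is about the conditional distribution $Y \mid X$, not the marginal one.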

We want to prove that $f_{Y \mid X}(y \mid x)$ is the density of $N(\beta x, \sigma^2)$.

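We make no assumption about the distribution of $X$ (other than that it has a density $f_X$, which the argument below uses, with $f_X(x) > 0$ at the point $x$ considered). I also want to point out that $X$ is RANDOM, contrary to what many people say, namely that $X$ is considered FIXED.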

Consequently, $X$ has a probability distribution. With that said, let's begin the proof:

$f_{Y \mid X}(y \mid x) = \dfrac{f_{YX}(y,x)}{f_{X}(x)}$

$f_{YX}(y,x) = f_{eX}(y - \beta x,\, x)$

This equality follows from the bivariate change of variables $(e, X) \mapsto (Y, X) = (\beta X + e, X)$, whose Jacobian determinant equals $1$.
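For completeness, the change of variables can be written out explicitly. Here $g$ is just a name introduced for the map $(e, x) \mapsto (y, x)$, and the last line is the standard change-of-variables formula for joint densities:

$$
\begin{aligned}
g(e, x) &= (\beta x + e,\; x), \qquad g^{-1}(y, x) = (y - \beta x,\; x),\\
J &= \det\begin{pmatrix} \dfrac{\partial (y - \beta x)}{\partial y} & \dfrac{\partial (y - \beta x)}{\partial x} \\[6pt] \dfrac{\partial x}{\partial y} & \dfrac{\partial x}{\partial x} \end{pmatrix}
= \det\begin{pmatrix} 1 & -\beta \\ 0 & 1 \end{pmatrix} = 1,\\
f_{YX}(y, x) &= f_{eX}\big(g^{-1}(y, x)\big)\,\lvert J \rvert = f_{eX}(y - \beta x,\; x).
\end{aligned}
$$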

The independence of $e$ and $X$ implies that

$f_{eX}(y - \beta x,\, x) = f_e(y - \beta x)\, f_X(x)$

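Finally, substituting into the definition of the conditional density, $f_X(x)$ cancels, and since $e \sim N(0, \sigma^2)$,

$f_{Y \mid X}(y \mid x) = \dfrac{f_e(y - \beta x)\, f_X(x)}{f_X(x)} = f_e(y - \beta x) = \dfrac{1}{\sigma \sqrt{2\pi}} \exp{\bigg(-\dfrac{1}{2} \Big(\dfrac{y - \beta x}{\sigma} \Big)^2 \bigg)}$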
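As a numerical sanity check of the final expression, the sketch below (Python standard library only; the function name `conditional_density` and the parameter values are illustrative choices) compares the hand-written density with `statistics.NormalDist(mu, sigma).pdf` for $\mu = \beta x$ and verifies with a midpoint rule that it integrates to $1$ over $y$.

```python
import math
from statistics import NormalDist

def conditional_density(y, x, beta, sigma):
    """f_{Y|X}(y | x) = f_e(y - beta*x) for e ~ N(0, sigma^2), written out by hand."""
    z = (y - beta * x) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

beta, sigma, x = 2.0, 0.5, 1.3  # arbitrary illustrative values

# 1) Agrees with the standard library's N(beta*x, sigma^2) density at several points.
reference = NormalDist(mu=beta * x, sigma=sigma)
for y in (1.0, 2.6, 3.1, 4.0):
    assert math.isclose(conditional_density(y, x, beta, sigma), reference.pdf(y), rel_tol=1e-12)

# 2) Integrates to 1 over y (midpoint rule on a +/- 10 sigma grid around beta*x).
lo, hi, n = beta * x - 10 * sigma, beta * x + 10 * sigma, 20_000
h = (hi - lo) / n
total = sum(conditional_density(lo + (i + 0.5) * h, x, beta, sigma) for i in range(n)) * h
print(f"integral over y: {total:.6f}")
```

Note that nothing about the distribution of $X$ enters the check: for fixed $x$, the conditional density depends only on $\beta$, $\sigma$ and $x$.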

This concludes the proof, since the last expression is the density of a normal distribution with mean $\beta x$ and variance $\sigma^2$; that is,

$Y \mid X = x \sim N(\beta x, \sigma^2)$
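A Monte Carlo sketch of the conclusion, under illustrative assumptions chosen only for this example ($X \sim$ Exponential(1), $\beta = 2$, $\sigma = 0.5$): keeping only draws whose $X$ falls in a narrow window around $x_0 = 1$ approximates conditioning on $X = x_0$, so the retained $Y$ values should have mean close to $\beta x_0 = 2$ and variance close to $\sigma^2 = 0.25$. The function name `conditional_sample` and the window half-width are hypothetical.

```python
import random
import statistics

def conditional_sample(n, beta, sigma, x0, half_width, seed=1):
    """Simulate Y = beta*X + e and keep the Y values whose X falls near x0.

    Illustrative assumption: X ~ Exponential(1), a non-normal choice (the
    proof makes no assumption on its distribution); e ~ N(0, sigma^2) is
    drawn independently of X.
    """
    rng = random.Random(seed)
    kept = []
    for _ in range(n):
        x = rng.expovariate(1.0)
        y = beta * x + rng.gauss(0.0, sigma)
        if abs(x - x0) < half_width:
            kept.append(y)
    return kept

beta, sigma, x0 = 2.0, 0.5, 1.0
ys_near_x0 = conditional_sample(400_000, beta, sigma, x0, half_width=0.01)

print(f"kept {len(ys_near_x0)} draws with X near {x0}")
print(f"mean     {statistics.fmean(ys_near_x0):.3f}  (theory: beta*x0 = {beta * x0})")
print(f"variance {statistics.variance(ys_near_x0):.3f}  (theory: sigma^2 = {sigma ** 2})")
```

The finite window adds a small extra variance of roughly $\beta^2 (2h)^2 / 12$ for half-width $h$, which is negligible for $h = 0.01$; shrinking the window trades this bias for fewer retained draws.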