"Find out" indicates you are exploring the data. Formal tests would be superfluous and suspect. Instead, apply standard exploratory data analysis (EDA) techniques to reveal what may be in the data.

These standard techniques include re-expression, residual analysis, robust techniques (the "three R's" of EDA) and smoothing of the data as described by John Tukey in his classic book EDA (1977). How to conduct some of these are outlined in my post at Box-Cox like transformation for independent variables? and In linear regression, when is it appropriate to use the log of an independent variable instead of the actual values?, inter alia.

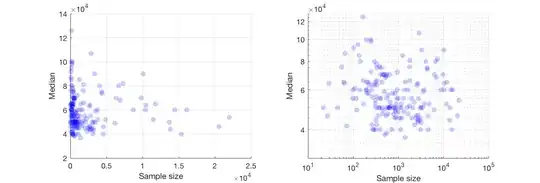

The upshot is that much can be seen by changing to log-log axes (effectively re-expressing both variables), smoothing the data not too aggressively, and examining residuals of the smooth to check what it might have missed, as I will illustrate.

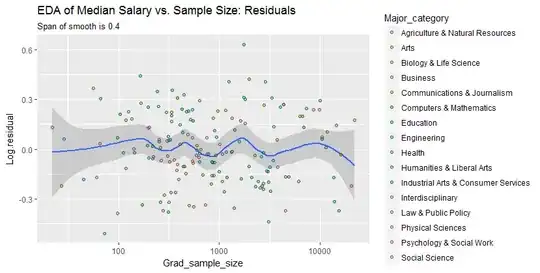

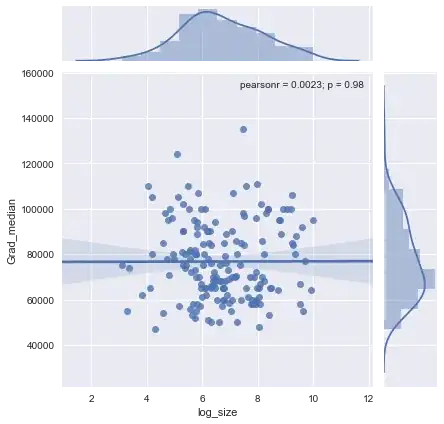

Here are the data shown with a smooth that--after examining several smooths with varying degrees of fidelity to the data--seems like a good compromise between too much and too little smoothing. It uses Loess, a well-known robust method (it is not heavily influenced by vertically outlying points).

The vertical grid is in steps of 10,000. The smooth does suggest some variation of Grad_median with sample size: it seems to drop as sample sizes approach 1000. (The ends of the smooth are not trustworthy--especially for small samples, where sampling error is expected to be relatively large--so don't read too much into them.) This impression of a real drop is supported by the (very rough) confidence bands drawn by the software around the smooth: its "wiggles" are greater than the widths of the bands.

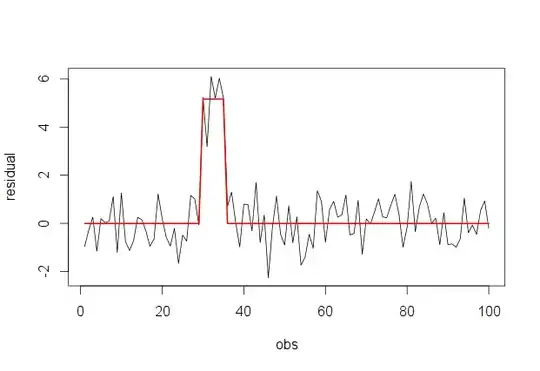

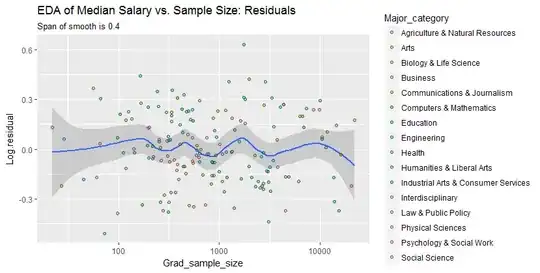

To see what this analysis might have missed, the next figure looks at the residuals. (These are differences of natural logarithms, directly measuring vertical discrepancies between data the preceding smooth. Because they are small numbers they can be interpreted as proportional differences; e.g., $-0.2$ reflects a data value that is about $20\%$ lower than the corresponding smoothed value.)

We are interested in (a) whether there are additional patterns of variation as sample size changes and (b) whether the conditional distributions of the response--the vertical distributions of point positions--are plausibly similar across all values of sample size, or whether some aspect of them (like their spread or symmetry) might change.

This smooth tries to follow the datapoints even more closely than before. Nevertheless it is essentially horizontal (within the scope of the confidence bands, which always cover a y-value of $0.0$), suggesting no further variation can be detected. The slight increase in the vertical spread near the middle (sample sizes of 2000 to 3000) would not be significant if formally tested, and so it surely is unremarkable in this exploratory stage. There is no clear, systematic deviation from this overall behavior apparent in any of the separate categories (distinguished, not too well, by color--I analyzed them separately in figures not shown here).

Consequently, this simple summary:

median salary is about 10,000 lower for sample sizes near 1000

adequately captures the relationships appearing in the data and seems to hold uniformly across all major categories. Whether that is significant--that is, whether it would stand up when confronted with additional data--can only be assessed by collecting those additional data.

For those who would like to check this work or take it further, here is the R code.

library(data.table)

library(ggplot2)

#

# Read the data.

#

infile <- "https://raw.githubusercontent.com/fivethirtyeight/\

data/master/college-majors/grad-students.csv"

X <- as.data.table(read.csv(infile))

#

# Compute the residuals.

#

span <- 0.6 # Larger values will smooth more aggressively

X[, Log.residual :=

residuals(loess(log(Grad_median) ~ I(log(Grad_sample_size)), X, span=span))]

#

# Plot the data on top of a smooth.

#

g <- ggplot(X, aes(Grad_sample_size, Grad_median)) +

geom_smooth(span=span) +

geom_point(aes(fill=Major_category), alpha=1/2, shape=21) +

scale_x_log10() + scale_y_log10(minor_breaks=seq(1e4, 5e5, by=1e4)) +

ggtitle("EDA of Median Salary vs. Sample Size",

paste("Span of smooth is", signif(span, 2)))

print(g)

span <- span * 2/3 # Look for a little more detail in the residuals

g.r <- ggplot(X, aes(Grad_sample_size, Log.residual)) +

geom_smooth(span=span) +

geom_point(aes(fill=Major_category), alpha=1/2, shape=21) +

scale_x_log10() +

ggtitle("EDA of Median Salary vs. Sample Size: Residuals",

paste("Span of smooth is", signif(span, 2)))

print(g.r)