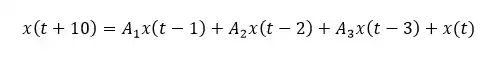

I am trying to design a neural network for time series forecasting using LSTM neurons.

I am stuck because none of the many configurations I have tried so far performs well (in fact, they perform really, really badly).

So I am starting to think that I'm missing something :).

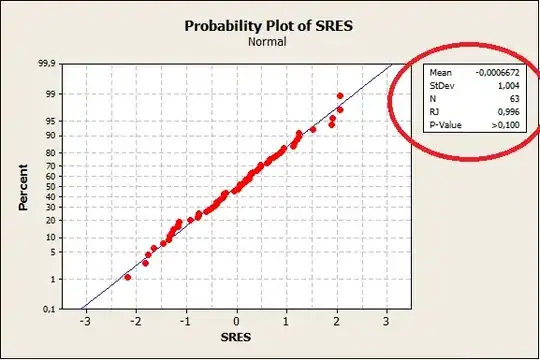

This is the time series that I am using for training. I have a similar time series for testing (the training consists in 2016 data and test is 2017):

What I want is to take T steps of my time series and predict the following P steps, so I turned it into a multi-output regression problem that I can solve with supervised learning: to predict the series from time a+1 to time a+P, the features of each sample are the steps from a-T to a, and consequently the targets (ys) for training are the steps from a+1 to a+P.
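A minimal NumPy sketch of this windowing, assuming the series is a 1-D array. `make_windows` is a hypothetical helper name, and in this sketch each sample's T features are the T values immediately before the P targets (time a-T+1 to a).

```python
import numpy as np

def make_windows(series, T, P):
    """Turn a 1-D series into (X, Y) for multi-output regression.

    Each row of X holds T consecutive values; the matching row of Y
    holds the P values that immediately follow them.
    """
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - T - P + 1
    if n_samples <= 0:
        raise ValueError("series is too short for the requested T and P")
    X = np.stack([series[i:i + T] for i in range(n_samples)])
    Y = np.stack([series[i + T:i + T + P] for i in range(n_samples)])
    return X, Y

# Usage: 10 values, 3 input steps, 2 output steps -> 6 samples
X, Y = make_windows(np.arange(10), T=3, P=2)
print(X.shape, Y.shape)  # (6, 3) (6, 2)
print(X[0], Y[0])        # [0. 1. 2.] [3. 4.]
```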

As the only preprocessing step, I applied different types of scaling (using scikit-learn):

```python
from sklearn.preprocessing import StandardScaler, MinMaxScaler, RobustScaler

stdscaler = StandardScaler()
minmaxScaler = MinMaxScaler(feature_range=(-1, 1))
robustscaler = RobustScaler()
```

Sometimes I combined these methods, but the network always performed badly.
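For reference, a sketch of the usual scikit-learn pattern for a single series, assuming 1-D NumPy arrays (`train_series` and `test_series` below are made-up stand-ins for the 2016 and 2017 data). The scalers expect 2-D input, the scaler is fitted on the training year only and then reused on the test year, and predictions are mapped back with `inverse_transform`. With `MinMaxScaler`, test values outside the training range end up outside (-1, 1).

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up stand-ins for the 2016 (train) and 2017 (test) series
train_series = np.linspace(0.0, 100.0, 50)
test_series = np.linspace(20.0, 120.0, 50)

# scikit-learn scalers expect 2-D input of shape (n_samples, n_features)
scaler = MinMaxScaler(feature_range=(-1, 1))
train_scaled = scaler.fit_transform(train_series.reshape(-1, 1)).ravel()
# Reuse the statistics learned on the training data; do not refit on test
test_scaled = scaler.transform(test_series.reshape(-1, 1)).ravel()

# Model outputs are in scaled units; map them back before comparing with raw data
restored = scaler.inverse_transform(test_scaled.reshape(-1, 1)).ravel()
print(train_scaled.min(), train_scaled.max())  # -1.0 1.0
print(test_scaled.max() > 1.0)                 # True: 2017 exceeds the 2016 range
print(np.allclose(restored, test_series))      # True
```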

After preprocessing and reshaping, I designed the following network:

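```python
# create and fit the LSTM network
# (imports assume tf.keras from TensorFlow 2.x)
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Activation, Dropout, Dense
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.callbacks import EarlyStopping

# NODES_L*, ACTIVATION_L*, DROPOUT_L*, SEQ_LEN, BATCH_SIZE, OUTPUT_TIMESTEPS and EPOCHS
# are hyperparameters set elsewhere; trainX/trainY/validX/validY are the scaled, reshaped data
model = Sequential()
model.add(LSTM(NODES_L1, return_sequences=True, input_shape=(1, SEQ_LEN), stateful=True, batch_size=BATCH_SIZE))
model.add(Activation(ACTIVATION_L1))
model.add(Dropout(DROPOUT_L1))
model.add(LSTM(NODES_L2, stateful=True))
model.add(Activation(ACTIVATION_L2))
model.add(Dropout(DROPOUT_L2))
model.add(Dense(OUTPUT_TIMESTEPS, activation='linear'))

adam = Adam(learning_rate=0.0001)  # `lr` in older Keras versions
model.compile(loss='mean_squared_error', optimizer=adam)

callbacks = [
    EarlyStopping(monitor='val_loss', min_delta=0.00001, patience=5, verbose=0, mode='auto')
]

model.summary()
model.fit(
    trainX,
    trainY,
    shuffle=False,
    batch_size=BATCH_SIZE,
    epochs=EPOCHS,
    validation_data=(validX, validY),
    verbose=2,
    callbacks=callbacks)
```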
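In Keras, LSTM input has shape (samples, timesteps, features), so `input_shape=(1, SEQ_LEN)` feeds each window as a single time step carrying SEQ_LEN features. The sketch below, on random placeholder arrays (the sizes are assumptions), shows that layout next to the alternative of SEQ_LEN time steps with one feature each. It also trims the data to a multiple of BATCH_SIZE, which a stateful LSTM with a fixed batch size generally requires (the same would apply to validX/validY).

```python
import numpy as np

# Placeholder windowed data: 103 samples, SEQ_LEN input steps, 6 output steps
SEQ_LEN, BATCH_SIZE = 24, 25
rng = np.random.default_rng(0)
trainX = rng.random((103, SEQ_LEN))
trainY = rng.random((103, 6))

# (samples, 1, SEQ_LEN): one time step with SEQ_LEN features -> input_shape=(1, SEQ_LEN)
one_step = trainX.reshape(-1, 1, SEQ_LEN)
# (samples, SEQ_LEN, 1): SEQ_LEN time steps with one feature -> input_shape=(SEQ_LEN, 1)
many_steps = trainX.reshape(-1, SEQ_LEN, 1)

# Keep only a whole number of batches, preserving the time order
n_keep = (len(trainX) // BATCH_SIZE) * BATCH_SIZE
many_steps_trim, trainY_trim = many_steps[:n_keep], trainY[:n_keep]
print(one_step.shape, many_steps_trim.shape, trainY_trim.shape)
# (103, 1, 24) (100, 24, 1) (100, 6)
```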

Two LSTM layers and one Dense layer at the end to predict the P time steps. Both the training loss and the validation loss decrease quite well; this is the output I see when I train the network:

```
4s - loss: 3.8233 - val_loss: 0.6118
Epoch 2/100
3s - loss: 3.4985 - val_loss: 0.6668
Epoch 3/100
3s - loss: 3.1761 - val_loss: 0.6724
Epoch 4/100
3s - loss: 2.7586 - val_loss: 0.5258
Epoch 5/100
3s - loss: 2.3404 - val_loss: 0.3424
Epoch 6/100
3s - loss: 2.0137 - val_loss: 0.2554
Epoch 7/100
3s - loss: 1.7479 - val_loss: 0.1697
Epoch 8/100
3s - loss: 1.5935 - val_loss: 0.1564
Epoch 9/100
3s - loss: 1.3824 - val_loss: 0.1206
Epoch 10/100
3s - loss: 1.2645 - val_loss: 0.0699
Epoch 11/100
3s - loss: 1.1752 - val_loss: 0.0619
Epoch 12/100
3s - loss: 1.1283 - val_loss: 0.0711
```

And so on (until early stopping kills everything).

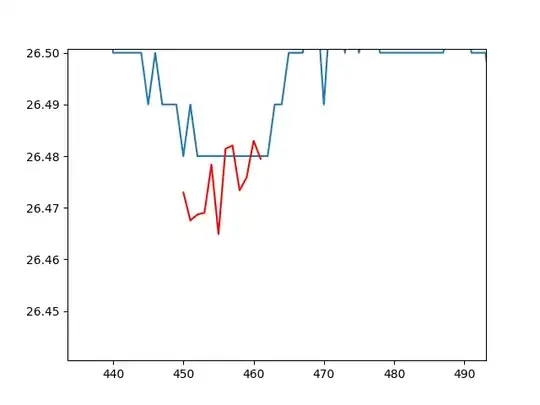

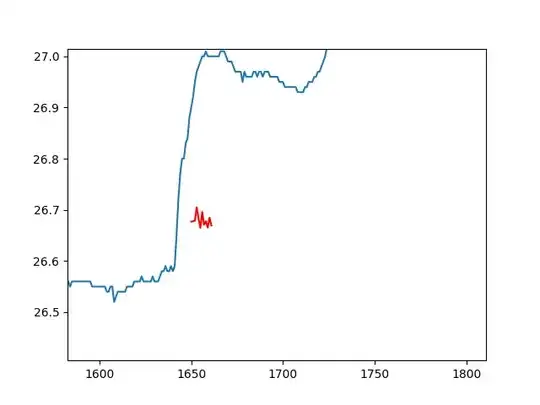

After the training step, I try to do some predictions, here the results:

Not even close.
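To put a number on results like these, one option is to undo the scaling and compute the RMSE for each of the P forecast steps, next to a naive baseline that repeats the last observed value. A sketch under these assumptions: the arrays are tiny made-up placeholders for the scaled test windows and the `model.predict` output, the scaler was fitted on a single training series, and `rmse_per_step` / `to_original_units` are hypothetical helpers.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

def rmse_per_step(y_true, y_pred):
    """RMSE for each of the P forecast steps (one value per column)."""
    return np.sqrt(np.mean((y_true - y_pred) ** 2, axis=0))

def to_original_units(values_scaled, scaler):
    """Invert a scaler fitted on a single series, keeping the (samples, P) shape."""
    flat = scaler.inverse_transform(values_scaled.reshape(-1, 1))
    return flat.reshape(values_scaled.shape)

# Placeholders: a scaler fitted on the training series, scaled test windows,
# and model predictions (in practice: pred = model.predict(...))
scaler = StandardScaler().fit(np.array([[0.0], [1.0], [2.0]]))
testX = np.array([[0.1, 0.2, 0.3], [0.2, 0.3, 0.4]])  # (samples, T)
testY = np.array([[0.4, 0.5], [0.5, 0.6]])            # (samples, P)
pred = np.array([[0.35, 0.45], [0.45, 0.55]])         # (samples, P)

y_true = to_original_units(testY, scaler)
model_rmse = rmse_per_step(y_true, to_original_units(pred, scaler))

# Naive persistence baseline: repeat the last observed input value for all P steps
naive = np.repeat(testX[:, -1:], testY.shape[1], axis=1)
naive_rmse = rmse_per_step(y_true, to_original_units(naive, scaler))

print("model RMSE per step:", model_rmse)
print("naive RMSE per step:", naive_rmse)
```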

I tried to find the best hyperparameters with hyperopt; this is the search space I used (maybe I am wrong about the size of the network):

```python
from hyperopt import hp

fspace = {
    'SEQ_LEN': hp.choice('SEQ_LEN', range(5, 48)),
    'NODES_L1': hp.choice('NODES_L1', range(1, 80)),
    'NODES_L2': hp.choice('NODES_L2', range(1, 80)),
    'DROPOUT_L1': hp.uniform('DROPOUT_L1', 0.05, 0.8),
    'DROPOUT_L2': hp.uniform('DROPOUT_L2', 0.05, 0.8),
    'BATCH_SIZE': hp.choice('BATCH_SIZE', range(25, 150)),
    'ACTIVATION_L1': hp.choice('ACTIVATION_L1', ['relu', 'tanh']),
    'ACTIVATION_L2': hp.choice('ACTIVATION_L2', ['relu', 'tanh']),
    'EPOCHS': hp.choice('EPOCHS', range(15, 1000))
}
```

where SEQ_LEN is T, the number of time steps used as features.

The question is: how can I improve my process? What am I doing wrong? I double-checked my code and all the data seems to be loaded correctly, so I think it's a methodological problem.

Thank you for any clue ;)