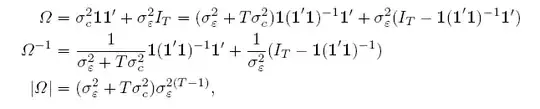

This question is related to this one, but it is different. I tested two different learning methods on a fixed convolutional neural network structure (with the same hyper-parameters). As is clear from the plot, the prob method overfits the training data: its training error is nearly zero after the third epoch. However, its validation error is also lower than that of the naive method. My question is this: can we say that the prob method is better than the naive one, even though it overfits more severely?

Hossein

2 Answers

Depends on what you mean by "better". If all you care about is validation error, then "prob" is clearly better than "naive". That said, the two get close by the end, and the validation error of "naive" is still going down, so if you trained it for a few more epochs it might even outperform "prob".

But it is worth exploring what kind of examples "prob" and "naive" get wrong. If they are wrong in different ways (which is what was suggested in the other thread), ensembling the two models may give you even better results.
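To make that comparison concrete, here is a minimal sketch in Python with NumPy. It counts which validation examples each model gets wrong and then averages the predicted class probabilities as a simple ensemble. The arrays `proba_prob` and `proba_naive` and all the numbers are made-up placeholders, not results from the question; in practice you would fill them with each model's predicted probabilities on the validation set.

```python
import numpy as np

def error_overlap(y_true, pred_a, pred_b):
    """Count examples misclassified only by A, only by B, or by both."""
    wrong_a = pred_a != y_true
    wrong_b = pred_b != y_true
    return {
        "only_a": int(np.sum(wrong_a & ~wrong_b)),
        "only_b": int(np.sum(~wrong_a & wrong_b)),
        "both": int(np.sum(wrong_a & wrong_b)),
    }

def average_ensemble(proba_a, proba_b):
    """Average the class probabilities and pick the most likely class."""
    return np.argmax((proba_a + proba_b) / 2.0, axis=1)

# Hypothetical toy data: 4 validation examples, 2 classes (made-up values).
y_true = np.array([0, 1, 1, 0])
proba_prob = np.array([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]])
proba_naive = np.array([[0.6, 0.4], [0.55, 0.45], [0.1, 0.9], [0.8, 0.2]])

pred_prob = np.argmax(proba_prob, axis=1)
pred_naive = np.argmax(proba_naive, axis=1)

overlap = error_overlap(y_true, pred_prob, pred_naive)
ensemble_pred = average_ensemble(proba_prob, proba_naive)
ensemble_error = float(np.mean(ensemble_pred != y_true))

print(overlap)
print(ensemble_error)
```

If the "both" count is small relative to "only_a" and "only_b", the models err on different examples and averaging has a chance to help. If nearly all errors are shared, an ensemble is unlikely to gain much.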

rinspy

Overfitting is when the training error goes down but the validation error goes up. In your graph, it doesn't look like the validation error is going up very much at all. I think you don't have an overfitting problem here.
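As a rough illustration, the check below applies this criterion (validation error rising over the last few epochs) to per-epoch error curves, and also reports the final gap between training and validation error, which is another quantity people often look at. The function names, the `patience` parameter, and the error values are all hypothetical; they are not read off the plot in the question.

```python
def validation_rising(val_errors, patience=2):
    """True if validation error increased in each of the last `patience` epochs."""
    if len(val_errors) <= patience:
        return False
    tail = val_errors[-(patience + 1):]
    return all(later > earlier for earlier, later in zip(tail, tail[1:]))

def final_gap(train_errors, val_errors):
    """Validation error minus training error at the last epoch."""
    return val_errors[-1] - train_errors[-1]

# Hypothetical curves: training error collapses, validation error keeps falling slowly.
train_errors = [0.30, 0.10, 0.01, 0.005]
val_errors = [0.35, 0.20, 0.15, 0.14]

rising = validation_rising(val_errors)
gap = final_gap(train_errors, val_errors)

print(rising)
print(gap)
```

With curves shaped like these, the validation error is not rising, so the first check does not flag overfitting, even though the gap between the two curves is large.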

Aaron

- Thanks for your response. I think another definition of overfitting is a significant difference between the training and validation errors. By this definition, I do have an overfitting problem. – Hossein Aug 15 '17 at 16:41

- By that definition, you do have overfitting but it's not necessarily a problem :) – rinspy Aug 15 '17 at 16:44

- There can be other reasons for a gap between training and validation errors. For example, there can be a mismatch between the training and the validation data. – Aaron Aug 15 '17 at 17:00

- @Aaron Isn't "mismatch between the training and validation data" what we call "noise"? I.e. it is still the same overfitting, it's just that increasing the number of samples will not increase the signal-to-noise ratio. See also this answer: https://stats.stackexchange.com/questions/274952/how-to-assess-if-my-training-examples-are-too-similar/283697#283697 – rinspy Aug 16 '17 at 07:55