For practical reasons I need to eliminate some columns of a matrix based on how close they are to being a linear combination of other columns (Not looking for a regularizing method like LASSO or anything here -- the constraints are related to hardware capabilities). I feel like this should be a standard test of some kind as opposed to a custom for-loop over the columns in which I fit a linear model of the other variables to get an L2 distance (or similar metric) to sort by. Ideally it could be done in an iterative fashion down to a certain number of columns (features) that I preset. Is there some standard method for this?

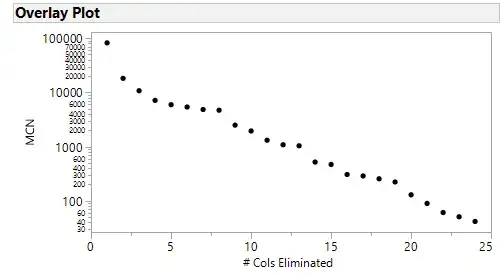

Note #1: I did try iterative elimination of columns based on the column which caused the largest decrease in matrix condition number as recommended by some (I standardized all the columns first). I found the pattern very interesting in that it wasn't a smooth curve (after logrithmic transformation) but had "intervals" if you will of decaying change with a sort of discontinuous jump between them. Is this expected behavior?

Note #2: I also took another look at PCA in terms of the Eigenvectors associated with the smallest eigenvalue, but the process of sequential elimination wasn't as obvious as the matrix condition number.