I'm training a CNN model for binary classification. The confusion matrix and the corresponding performance measures on the validation set are as follows:

ans =

    11046    177
      561   9306

accuracy: 0.965007

sensitivity: 0.943144

specificity: 0.984229

precision: 0.981335

recall: 0.943144

f_measure: 0.961860

gmean: 0.963467
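As a sanity check, here is a minimal Python sketch of how these measures follow from the confusion matrix above. It assumes rows are true classes and columns are predicted classes, with the first class as negative and the second as positive; that layout is an assumption, chosen because it reproduces the reported numbers. The function name `binary_metrics` is hypothetical and used only for illustration.

```python
import math

# Confusion matrix from the validation set.
# Assumed layout: rows = true class, columns = predicted class,
# first class = negative, second class = positive.
tn, fp = 11046, 177
fn, tp = 561, 9306


def binary_metrics(tn, fp, fn, tp):
    """Compute standard binary-classification measures from the four counts."""
    total = tn + fp + fn + tp
    sensitivity = tp / (tp + fn)   # true positive rate, identical to recall
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "recall": sensitivity,
        # F-measure: harmonic mean of precision and recall
        "f_measure": 2 * precision * sensitivity / (precision + sensitivity),
        # G-mean: geometric mean of sensitivity and specificity
        "gmean": math.sqrt(sensitivity * specificity),
    }


metrics = binary_metrics(tn, fp, fn, tp)
for name, value in metrics.items():
    print(f"{name}: {value:.6f}")
```

Under that assumed layout, the printed values agree with the ones listed above, so sensitivity and recall are the same quantity here.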

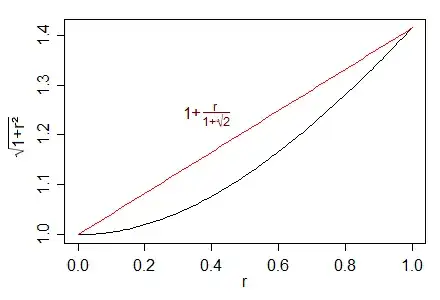

It seems the results are good. But the training loss seems bad, as shown as follows:

Is this a good model?