I have a small sample of marks obtained by a group of students and I would like to apply a binomial test to check if more than half of the size of the classroom has failed due to the application of a new technique for teaching music named X. My data is in vigesimal format and is the following:

data1=c(11,4,6,2,11,7,1,9,1,13,1,13,10,7,10,12,13,4,1,14,1,9,1,10,4)

data2=c(10,4,10,5,15,10,17,15,15,11,14,9,12,15,15,10,8,3,12)

The first set of data followed the course by using this new technique, while in the second set are those students that follow the traditional methodology. In this system, a mark of 11 or greater is considered for passing the course.

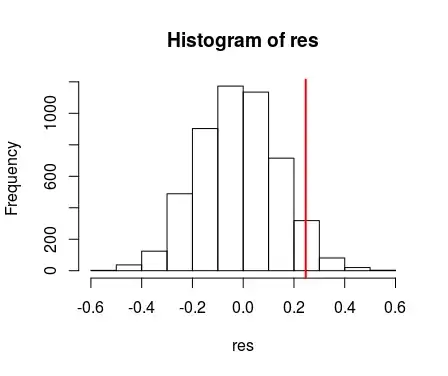

I have applied hist() and qqnorm() from R and I have the suspicion that the data is not normally distributed.

When I was checking about hypothesis testing I have been reading about the bootstrapping technique for small samples in:

http://www.stat.ucla.edu/~rgould/110as02/bshypothesis.pdf

The question that I have is how can I apply bootstrapping by using R to check it up if my hypothesis holds or not. I mean that the new methodology will make more students to fail the subject. Also, I have read about the possibility of doing permutation tests due to the limitations of bootstrapping, but I am really a newbie in the field of statistics.

Any help?